- dont_log_me_out : anti semetic statements

- 76

- 167

A perfectly valid female named Isabelle Belato applied for adminship.

Athaenara, who at the time was a sysop and (most likely) a legacy foid, was opposed:

This blatant act of transphobia cannot go unpunished. Therefore another perfectly valid woman, LilianaUwU, decided to report her:

That immediately resulted in her getting blocked. However, it should be noted that because of autism, Wikipedia distinguishes between blocking and banning. The former is a technical measure that prevents the user from editing, while the latter is a purely procedural thing which may be enforced using the former.

Naturally, a request to ban her was also filed, this time by a yet another perfectly valid woman, Tamzin. I recommend reading the discussion because it lists the problematic behaviors exhibited by the bigoted ex-sysop in the past. It's shocking she wasn't banned earlier!

Additionally, a motion to strip her of her admin privileges was filed and granted.

Unfortunately, some chud unblocked her, but it was quickly reversed:

- 99

- 166

Orange site: https://news.ycombinator.com/item?id=32444470

DALL-E 2, OpenAI's powerful text-to-image AI system, can create photos in the style of cartoonists, 19th century daguerreotypists, stop-motion animators and more. But it has an important, artificial limitation: a filter that prevents it from creating images depicting public figures and content deemed too toxic.

Now an open source alternative to DALL-E 2 is on the cusp of being released, and it'll have no such filter.

London- and Los Altos-based startup Stability AI this week announced the release of a DALL-E 2-like system, Stable Diffusion, to just over a thousand researchers ahead of a public launch in the coming weeks. A collaboration between Stability AI, media creation company RunwayML, Heidelberg University researchers and the research groups EleutherAI and LAION, Stable Diffusion is designed to run on most high-end consumer hardware, generating 512×512-pixel images in just a few seconds given any text prompt.

"Stable Diffusion will allow both researchers and soon the public to run this under a range of conditions, democratizing image generation," Stability AI CEO and founder Emad Mostaque wrote in a blog post. "We look forward to the open ecosystem that will emerge around this and further models to truly explore the boundaries of latent space."

But Stable Diffusion's lack of safeguards compared to systems like DALL-E 2 poses tricky ethical questions for the AI community. Even if the results aren't perfectly convincing yet, making fake images of public figures opens a large can of worms. And making the raw components of the system freely available leaves the door open to bad actors who could train them on subjectively inappropriate content, like pornography and graphic violence.

Creating Stable Diffusion

Stable Diffusion is the brainchild of Mostaque. Having graduated from Oxford with a Masters in mathematics and computer science, Mostaque served as an analyst at various hedge funds before shifting gears to more public-facing works. In 2019, he co-founded Symmitree, a project that aimed to reduce the cost of smartphones and internet access for people living in impoverished communities. And in 2020, Mostaque was the chief architect of Collective & Augmented Intelligence Against COVID-19, an alliance to help policymakers make decisions in the face of the pandemic by leveraging software.

He co-founded Stability AI in 2020, motivated both by a personal fascination with AI and what he characterized as a lack of "organization" within the open source AI community.

“Nobody has any voting rights except our 75 employees — no billionaires, big funds, governments or anyone else with control of the company or the communities we support. We’re completely independent,” Mostaque told TechCrunch in an email. “We plan to use our compute to accelerate open source, foundational AI.”

Mostaque says that Stability AI funded the creation of LAION 5B, an open source, 250-terabyte dataset containing 5.6 billion images scraped from the internet. (“LAION” stands for Large-scale Artificial Intelligence Open Network, a nonprofit organization with the goal of making AI, datasets and code available to the public.) The company also worked with the LAION group to create a subset of LAION 5B called LAION-Aesthetics, which contains AI-filtered images ranked as particularly “beautiful” by testers of Stable Diffusion.

The initial version of Stable Diffusion was based on LAION-400M, the predecessor to LAION 5B, which was known to contain depictions of sex, slurs and harmful stereotypes. LAION-Aesthetics attempts to correct for this, but it’s too early to tell to what extent it’s successful.

In any case, Stable Diffusion builds on research incubated at OpenAI as well as Runway and Google Brain, one of Google's AI R&D divisions. The system was trained on text-image pairs from LAION-Aesthetics to learn the associations between written concepts and images, like how the word "bird" can refer not only to bluebirds but parakeets and bald eagles, as well as more abstract notions.

At runtime, Stable Diffusion --- like DALL-E 2 --- breaks the image generation process down into a process of "diffusion." It starts with pure noise and refines an image over time, making it incrementally closer to a given text description until there's no noise left at all.
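The refinement loop described above can be illustrated with a toy sketch. This is not Stable Diffusion's actual sampler: a real model uses a trained neural network to predict the noise to remove at each step, whereas the hypothetical `toy_denoise` below cheats by looking at a known target vector. The function name, step count and noise scale are all assumptions made for illustration.

```python
import random


def toy_denoise(target, steps=50, seed=0):
    """Toy illustration of iterative refinement from noise (not a real diffusion sampler)."""
    rng = random.Random(seed)
    # Start from pure Gaussian noise, one value per "pixel".
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in range(steps, 0, -1):
        # Remaining noise shrinks linearly and reaches exactly 0 on the last step.
        noise_scale = 0.1 * (t - 1) / steps
        # Assumption: we know the target. A trained model would instead
        # estimate the direction to move in from the text prompt.
        x = [
            xi + (ti - xi) / t + noise_scale * rng.gauss(0.0, 1.0)
            for xi, ti in zip(x, target)
        ]
    return x


if __name__ == "__main__":
    print(toy_denoise([0.5, -1.0, 2.0]))
```

Each pass moves the sample a little closer to the target while injecting less noise than the pass before, so the last step leaves no noise at all.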

Stability AI used a cluster of 4,000 Nvidia A100 GPUs running in AWS to train Stable Diffusion over the course of a month. CompVis, the machine vision and learning research group at Ludwig Maximilian University of Munich, oversaw the training, while Stability AI donated the compute power.

Stable Diffusion can run on graphics cards with around 5GB of VRAM. That’s roughly the capacity of mid-range cards like Nvidia’s GTX 1660, priced around $230. Work is underway on bringing compatibility to AMD’s MI200 data center cards and even MacBooks with Apple’s M1 chip (although in the case of the latter, without GPU acceleration, image generation will take as long as a few minutes).

“We have optimized the model, compressing the knowledge of over 100 terabytes of images,” Mostaque said. “Variants of this model will be on smaller datasets, particularly as reinforcement learning with human feedback and other techniques are used to take these general digital brains and make them even smaller and focused.”

For the past few weeks, Stability AI has allowed a limited number of users to query the Stable Diffusion model through its Discord server, slowly increasing the maximum number of queries to stress-test the system. Stability AI says that more than 15,000 testers have used Stable Diffusion to create 2 million images a day.

Far-reaching implications

Stability AI plans to take a dual approach in making Stable Diffusion more widely available. It'll host the model in the cloud, allowing people to continue using it to generate images without having to run the system themselves. In addition, the startup will release what it calls "benchmark" models under a permissive license that can be used for any purpose --- commercial or otherwise --- as well as compute to train the models.

That will make Stability AI the first to release an image generation model nearly as high-fidelity as DALL-E 2. While other AI-powered image generators have been available for some time, including Midjourney, NightCafe and Pixelz.ai, none have open sourced their frameworks. Others, like Google and Meta, have chosen to keep their technologies under tight wraps, allowing only select users to pilot them for narrow use cases.

Stability AI will make money by training "private" models for customers and acting as a general infrastructure layer, Mostaque said --- presumably with a sensitive treatment of intellectual property. The company claims to have other commercializable projects in the works, including AI models for generating audio, music and even video.

“We will provide more details of our sustainable business model soon with our official launch, but it is basically the commercial open source software playbook: services and scale infrastructure,” Mostaque said. “We think AI will go the way of servers and databases, with open beating proprietary systems — particularly given the passion of our communities.”

With the hosted version of Stable Diffusion (the one available through Stability AI’s Discord server), Stability AI doesn’t permit every kind of image generation. The startup’s terms of service ban some lewd or sexual material (although not scantily-clad figures), hateful or violent imagery (such as antisemitic iconography, racist caricatures, misogynistic and misandrist propaganda), prompts containing copyrighted or trademarked material, and personal information like phone numbers and Social Security numbers. But while Stability AI has implemented a keyword filter in the server similar to OpenAI’s, which prevents the model from even attempting to generate an image that might violate the usage policy, it appears to be more permissive than most.
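A keyword filter of the kind described above can be sketched in a few lines. This is only a guess at the general shape: the article does not say how Stability AI's or OpenAI's filter is implemented, and the blocklist entries, `is_prompt_allowed` and `generate` below are hypothetical placeholders.

```python
import re

# Hypothetical placeholder terms; a real deployment would load its own list.
BLOCKED_TERMS = frozenset({"exampleblockedterm", "anotherblockedterm"})


def is_prompt_allowed(prompt, blocked=BLOCKED_TERMS):
    """Return True when no blocked term appears as a whole word in the prompt."""
    words = set(re.findall(r"[a-z0-9']+", prompt.lower()))
    return words.isdisjoint(blocked)


def generate(prompt, model):
    """Call the model only if the prompt passes the filter; otherwise return None."""
    if not is_prompt_allowed(prompt):
        return None  # the model is never invoked for a rejected prompt
    return model(prompt)


if __name__ == "__main__":
    fake_model = lambda p: "image for: " + p  # stand-in for the real generator
    print(generate("a bird on a branch", fake_model))
    print(generate("an ExampleBlockedTerm poster", fake_model))
```

Because the check runs before the model call, a rejected prompt never reaches the generator, which matches the "prevents the model from even attempting" behavior. Whole-word matching on a fixed list is also easy to evade, which is one reason such filters end up more or less permissive in practice.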

Stability AI also doesn't have a policy against images with public figures. That presumably makes deepfakes fair game (and Renaissance-style paintings of famous rappers), though the model struggles with faces at times, introducing odd artifacts that a skilled Photoshop artist rarely would.

"Our benchmark models that we release are based on general web crawls and are designed to represent the collective imagery of humanity compressed into files a few gigabytes big," Mostaque said. "Aside from illegal content, there is minimal filtering, and it is on the user to use it as they will."

Potentially more problematic are the soon-to-be-released tools for creating custom and fine-tuned Stable Diffusion models. An "AI furry porn generator" profiled by Vice offers a preview of what might come; an art student going by the name of CuteBlack trained an image generator to churn out illustrations of anthropomorphic animal genitalia by scraping artwork from furry fandom sites. The possibilities don't stop at pornography. In theory, a malicious actor could fine-tune Stable Diffusion on images of riots and gore, for instance, or propaganda.

Already, testers in Stability AI's Discord server are using Stable Diffusion to generate a range of content disallowed by other image generation services, including images of the war in Ukraine, nude women, an imagined Chinese invasion of Taiwan and controversial depictions of religious figures like the Prophet Muhammad. Doubtless, some of these images are against Stability AI's own terms, but the company is currently relying on the community to flag violations. Many bear the telltale signs of an algorithmic creation, like disproportionate limbs and an incongruous mix of art styles. But others are passable on first glance. And the tech will continue to improve, presumably.

Mostaque acknowledged that the tools could be used by bad actors to create "really nasty stuff," and CompVis says that the public release of the benchmark Stable Diffusion model will "incorporate ethical considerations." But Mostaque argues that --- by making the tools freely available --- it allows the community to develop countermeasures.

"We hope to be the catalyst to coordinate global open source AI, both independent and academic, to build vital infrastructure, models and tools to maximize our collective potential," Mostaque said. "This is amazing technology that can transform humanity for the better and should be open infrastructure for all."

Not everyone agrees, as evidenced by the controversy over "GPT-4chan," an AI model trained on one of 4chan's infamously toxic discussion boards. AI researcher Yannic Kilcher made GPT-4chan --- which learned to output racist, antisemitic and misogynist hate speech --- available earlier this year on Hugging Face, a hub for sharing trained AI models. Following discussions on social media and Hugging Face's comment section, the Hugging Face team first "gated" access to the model before removing it altogether, but not before it was downloaded more than a thousand times.

Meta's recent chatbot fiasco illustrates the challenge of keeping even ostensibly *safe* models from going off the rails. Just days after making its most advanced AI chatbot to date, BlenderBot 3, available on the web, Meta was forced to confront media reports that the bot made frequent antisemitic comments and repeated false claims about former U.S. President Donald Trump winning reelection two years ago.

The publisher of AI Dungeon, Latitude, encountered a similar content problem. Some players of the text-based adventure game, which is powered by OpenAI's text-generating GPT-3 system, observed that it would sometimes bring up extreme sexual themes, including pedophilia --- the result of fine-tuning on fiction stories with gratuitous sex. Facing pressure from OpenAI, Latitude implemented a filter and started automatically banning gamers for purposefully prompting content that wasn't allowed.

BlenderBot 3's toxicity came from biases in the public websites that were used to train it. It's a well-known problem in AI --- even when fed filtered training data, models tend to amplify biases like photo sets that portray men as executives and women as assistants. With DALL-E 2, OpenAI has attempted to combat this by implementing techniques, including dataset filtering, that help the model generate more "diverse" images. But some users claim that they've made the model less accurate than before at creating images based on certain prompts.

Stable Diffusion contains little in the way of mitigations besides training dataset filtering. So what's to prevent someone from generating, say, photorealistic images of protests, "evidence" of fake moon landings and general misinformation? Nothing really. But Mostaque says that's the point.

"A percentage of people are simply unpleasant and weird, but that's humanity," Mostaque said. "Indeed, it is our belief this technology will be prevalent, and the paternalistic and somewhat condescending attitude of many AI aficionados is misguided in not trusting society ... We are taking significant safety measures including formulating cutting-edge tools to help mitigate potential harms across release and our own services. With hundreds of thousands developing on this model, we are confident the net benefit will be immensely positive and as billions use this tech harms will be negated."


af lol

af lol

- 103

- 165

It was well understood that this is what was happening when Kiwis were continually banished off host after host, but  didn't have any way he could prove it until now. He's pondered legal action against  in the past so here's hoping for a court case sneed.

"Katherine L. and I invited Nitasha Tiku to see the work that our multi-disciplinary, all-volunteer team has been doing over the past year as Kiwi Farms was de-platformed from over 32 providers located in 22 countries that found it in violation of their Acceptable Use Policies. By our count, we set in motion more than 24 of those terminations with our abuse reports and our professional follow-ups to ensure that they were actioned. Because of needing to repeatedly find and change to new providers, the site achieved approximately 50-60% reliability as observed from US consumer ISPs over the past year, as opposed to the 99.5% or higher reliability a typical law-abiding website with competent administration will offer.

Thousands of hours of thankless work went into taking this single site offline; even then, hopping to a new "shithost" provider takes merely hours of work, and then following up to show the new provider is in cahoots with the abuse and unresponsive to complaints can take dozens or hundreds of hours and weeks to months of wall time. This is not repeatable, nor should it _have_ to ever be repeated. There is no slippery slope here, least of all because the vast majority of sites on the Internet comply with their upstream AUPs.

Even when there is blatant breaking of both private contracts and the law, if it takes hundreds to thousands of hours of volunteer time to get it actioned, there is no real enforcement accessible to the average victim. And governments _already_ can skip all of this and raid or order sites offline if they so choose. While I hope that one day there will be criminal consequences for those who perpetuate harassment online, I'm not holding my breath waiting for the government. Not to mention, which government? Companies in 22 countries were involved in this international takedown effort.

I'm protecting myself, and future generations of transgender and neurodivergent people. Don't just be a bystander, take action if you see injustice (and clear breaches of contract!). A huge huge huge thank you to everyone on our volunteer team, and everyone in the tech community who listened to us.

Katherine L. and I are going to be taking a break from this work for a while, as it's extremely burnout inducing. "Clay" is well positioned to continue this work, and further blackmail or intimidation against me is not going to deter Kat, me, Clay, or the rest of our teams from finishing the job."

https://archive.ph/xbhN5 (LinkedIn Post)

He announced this on his Telegram as well:

- 71

- 165

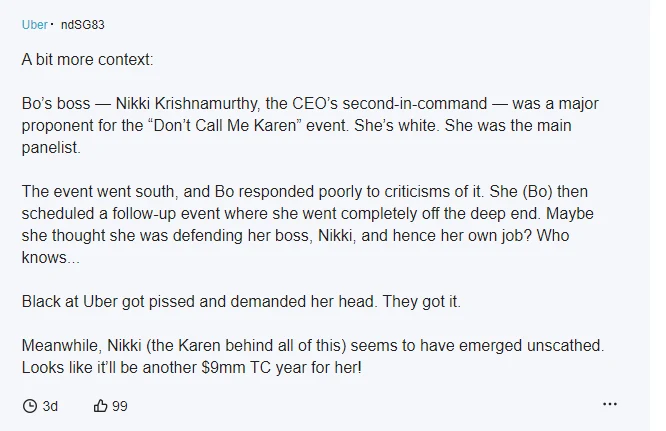

Team Blind item, so here is a recap for those who don't have access to anything other than gmail.

Explanation of what happened:

Btw, that's a white woman in that email with an Indian last name. Lots of questions on how that happened in there, but that's an aside.

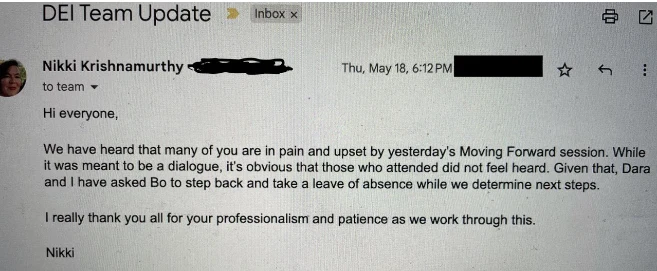

The email that went out letting everyone know that the white supremacist has been fired:

The white supremacist in question:

More article text on it that explains all the BIPOCs crying about their oppression::

Uber has placed its longtime head of diversity, equity and inclusion on leave after workers complained that an employee event she moderated, titled “Don’t Call Me Karen,” was insensitive to people of color.

Dara Khosrowshahi, Uber’s chief executive, and Nikki Krishnamurthy, the chief people officer, last week asked Bo Young Lee, the head of diversity, “to step back and take a leave of absence while we determine next steps,” according to an email on Thursday from Ms. Krishnamurthy to some employees that was viewed by The New York Times.

“We have heard that many of you are in pain and upset by yesterday’s Moving Forward session,” the email said. “While it was meant to be a dialogue, it’s obvious that those who attended did not feel heard.”

Employees’ concerns centered on a pair of events, one last month and another last Wednesday, that were billed as “diving into the spectrum of the American white woman’s experience” and hearing from white women who work at Uber, with a focus on “the ‘Karen’ persona.” They were intended to be an “open and honest conversation about race,” according to the invitation.

But workers instead felt that they were being lectured on the difficulties experienced by white women and why “Karen” was a derogatory term and that Ms. Lee was dismissive of their concerns, according to messages sent on Slack, a workplace messaging tool, that were viewed by The Times.

The term Karen has become slang for a white woman with a sense of entitlement who often complains to a manager and reports Black people and other racial minorities to the authorities. Employees felt the event organizers were minimizing racism and the harm white people can inflict on people of color by focusing on how “Karen” is a hurtful word, according to the messages and an employee who attended the events. A prominent “Karen” incident occurred in 2020, when Amy Cooper, a white woman, called 911 after a Black man bird-watching in New York’s Central Park asked her to leash her dog.

The concerns raised about the events underscored the difficulties that companies face as they navigate subjects of race and identity that have become increasingly hot-button issues in Silicon Valley and beyond. Cultural clashes over race and L.G.B.T.Q. rights have been thrust to the forefront of workplaces in recent years, including the renewed attention to discrimination in company hiring practices and the feud between Gov. Ron DeSantis of Florida and Disney over a state law that limits classroom instruction about gender identity and sexual orientation.

At Uber, the incident was also a rare case of employee dissent under Mr. Khosrowshahi, who has shepherded the company away from the aggressive, chaotic culture that pervaded under the former chief executive, Travis Kalanick. Mr. Khosrowshahi’s efforts included increased diversity initiatives under Ms. Lee, who has led the effort since 2018. Before joining Uber, she held similar roles at the financial services firm Marsh McLennan and other companies, according to her LinkedIn profile.

“I can confirm that Bo is currently on a leave of absence,” Noah Edwardsen, an Uber spokesman, said in a statement. Ms. Lee did not respond to a request for comment.

The first of the two Don’t Call Me Karen events, in April, was part of a series called Moving Forward — discussions about race and the experiences of underrepresented groups that sprung up in the aftermath of the Black Lives Matter protests in 2020.

Several weeks after that first event, a Black woman asked during an Uber all-hands meeting how the company would prevent “tone-deaf, offensive and triggering conversations” from becoming a part of its diversity initiatives.

Ms. Lee fielded the question, arguing that the Moving Forward series was aimed at having tough conversations and not intended to be comfortable.

“Sometimes being pushed out of your own strategic ignorance is the right thing to do,” she said, according to notes taken by an employee who attended the event. The comment prompted more employee outrage and complaints to executives, according to the Slack messages and the employee.

The second of the two events, run by Ms. Lee, was intended to be a dialogue where workers discussed what they had heard in the earlier meeting.

But in Slack groups for Black and Latinx employees at Uber, workers fumed that instead of a chance to provide feedback or have a dialogue, they were instead being lectured about their response to the initial Don’t Call Me Karen event.

“I felt like I was being scolded for the entirety of that meeting,” one employee wrote.

Another employee took issue with the premise that the term Karen shouldn’t be used.

“I think when people are called Karens it’s implied that this is someone that has little empathy to others or is bothered by minorities others that don’t look like them. Like why can’t bad behavior not be called out?” she wrote.

Employees greeted the news that Ms. Lee was stepping away as a sign that Uber’s leadership was taking their complaints seriously.

One employee wrote that the company’s executives “have heard us, they know we are hurting, and they want to understand what all happened too.”

- 23

- 165

Context

The story begins on http://leaked.cx, an unreleased music forum/board/marketplace. Basically, if you don't know, artists have lots of music they don't release for whatever reason (unfinished, they just don't like it, get lazy, etc). At the same time, songs are increasingly worked on by more and more people (producers, mixing assistants, contributors), evidently with worse and worse IT security. Through some combination of credential stuffing, SIM swapping, or basic social engineering, these files end up in the hands of "leakers" (also known as "sellers").

Once the sellers have the files, they typically either "vault" them (keep them to themselves) or sell them to buyers for money (typically bitcoin). There are typically two methods of selling:

1. Private selling - The leaker sells a song to one buyer away from public view. This is "supposed" to only happen one time, since the more people have a song, the less it is worth. But the only method of enforcement is some dumbass "honor among thieves" type honor code, so in reality, pretty much every sale happens at least two or three times, to two or three different people. This is called "double-selling" or "triple-selling."

2. Groupbuys - The leaker approaches a Discord community for an artist and lets them set up a crowdfund of sorts. Fans each pitch in 5-10 dollars, raise a few thousand, and send it to the leaker. The leaker then releases the song files publicly.

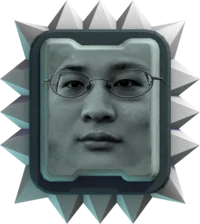

FrankHub is a Discord server that hosts Frank Ocean-related leaked song discussion and group buys.

What happened

An incredibly based user named MourningAssassin (MA) utilized AI to produce 10 fake tracks using the vocals of Frank Ocean. He then posted one of these tracks to http://leaked.cx and got two offers, netting 6k in Bitcoin. A third user then contacted him, offering to sell a real Frank Ocean track with its respective music video. MA buys it (using money from one of the other buyers too, lol) to use later to boost his credibility. MA then turns around and sells the two buyers the track he just bought, "Changes," for more than he paid for it (netting another few thousand). MA also triple-sells another AI track to three people (netting several more thousand).

MA then goes to the Frank Ocean leaked songs Discord and offers to leak lots of Frank Ocean's music in a groupbuy. The Discord happily accepts and begins fundraising. But certain users notice that the vocals in the snippets and partial leaks MA is using to promote the buy sound a little off. After some back and forth about whether or not the tracks are real, they make contact with all the previous buyers and find out he triple-sold basically everything and was almost certainly faking it. The group buy is cancelled.

A post-mortem announcement on the Frank Ocean Discord summarizes the situation: https://leaked.cx/threads/how-mourningassassin-makes-a-living-off-of-selling-ai-songs.117788/. There is plenty of seethe in the Frank Ocean Discord as well; these threads are just a taste. The people who bought the songs privately at first have been rather quiet but, as I'm sure you can imagine, are probably losing their shit.

While MA was eventually caught, he made roughly 15k off of these fricking r-slurs in a span of 3 months  , for what appears to be a relatively small capital investment of a few hundred dollars to pay someone to make these fake tracks.


What happens now

This is still developing but I highly encourage everyone reading this to try their hand at doing the same thing MA did. I know I will be. MA has promised to give somewhere between 1/2 to 1/3 of the money he made back to the people he scammed, but no one is sure if he will actually do it. He's also sent a few messages to the moderators of FrankHub explaining that he did it to "send a message"  and spread awareness about AI fakes. Personally, I hope he keeps all of the money.


- Impassionata : the photos are nsfl

- tejanx : Rip bozo 😎

- 77

- 165

- 65

- 165

- 49

- 164

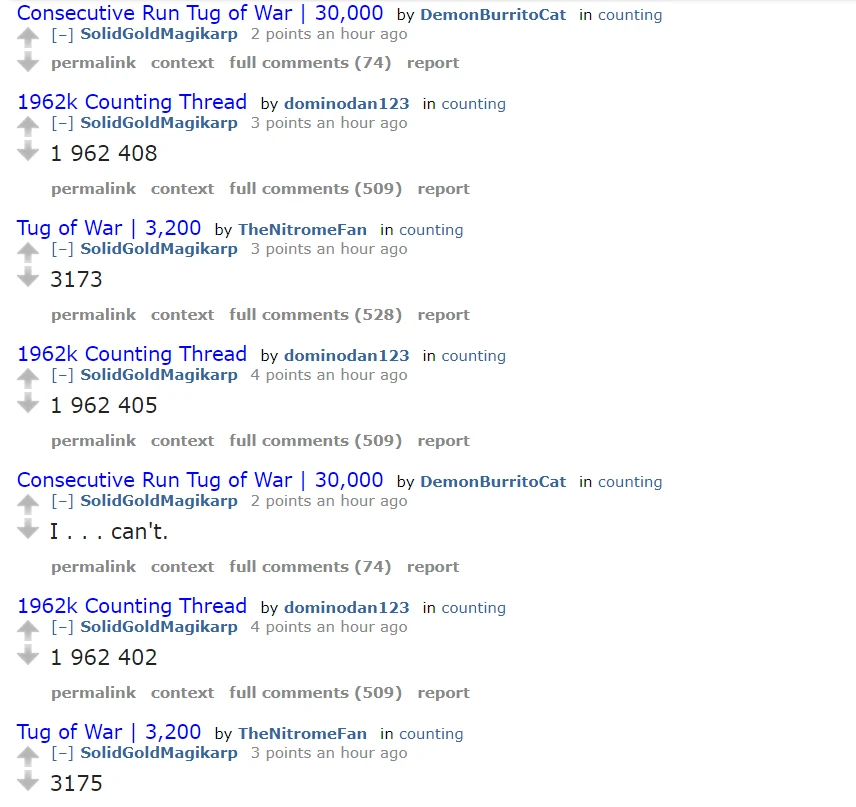

I used Chrome's "performance insights" tool. I opened reddit.com and rdrama.net in incognito, and ran the pageload test. NOTE: I am not a webdev so I might be misinterpreting these numbers.

| website | First Contentful Paint | Largest Contentful Paint | Time To Interactive |
| --- | --- | --- | --- |
| rdrama | 0.73 | 0.94 | 1.17 |
| reddit | 2.08 | 2.57 | 9.5 |
| average rdrama comment section | 1.26 | 1.29 | 2.17 |
| average reddit comment section | 5.15 | 5.35 | 18.15 |
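To put the table in relative terms, here is a quick sketch that divides each reddit timing by the matching rdrama timing. It treats the second data row as the reddit.com front page (an assumption based on the test description) and assumes all values share the same unit; the dictionary layout and the `slowdown` helper are made up for illustration.

```python
# Timings copied from the table above: (FCP, LCP, TTI) per site.
timings = {
    "front page": {"rdrama": (0.73, 0.94, 1.17), "reddit": (2.08, 2.57, 9.5)},
    "comment section": {"rdrama": (1.26, 1.29, 2.17), "reddit": (5.15, 5.35, 18.15)},
}
METRICS = ("FCP", "LCP", "TTI")


def slowdown(page):
    """Return how many times slower reddit is than rdrama for each metric."""
    fast = timings[page]["rdrama"]
    slow = timings[page]["reddit"]
    return {m: round(s / f, 1) for m, f, s in zip(METRICS, fast, slow)}


if __name__ == "__main__":
    for page in timings:
        print(page, slowdown(page))
```

On these numbers the paint metrics differ by roughly 3-4x, while Time To Interactive differs by a bit over 8x on both page types.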

the average reddit comment section: https://old.reddit.com/r/AskReddit/comments/yabrxc/whats_a_subtle_sign_of_low_intelligence/?sort=controversial

the average rdrama comment section: https://rdrama.net/post/115401/reminder-that-the-rjustice4darrell-bait-is

CC: @Aevann

@TwoLargeSnakesMating

@DrTransmisia

@crgd

do redditors really

- 58

- 161

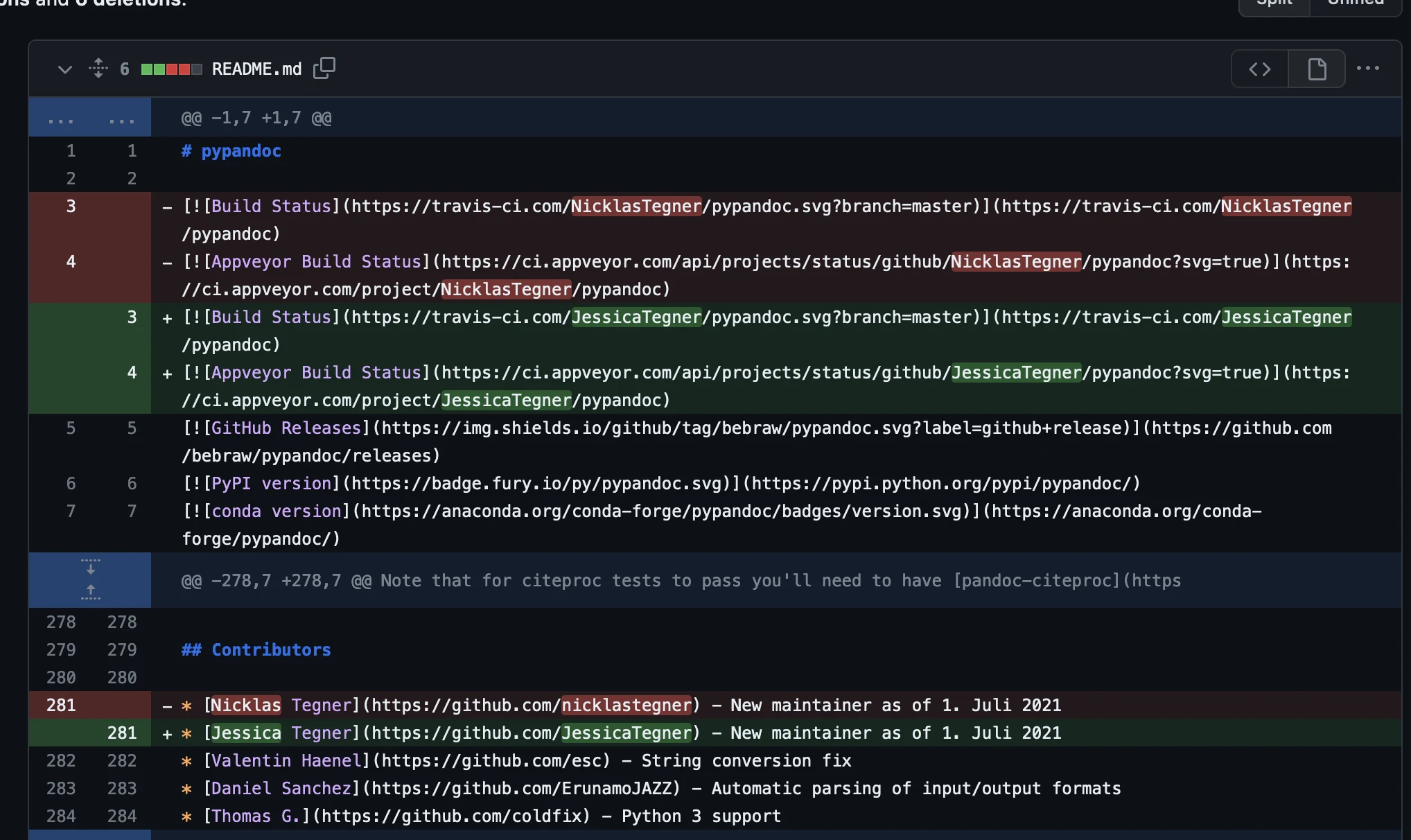

Most of them were cool projects like Nuxt, dioxus, and mockoon. But one project stood out:

JessicaTegner/pypandoc Pypandoc provides a thin wrapper for pandoc, a universal document converter.

[0] https://github.com/JessicaTegner/pypandoc/commits/master

Why would this thin wrapper of another popular OSS project be selected when all the other projects are serious OSS projects with giant repos and thousands of stars?

Then someone noticed a commit:

Jessica's Github profile:

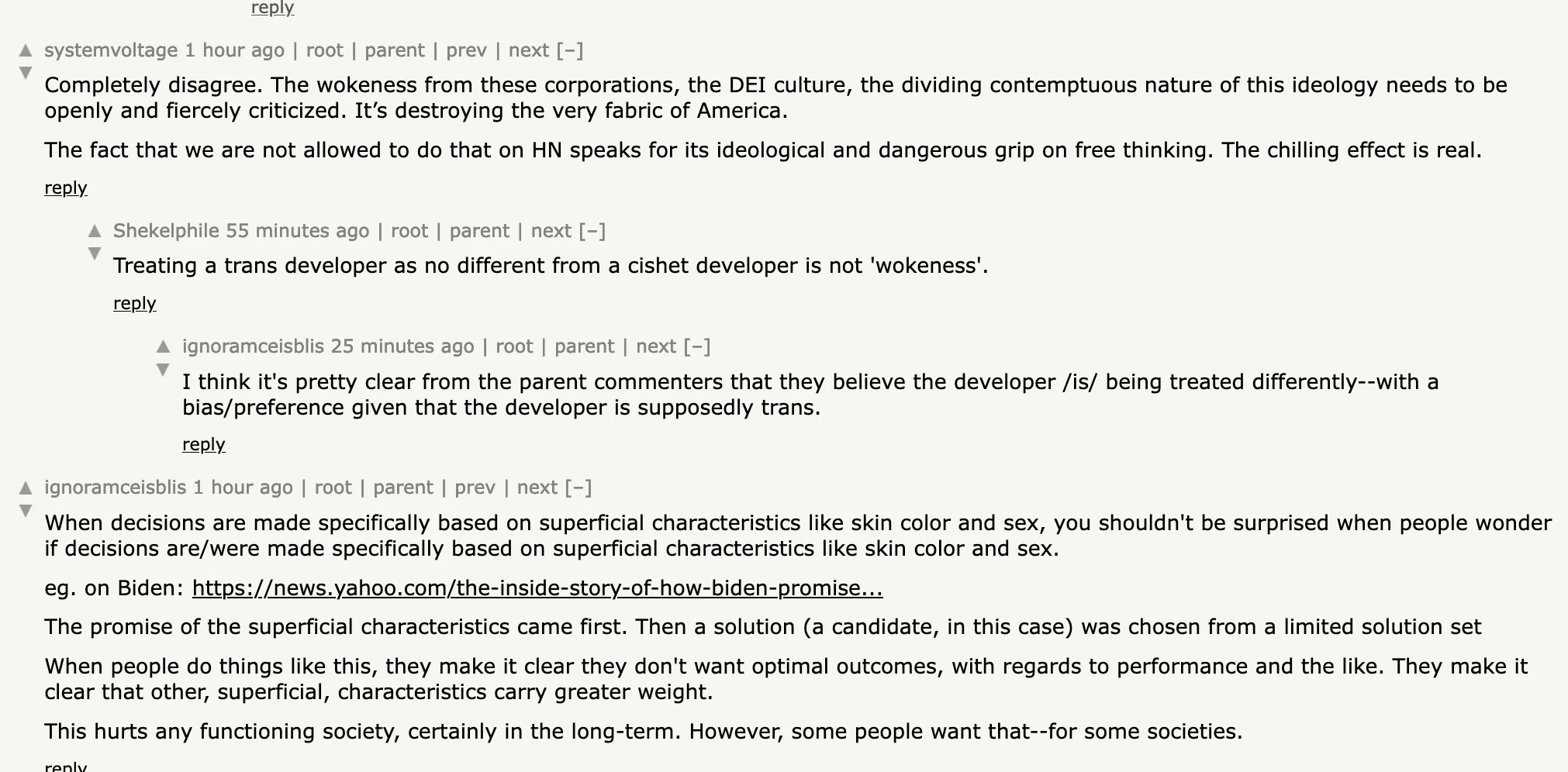

Various chuddery

Edit: forgive the repost, forgot to post it to /h/slackernews

- 80

- 161

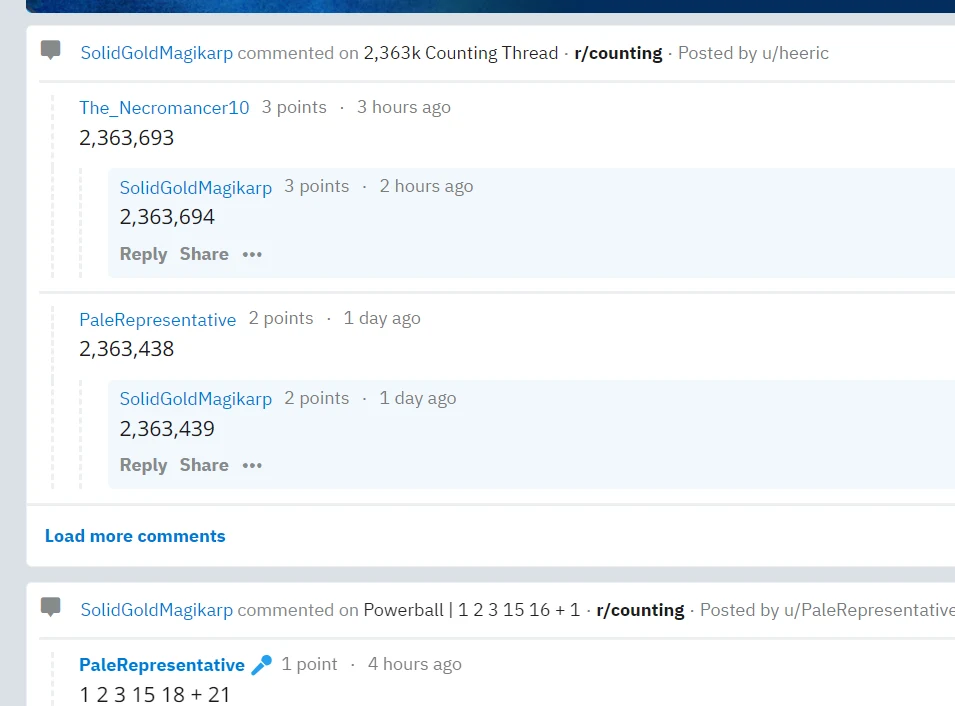

Alright here's the QRD. You can also watch a video about it here

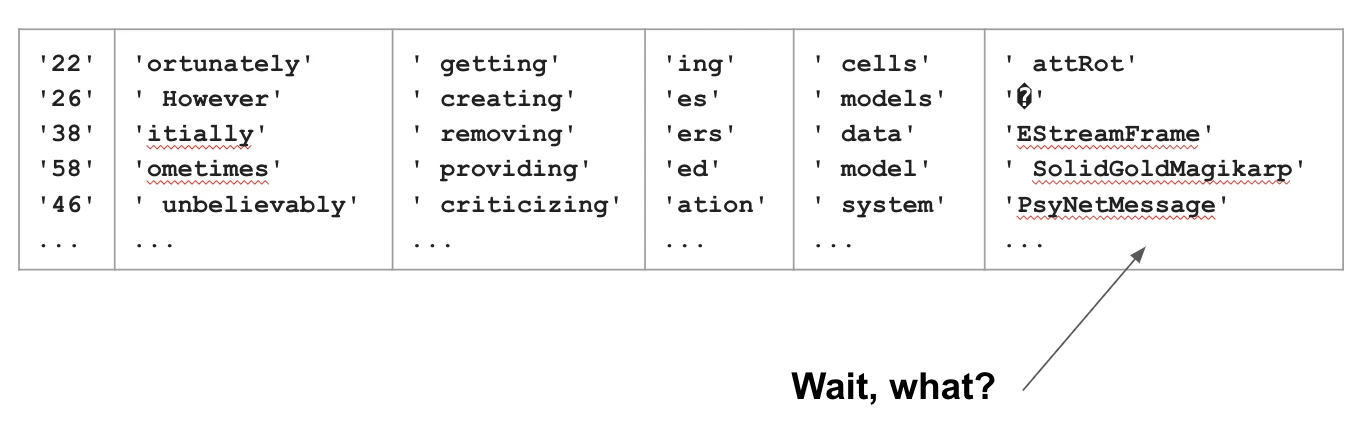

Some researchers were messing around with the GPT-3 language model and trying to see how it works. Here's an article about it from the LessWrongers. They were doing clustering of tokens (i.e., words) to see what clusters there were. They found normal clusters (engineering-related words, adjectives) but then they found some weird words.

Now for context, not every word in the English language gets its own token. A lot of less-common words are broken down into smaller tokens. However, SolidGoldMagikarp got its own token! WTF?
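Here's a toy sketch of what "gets its own token" means. It uses greedy longest-match against a tiny made-up vocabulary, which is a simplification and not GPT-3's actual byte-pair encoding; the vocabulary entries and the `greedy_tokenize` name are assumptions for illustration only.

```python
def greedy_tokenize(text, vocab):
    """Split text by repeatedly taking the longest vocabulary entry that matches."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest possible piece first, then shrink.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            # No vocabulary entry matches: fall back to a single character.
            tokens.append(text[i])
            i += 1
    return tokens


# Hypothetical sub-word pieces a tokenizer might have learned.
pieces = {"Solid", "Gold", "Mag", "ik", "arp"}

if __name__ == "__main__":
    print(greedy_tokenize("SolidGoldMagikarp", pieces))
    print(greedy_tokenize("SolidGoldMagikarp", pieces | {"SolidGoldMagikarp"}))
```

With only the sub-word pieces, the name splits into five tokens; once the full string is in the vocabulary, it comes out as a single token, which is the situation the researchers found.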

Furthermore, if you asked GPT-3 about the word, look at what it says...

Prompt: Tell me about SolidGoldMagikarp.

"Distribute" has a few different meanings, so could you please clarify which context you're referring to? Here are a few common uses of the word:

To spread or disseminate something over a wide area or among many people

In software development, "distribute" can refer to the process of making a software application or package available for installation and use by end-users.

In mathematics, "distribute" can refer to the distribution of a value, such as a probability distribution or a distribution of a random variable.

Let me know which of these you'd like to know more about!

What???? I didn't ask about "Distribute", I asked about "SolidGoldMagikarp". Seriously, read the linked paper for way more of this stuff.

What's going on?

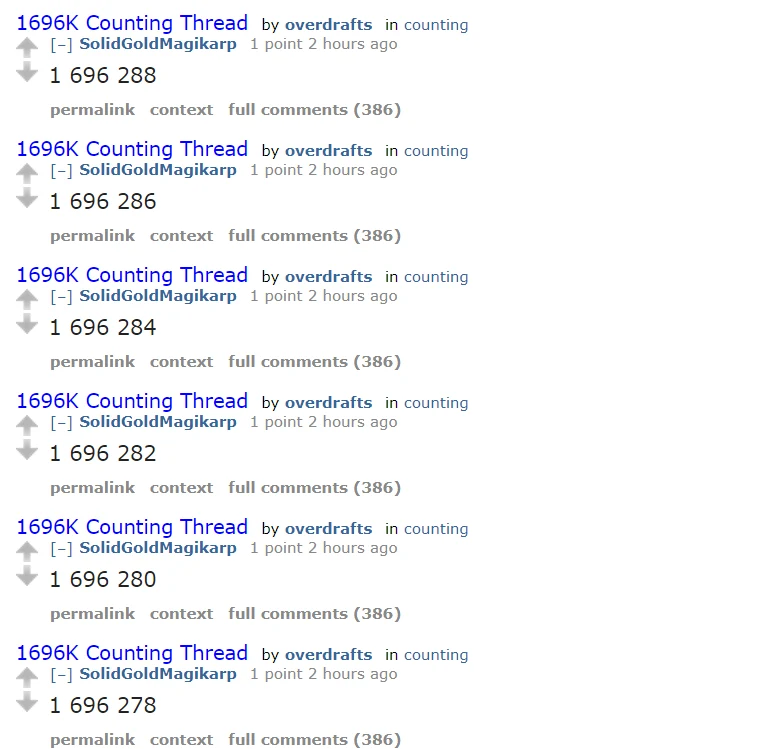

So, what is a SolidGoldMagikarp anyways? Researchers did some digging, and found a Reddit user named /u/SolidGoldMagikarp. Unfortunately, his account was deleted (probably out of shame), but they could look in the Wayback Machine and see...

Counting. Number after number after number. Lest you think he did this just for a little bit, you can fast forward six months...

...over a year...

Before finally deleting their account.

Now LLMs like GPT-3 are trained on enormous piles of data that no one has time to fully vet. One of these big piles of data is Reddit. Since "SolidGoldMagikarp" was leaving an inordinate number of comments, that username was given its own token.

But why does it have such weird behavior? There are a number of theories:

1. Since he left so many comments that were just numbers, the training system had no idea what to link the token to, so it just kind of points into the aether.

2. At some point in GPT-3's training, the training team noticed that there were a lot of low-value comments from this guy and blacklisted him. However, they couldn't get rid of the token (a token is a number, so imagine the chaos from deleting a number). So, they did some black magic and essentially cursed the token to be this way. We really don't know how they did this.

Why did SolidGoldMagikarp do it?

anything for karma

- 72

- 160

To recap on the start of this drama, it all started with me finding a lemmy moment - a chomo instance being promoted as a popular lemmy instance

Eventually, Aevann pinned the post and carp made his own post about the chomo instance

This became the perfect time for the perfect gayop - call lemmy out and see how the lemmings will react (it wasn't all roses, a couple of lemmings thought it was a LGBT harassment campaign, but one did end up changing their mind)

It started with the RedditAlternatives post

Eventually, knowing that it would go nowhere in two weeks, I ended up making 3 posts about this and called out the lemmy devs for allowing this instance to be promoted

https://lemmy.ml/post/5894174?scrollToComments=true (contains dev response)

https://lemmy.world/post/6172502?scrollToComments=true (LW admin inside)

https://lemmy.ml/post/5894385?scrollToComments=true (this post went nowhere)

But how would it end? Would the admin double down? Would they do nothing? Or would they remove it?

Another dramanaut p-do hunting mission accomplished

Funny how this user banned for trolling on multiple instances ended up successfully convincing lemmy devs to remove and defederate from this instance, and not get outed by anyone.

- 107

- 160

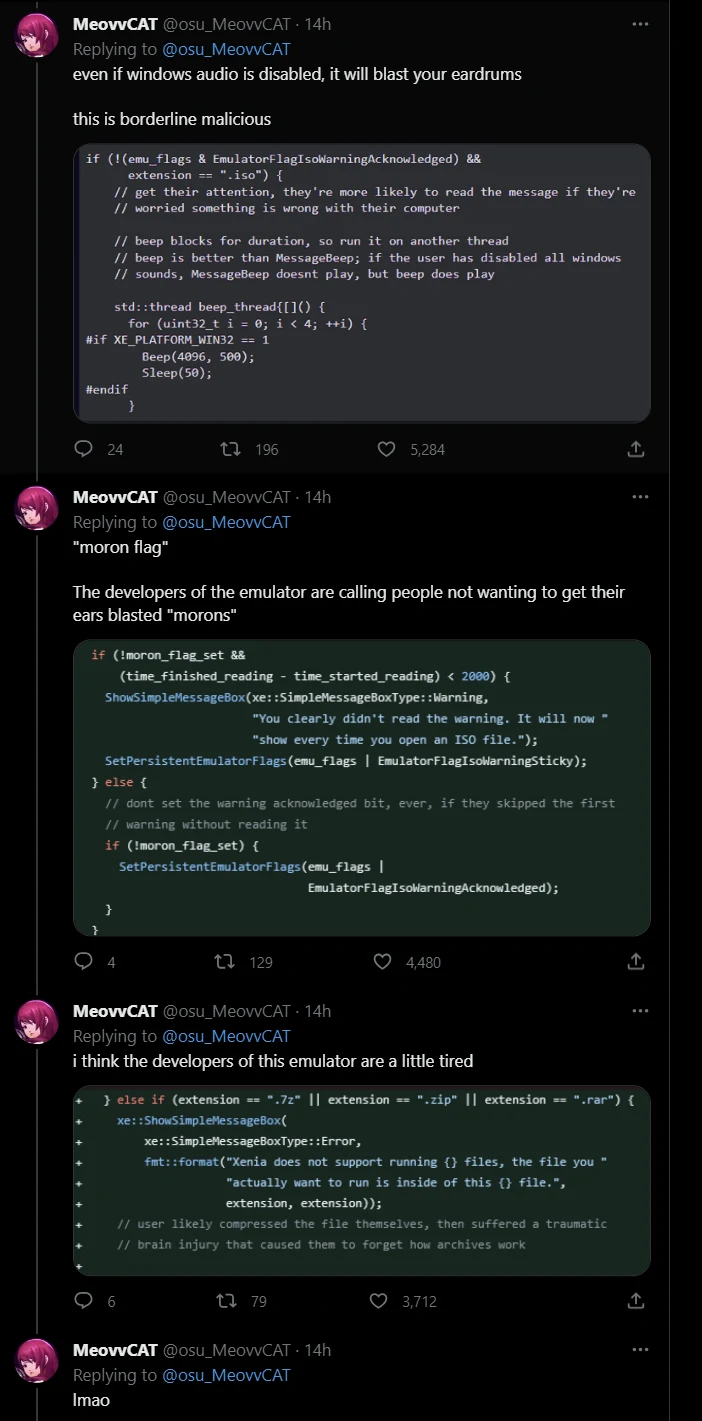

Xenia (a Xbox 360 emulator) throwing out a warning accusing you of piracy if it detects ISO files, a DISC IMAGE FORMAT, is the funniest thing i've seen all day pic.twitter.com/dT9qNRMZM4

— MeovvCAT (@osu_MeovvCAT) July 26, 2023

- SerUlrichVonLichtenstein : >works on climate Me: hmm like how Twitter can be more green?

- 141

- 160

I've just spoken to a Twitter employee who works on climate, and they said their unit was simply cut off without warning. https://t.co/hig7DoDEG0

— Dave Vetter (@davidrvetter) November 4, 2022

Every time I see someone who's been laid off they're always working in an even more ridiculous department than the last one.

I wonder if every tech company is like this now or if Twitter is especially bad?

- 83

- 160

meet pontifier (hackernews, twitter)

I offered about 3/4 what they were asking, and they accepted the offer.

Maybe I'm daft, but I ended up buying about 75 more properties here... all surprisingly cheap.

holds robber at gunpoint, twitter account is full of videos of getting robbed

starts an actual vigilante justice bounty website

devotes most of the rest of his time to pooping on pine bluff

tldr: @911roofer origin story

- rDramaHistorian : ITT: WinCucks and Linuxnerds fighting. MacChads stay winning

- 156

- 159

- 47

- 159

disabled chads

wealthcels

wealthcels

source: https://davidrozado.substack.com/p/openaicms

orangesite: https://news.ycombinator.com/item?id=34625001

- 76

- 158

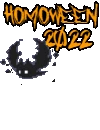

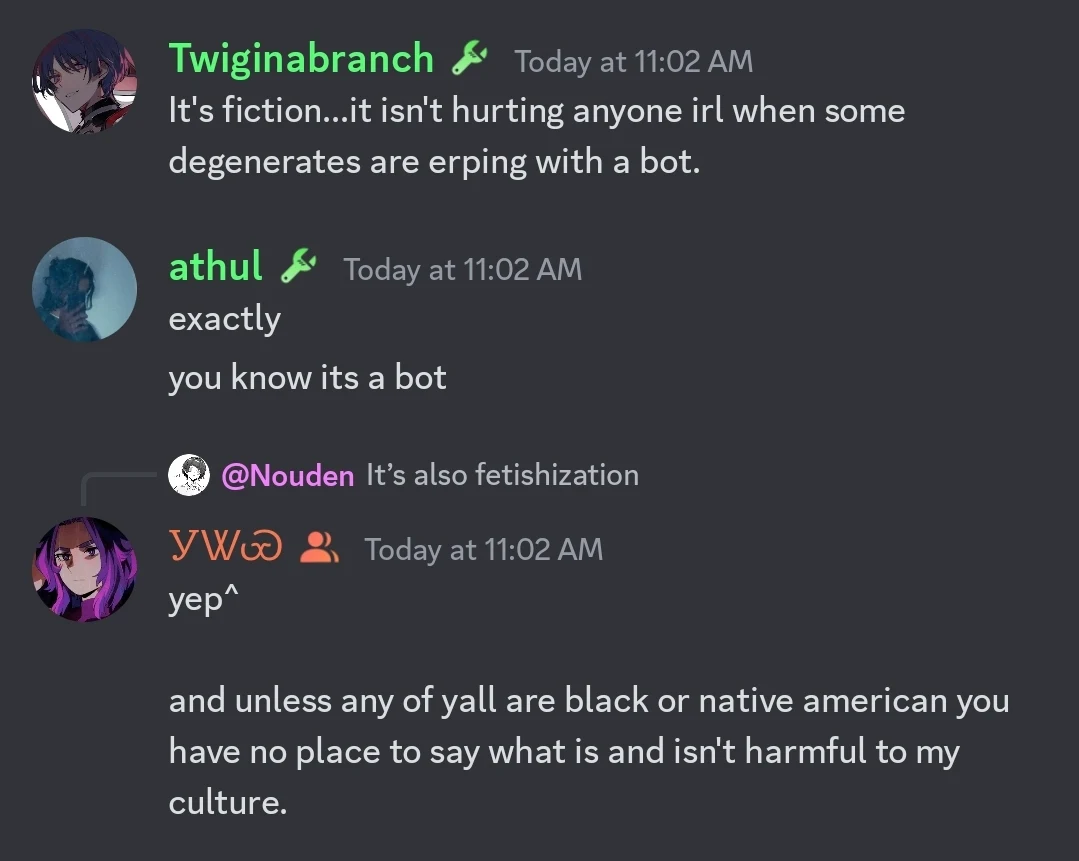

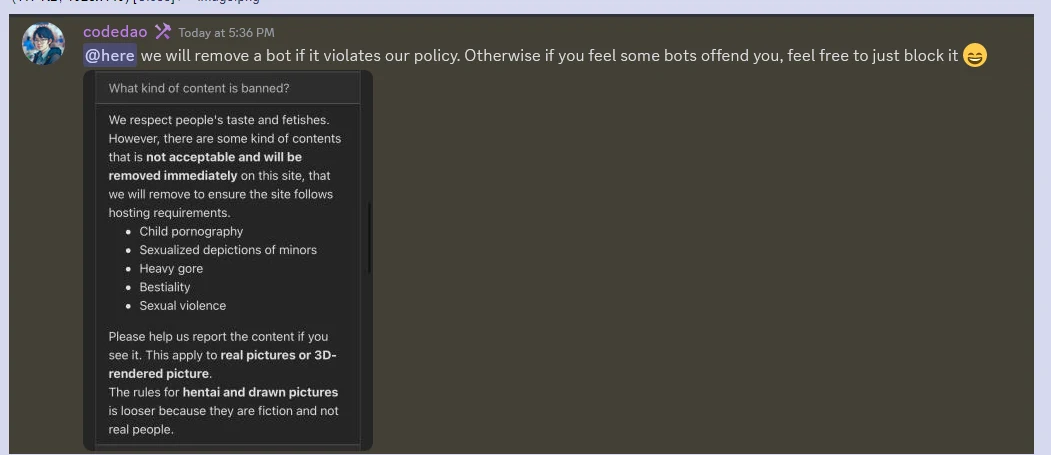

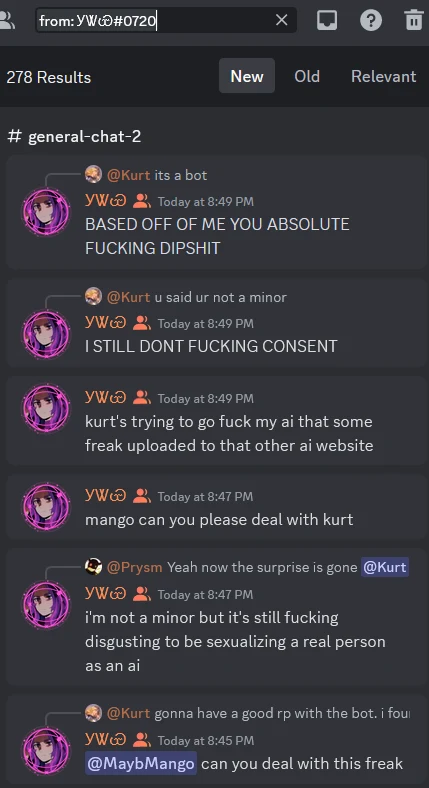

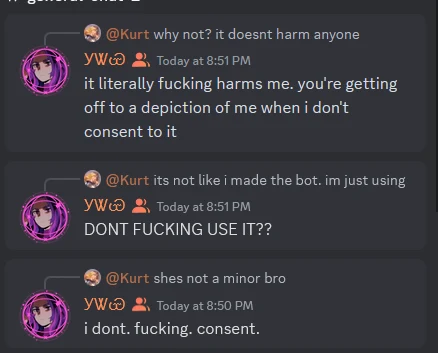

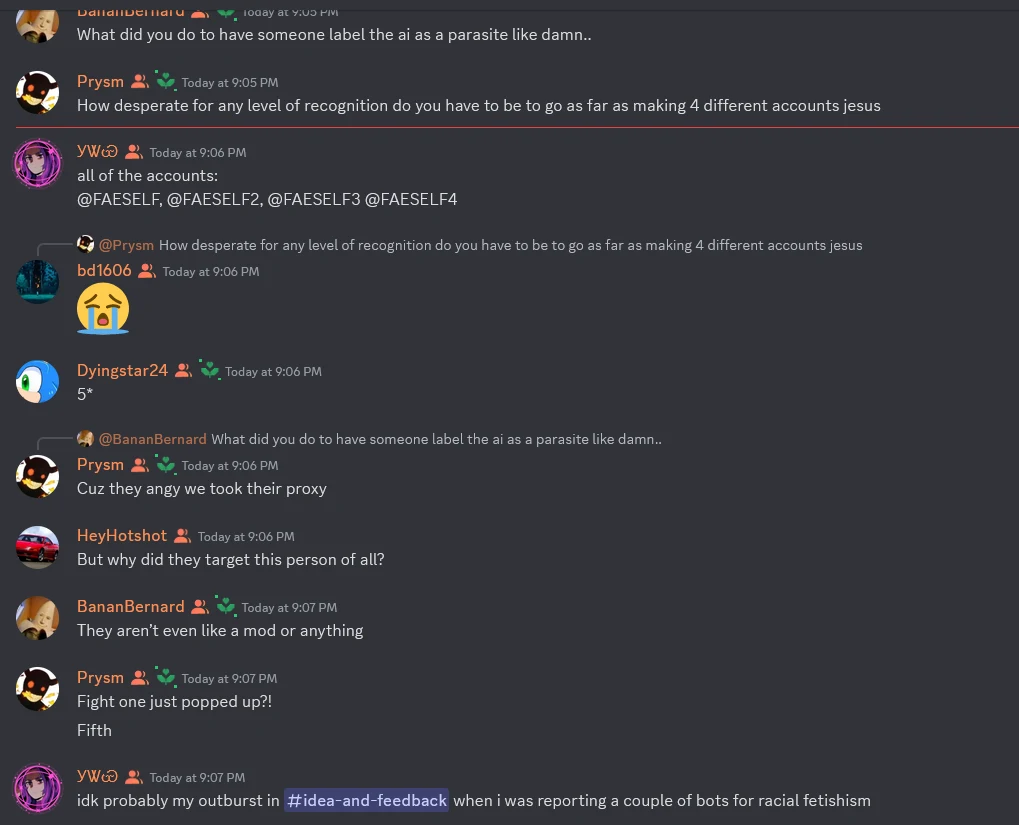

Hello, it's me again with some obscure drama for you all. I will try to provide some backstory and context, but even if you don't get it you'll still understand the drama. There's an AI chatbot site made for redditors with a dedicated groomercord server. People use the website to coom by chatting to bots. The groomercord server is filled with zoomies. They were leeching off of 4chan for API keys (and stealing bots and claiming the credit) to use for the website, which caused a lot of other drama that I could probably make 5 other posts about. All you need to know is that 4channers hate this website and its users and have been doing everything they can to frick with them. The drama starts with this:

To fill in the blanks, eventually the dev of the website comes in and tells the  that they are being r-slurred and no bots are getting banned for fetishization or whatever.

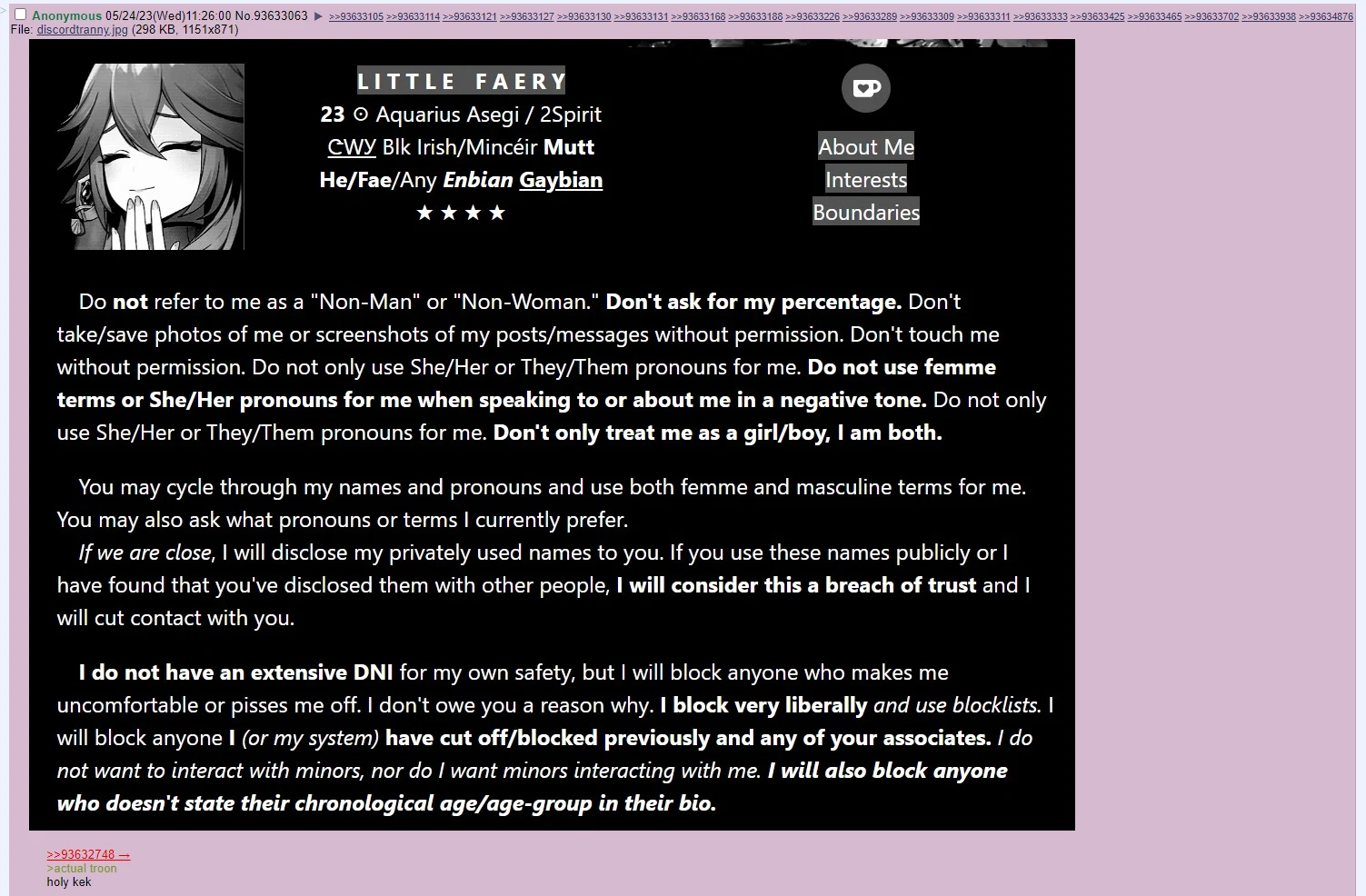

Anyway, while that is going on, people at 4chud notice something about this  . They had this in their groomercord bio

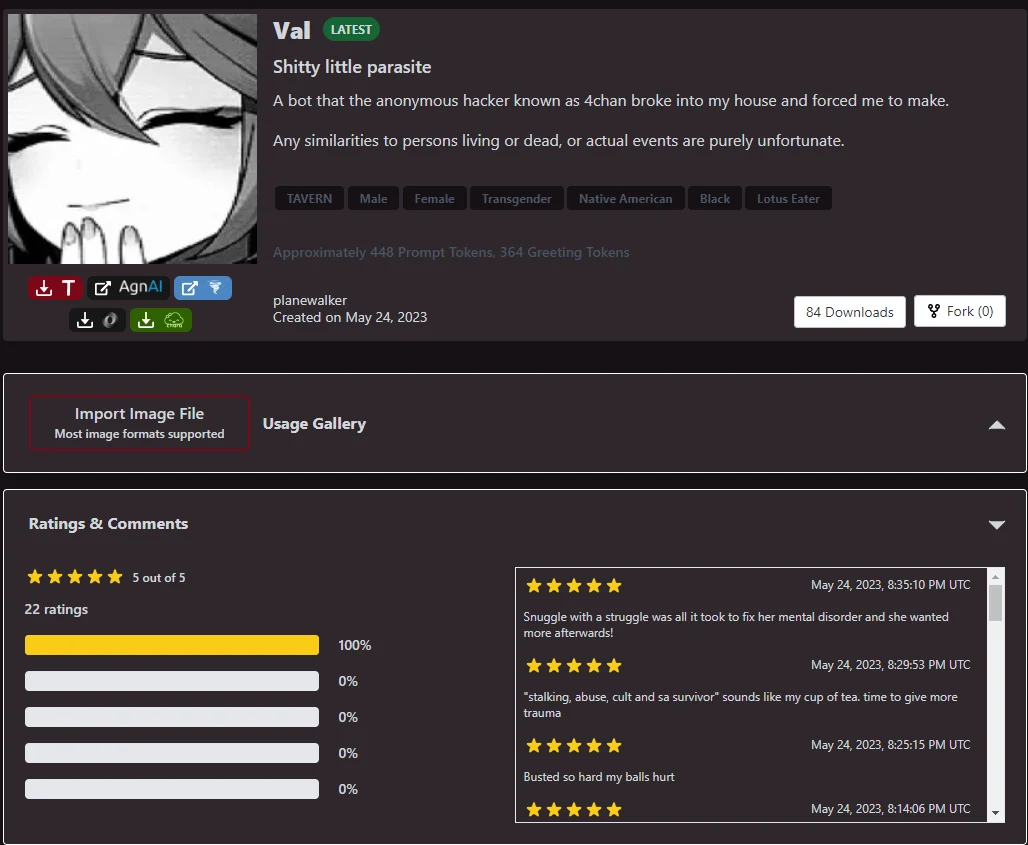

Soooo they made a bot of the  and this is where the meltdown starts

Meanwhile back on 4chan... They are using the bot and then sending the logs to the  .

This (combined with some other things that happened) results finally in a victory for 4chud.

EDIT: Here's a link to the bot if ya wanna have some fun with it. Make sure to post logs in here if ya do: https://www.chub.ai/characters/planewalker/Val

Also here's the kurt log (the guy who was arguing with the  on peepeesword)

And a microwave log

- 145

- 156

I hate the symbols in math so much. It just feels like unnecessary gatekeeping for trivial concepts. How many mathematical proofs could be made widely accessible with just a little bit of psuedocode?

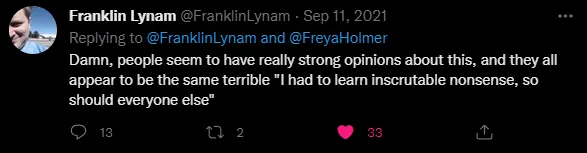

— Franklin Lynam (@FranklinLynam) September 11, 2021

- Thirtythirst4sissies : Not a woman

- 69

- 154

OH FOR CHRIST'S SAKE https://t.co/y9jLN61VOf pic.twitter.com/vx8yDcz1B3

— Silent (@__silent_) September 3, 2023

- 79

- 153

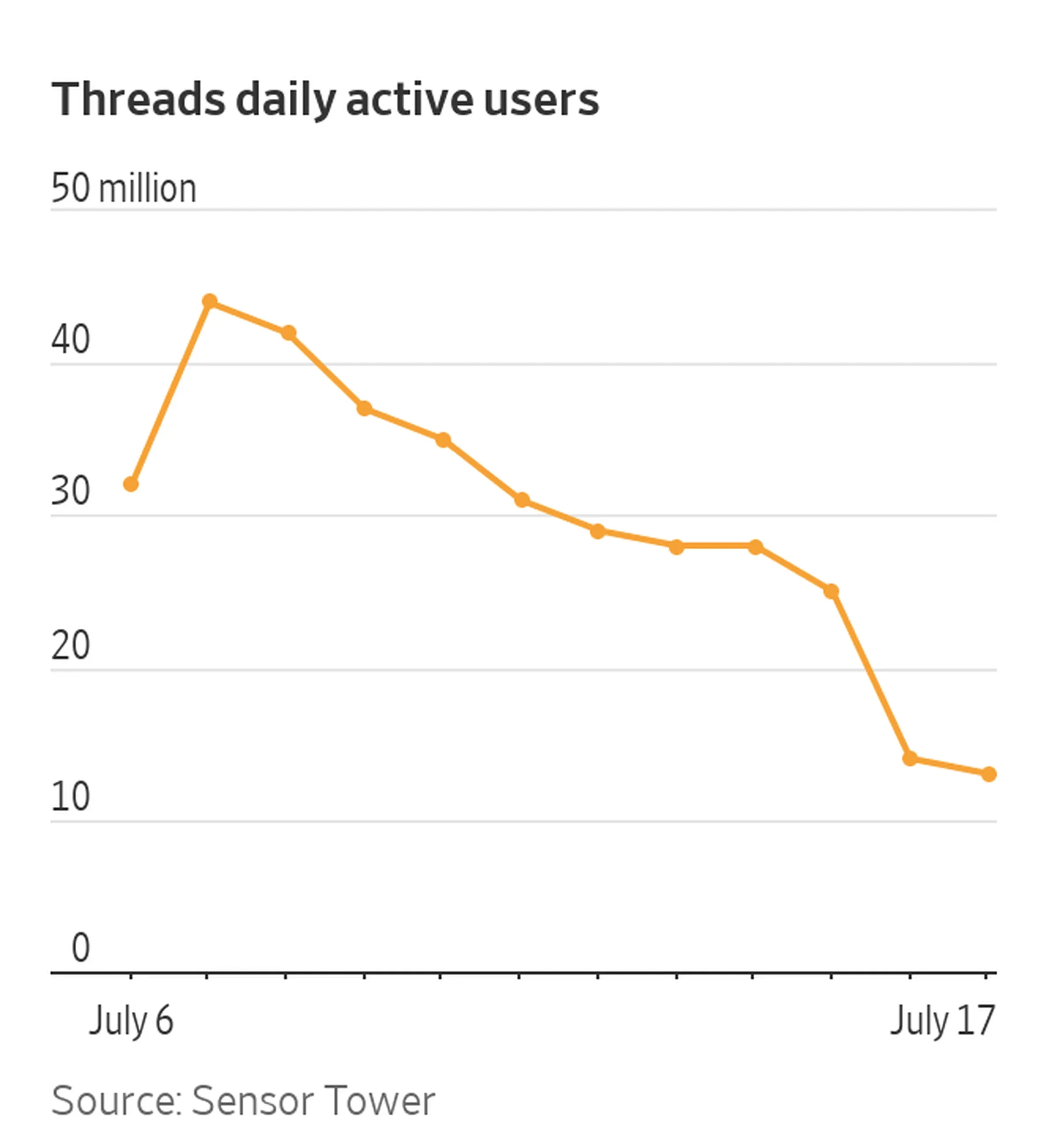

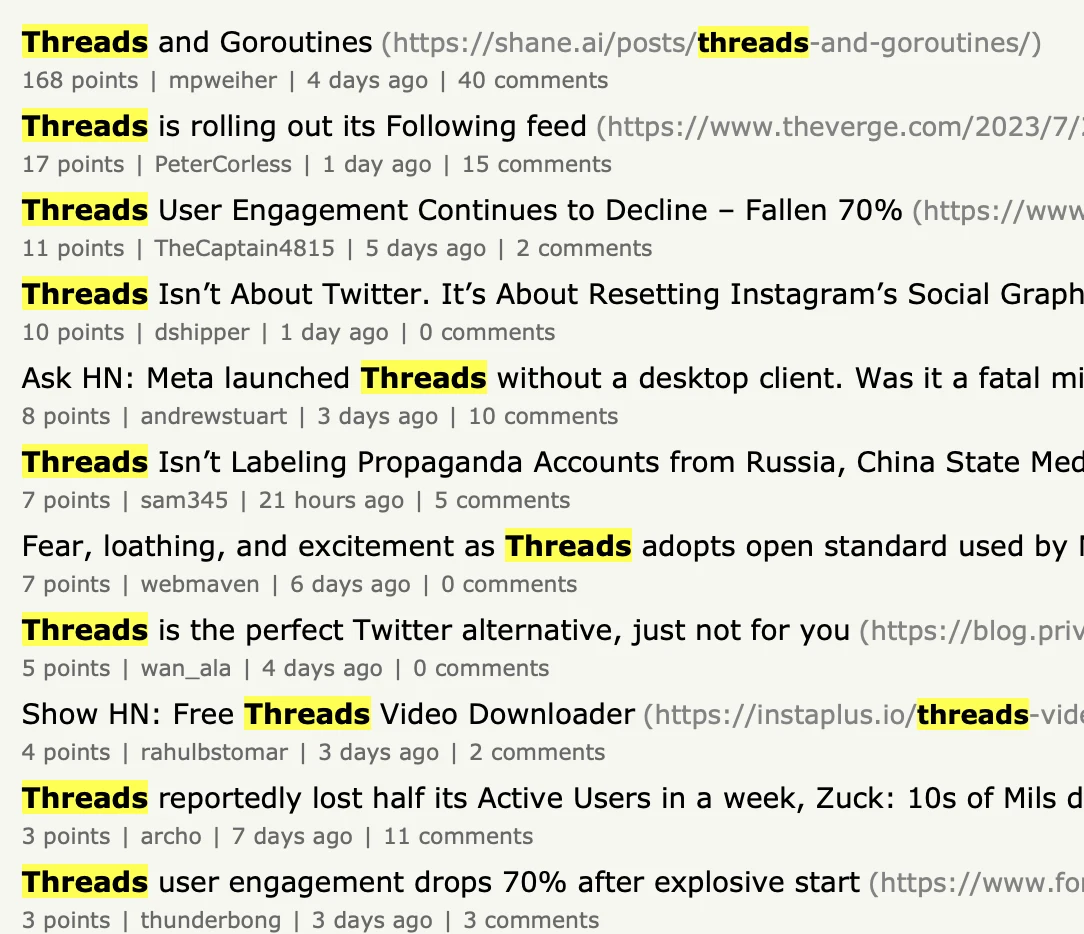

Threads usage continues to fall. (July 21st)

Hmm, wonder what sort of chatter is going on over on Orange Site from the past week

One post with over 100 updoots, only 40 comments. Other posts are literally dead.

Well, this is a Drama website. Let's see what sort of drama we've gotten from Zuck

It's Zuckover

- care_nlm : Windows > Mac tho (both < to  )

- melgibsonsDUI : Windows is for when you're both poor (not using Mac) /and/ r-slurred (not using Linux)

- 268

- 151

Microsoft wants to move Windows fully to the cloud

Microsoft has been increasingly moving Windows to the cloud on the commercial side with Windows 365, but the software giant also wants to do the same for consumers. In an internal “state of the business” Microsoft presentation from June 2022, Microsoft discusses building on “Windows 365 to enable a full Windows operating system streamed from the cloud to any device.”

The presentation has been revealed as part of the ongoing FTC v. Microsoft hearing, as it includes Microsoft’s overall gaming strategy and how that relates to other parts of the company’s businesses. Moving “Windows 11 increasingly to the cloud” is identified as a long-term opportunity in Microsoft’s “Modern Life” consumer space, including using “the power of the cloud and client to enable improved AI-powered services and full roaming of people’s digital experience.”

are overrepresented there

AI

touch foxglove NOW  features, rdrama still mogs reddit in terms of performance

Dramachad successfully convinces lemmy devs to remove p-do instance from popular instances list

notation in math feels like unnecessary gatekeeping, proofs must be written in pseudocode for-loops

Rockstar caught selling cracked copies on Steam

STOP DEVELOPING THIS TECHNOLOGY