There is a lot of enthusiasm about generating marseys using Stable Diffusion. Recently @float-trip did something that just a few months ago I predicted would take five years - make a marsey generating bot (to be fair my point was about the long-term costs involved in running a bot on a node that has the capabilities to do so). Anywho, there has been a flood of new marseys that have been submitted, prompting that rat bastard carp to tell you all to knock it off (AI-chads are the most oppressed minority on earth). BUT some AI marseys are really good and cute, so how can you make good marseys using AI?

SETUP

Alright so this guide is only for non-poorcels that have a decent GPU. By decent GPU, I mean NVIDIA. (I recently upgraded my GPU from an AMD RX570, and I tried my absolute hardest to make it work on my AMD but I couldn't get it. There are """guides""" online but there are a lot of implicit assumptions about what type of GPU you are using, it has to have so much VRAM and it has to be a certain series and the documentation is a FUCKING nightmare (@DrTransmisia can relate))

(Also, I am running it locally because I want to generate tons of variations in the background while I am wageslaving. You can run it via the cloud too if you want tho)

You will need:

A PC with a GPU with a decent amount of VRAM (10+ GB is best, but the requirements keep going down as CS-chads continue refining the model. nowadays you can technically run it with 4GB with certain versions of the code but YMMV because i haven't done that yet)

Python 3.10.6 (You should add it to PATH)

git (technically optional but it will make your life easier)

@float-trip's model. float trip is the real MVP here btw, he has been trying several different things to get this project to work with tons of compute.

mad respect my nigga
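If you want a quick sanity check of the list above before going further, here is a minimal sketch. It only covers the Python version and whether git is on PATH; it does not check VRAM, and the function name `check_prerequisites` is made up for illustration.

```python
import shutil
import sys


def check_prerequisites(required=(3, 10)):
    """Return simple yes/no checks for the Python and git items above.

    Assumption: matching the major.minor version (3.10) is close enough
    to the 3.10.6 recommended in the list.
    """
    return {
        "python_3_10": tuple(sys.version_info[:2]) == tuple(required),
        "git_on_path": shutil.which("git") is not None,
    }


for name, ok in check_prerequisites().items():
    print(f"{name}: {'ok' if ok else 'missing'}")
```

If `git_on_path` comes back missing, either install git or fall back to the zip download route.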


Get AUTOMATIC1111's webui repo. This is the best repo out there for this kinda stuff, partially because it was made by 4chan autists who were trying to generate waifus. If you have git, you can just open up a command prompt and type

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

Otherwise, I think there are redneck ways to get the code as a zip file but that's a pain.

Place float-trip's model in the /models directory. Rename the model to "model.ckpt"

Double click webui-user.bat in the main directory.

Wait a few minutes for everything to load, until the text stops moving and it tells you to open a weblink.

Open your web browser and go to "localhost:7860". Voilà! You should see the webui

GENERATING MARSEYS - GENERAL TIPS

If you have done everything correctly, and you type the word "marsey" into the prompt, you should see a marsey appear. If not, you may see something related to the planet mars. If that happens, make sure you have the correct model that I linked above. Also try restarting your computer, that helps for some reason.

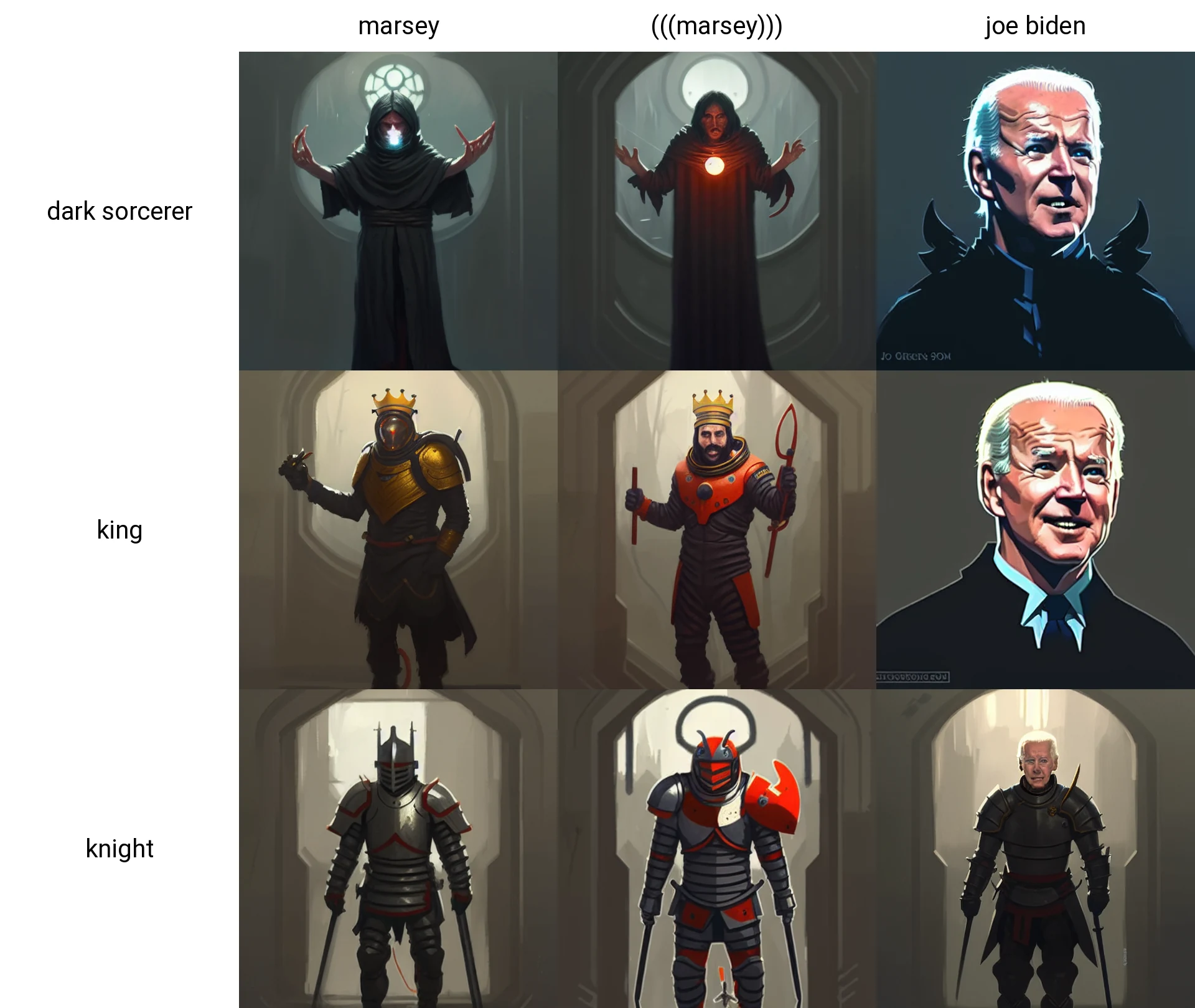

With most words, longer descriptions are better. However, with "marsey" I have noticed that sometimes the AI "loses the plot", so to speak. For instance, if I type "joe biden as a dark wizard, very detailed, greg rutkowski, award winning, trending on artstation", I will get a badass picture of DVRK BIDVN. However, if I do "marsey as a dark wizard, very detailed, greg rutkowski, award winning, trending on artstation", the picture generally won't be of DVRK MVRSEY. I think that's because CLIP may be confused about what "marsey" actually is - is she a cat, a person, a style, or an idea? Who knows??? Anyways, all that to say that shorter is generally better.

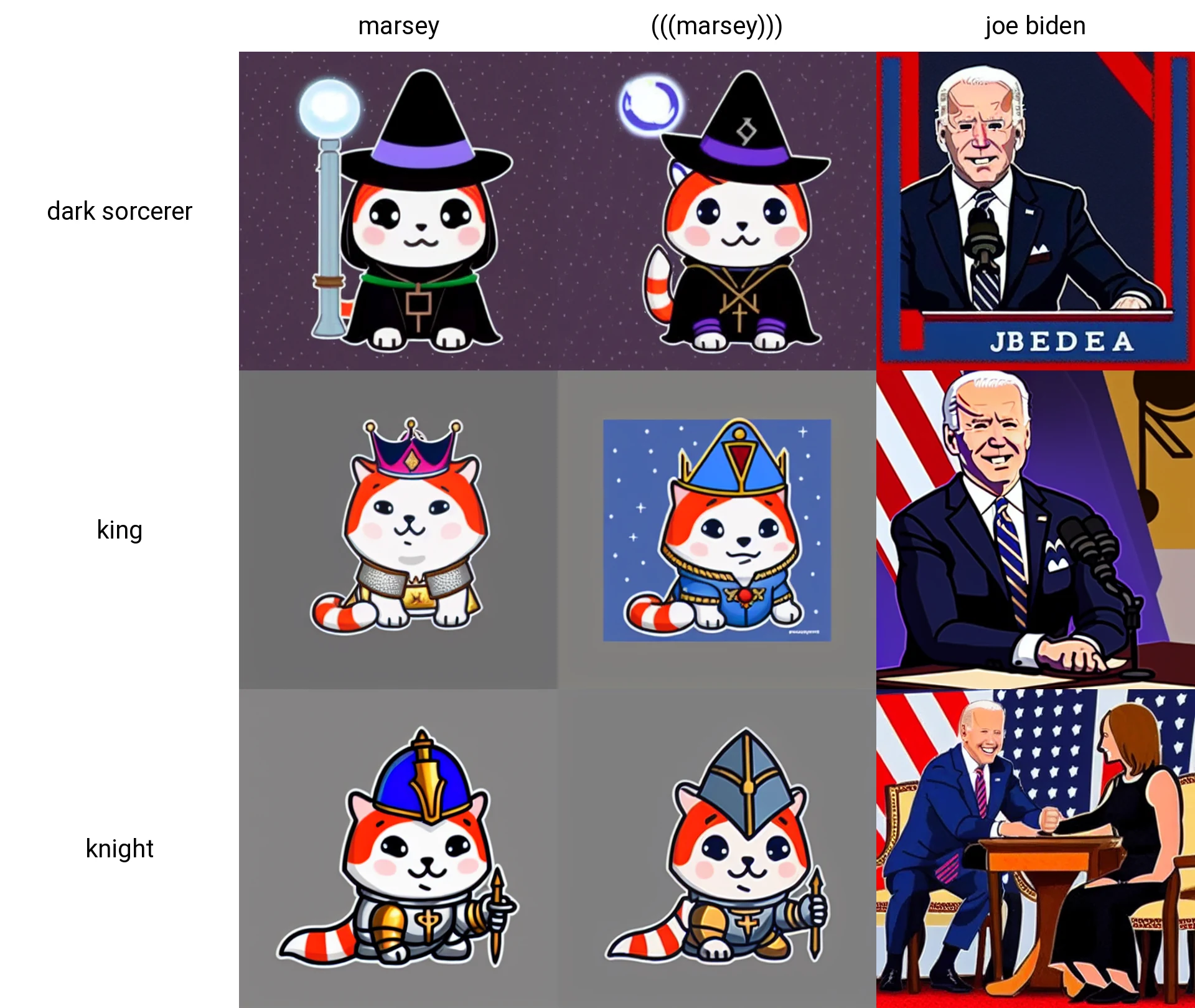

stylized using a greg rutkowski type prompt. notice that marsey doesn't really resemble marsey

no stylization

Also, remember that you can wrap words and phrases in (((triple parentheses))) when you want the AI to pay closer attention to them. No, that's not a joke, that is literally a part of the code. For instance, if you are finding that "marsey as a knight" is generating pictures of human knights with the wrong style, try "(((marsey))) as a knight".

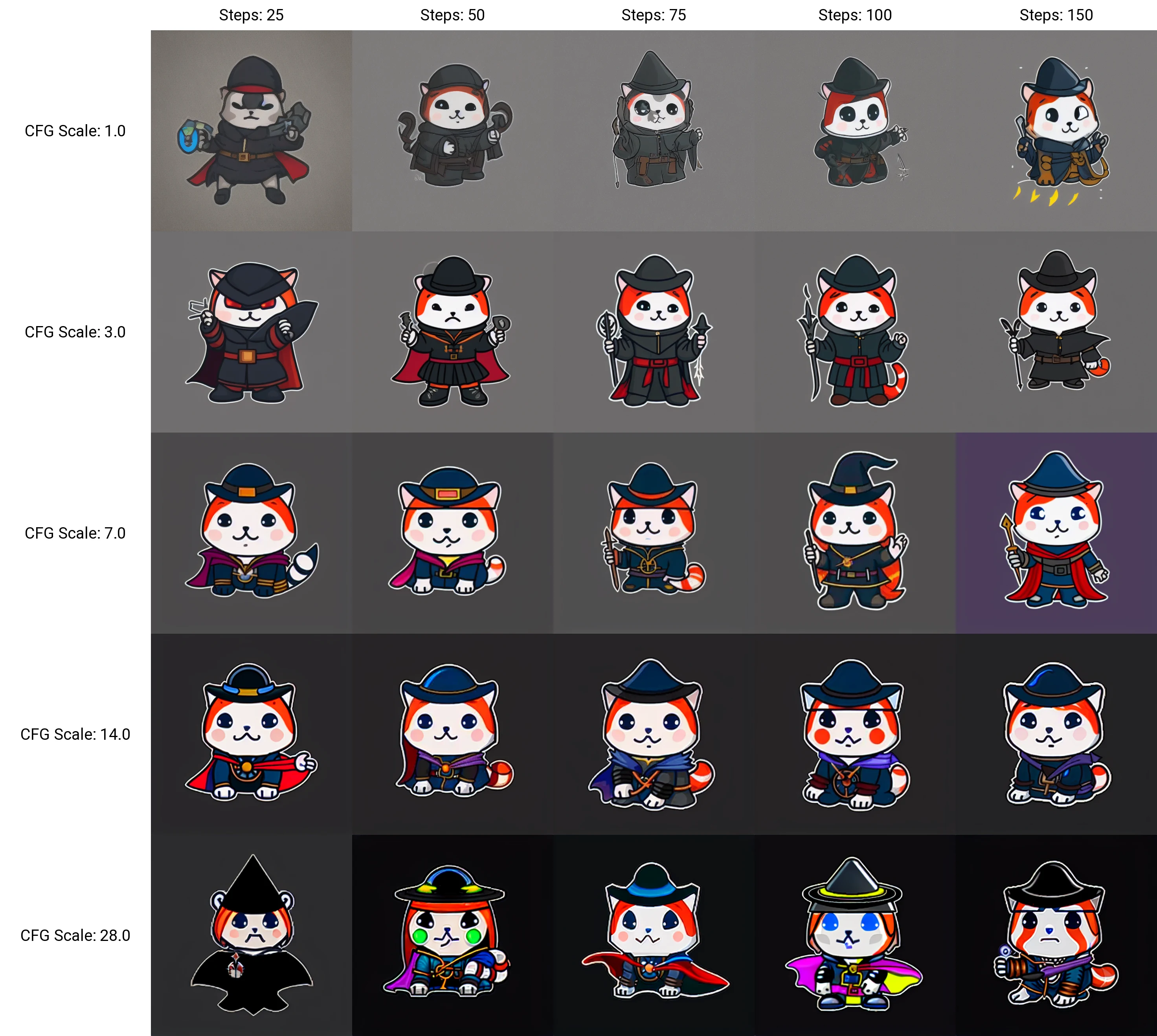
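For a rough feel of how much the parentheses matter, here is a minimal sketch. It assumes the behavior the web UI documented at the time, where each pair of parentheses multiplies a word's attention by 1.1; the function `emphasis_weight` is made up for illustration and is not part of the web UI.

```python
def emphasis_weight(token: str, per_level: float = 1.1) -> float:
    """Estimate the attention multiplier for a token like "(((marsey)))".

    Assumption: every matched pair of surrounding parentheses multiplies
    attention by `per_level` (1.1, as far as I know).
    """
    depth = 0
    # Peel off one matched pair of parentheses at a time.
    while len(token) >= 2 and token[0] == "(" and token[-1] == ")":
        token = token[1:-1]
        depth += 1
    return per_level ** depth


for example in ["marsey", "(marsey)", "(((marsey)))"]:
    print(example, round(emphasis_weight(example), 3))
```

Under that assumption, triple parentheses work out to roughly a 1.33x boost, which is why they are usually enough to drag the output back toward marsey.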

With most prompts, if you want to get high-quality outputs you should set sampling steps to 150 (otherwise the output will be blurry). However, when generating marseys, I have found that 50 sampling steps is enough to get good marseys. I say this because the time needed per image increases linearly with the number of sampling steps, so imo it is better to spend that time generating more variations of marseys than a few with marginally higher quality.
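To put numbers on that tradeoff, here is a small sketch of the arithmetic. The 100 ms per step figure is purely hypothetical (time it on your own GPU), and it assumes time per image is exactly linear in the step count.

```python
def images_in_budget(budget_ms: int, ms_per_step: int, steps: int) -> int:
    """How many images fit in a time budget if time per image is linear in steps."""
    return budget_ms // (ms_per_step * steps)


ONE_HOUR_MS = 60 * 60 * 1000
MS_PER_STEP = 100  # hypothetical speed, not a measurement

for steps in (50, 150):
    count = images_in_budget(ONE_HOUR_MS, MS_PER_STEP, steps)
    print(f"{steps} steps -> {count} images per hour")
```

Whatever your actual speed is, dropping from 150 steps to 50 gets you about three times as many marseys to pick from in the same time.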

Another thing to keep in mind is CFG scale. CFG is "Classifier-Free Guidance". Basically, the higher this number is, the more closely the output will be guided by the prompt. 7, the default, works fine for me.

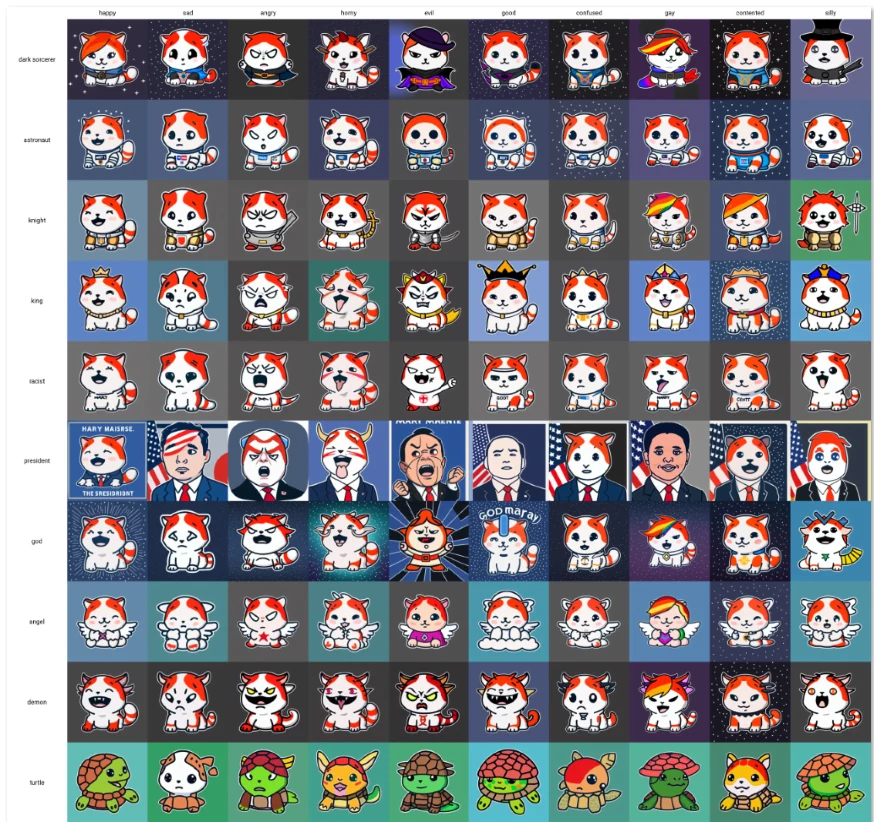

comparison of CFG scales and step count for the prompt "(((marsey))) as a dark sorcerer"
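If you are wondering what the CFG number actually does, here is a toy sketch of the standard classifier-free guidance formula. The lists stand in for the model's noise predictions with and without the prompt; the function name and the numbers are made up for illustration, and the real thing operates on big tensors, not three floats.

```python
from typing import List, Sequence


def apply_cfg(uncond: Sequence[float], cond: Sequence[float], scale: float) -> List[float]:
    """Blend the unconditioned and prompt-conditioned predictions.

    scale = 0 ignores the prompt, scale = 1 uses the prompted prediction
    as-is, and larger scales push further in the prompt's direction.
    """
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0, 2.0]  # toy "no prompt" prediction
cond = [1.0, 1.0, 0.0]    # toy "with prompt" prediction

for scale in (0.0, 1.0, 7.0):
    print(scale, apply_cfg(uncond, cond, scale))
```

Higher scales exaggerate the difference the prompt makes, which is why very high values tend to look overcooked.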

I like to generate marseys in batches of 100. By default, the UI only lets you do batches of 16. To increase this, just open ui-config.json, and set "txt2img/Batch count/maximum" to 100.
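If you would rather not edit the file by hand, the same change can be sketched in a few lines. The key name is the one quoted above; the helper name `raise_batch_count_limit` is made up, and I am assuming ui-config.json is a flat JSON object and that you run this with the web UI closed.

```python
import json
from pathlib import Path


def raise_batch_count_limit(config_path: str, new_max: int = 100) -> dict:
    """Set the txt2img batch count ceiling in ui-config.json and save it."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    config["txt2img/Batch count/maximum"] = new_max
    path.write_text(json.dumps(config, indent=4), encoding="utf-8")
    return config


# Example (run from the webui folder):
# raise_batch_count_limit("ui-config.json")
```

Make a backup copy of ui-config.json first if you have customized anything else in it.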

inspiration! prompt was "COLUMN (((marsey))) as a ROW"

FIXING YOUR MARSEYS

Now, no matter how good your favorite outputs are, they probably aren't ready for submission yet. (Hence why carp was mad). Some fixing may be necessary.

The first order of business is the eyes. AI-generated images are often bad with the eyes (although the recent model is pretty good). The AI isn't actually worse at eyes than at anything else; it's just that our monkeybrains are a lot less forgiving of eye imperfections than of any other imperfection.

There are a couple of different ways to fix the eyes. You can squish them, you can resize them, you can rotate them. If push comes to shove, you can also entirely replace them. Don't feel bad, that's how 99% of marseys are made in the first place.

I use paint.net for marseys because it is free and easy, if you understand paint you should be able to understand paint.net. For most edits I use the brush tool with a size of 4 and 100% hardness. I also color select from other similarly colored parts of the marsey to make sure I am following a color palette (most marseys don't use very many colors)

Another tool I use is gaussian blur to blend colors more evenly. Also make sure you remove the background and crop to the marsey cleanly.

It doesn't just have to be fixes! Let your imagination go wild, and use the AI's output as a starting point. For one marsey, I made her match the Russian flag by coloring her sneakers red, and made her tracksuit an Adidas tracksuit.

Oh one more thing. Most of the time, marseys will be inline, which means they will be relatively small. So you should make sure that the output is easy to parse from a distance. People should be able to understand it intuitively as a symbol without seeing it up close. My favorite marsey is probably the music-listening one, because it works so well as a symbol from a distance - it is obviously representing music or listening to music and is hecking cute.

FINAL STEPS

To avoid spamming carp  maybe make a post on /h/marsey first and see what people think before submitting it. I like to tell people what the steps I took to improve the marsey were, and also provide the original, and a few that I like but didn't make the cut. They will probably call you a lazy faggot but that is the essence of rdrama so don't worry about it


also stop making OMG THIS IS THE FIRST THING THE AI OUTPUTTED ISN'T IT SO COOL posts. we know

OTHER FUN TOYS TO PLAY WITH

if you have an idea for a marsey, but you are a shit artist, try sketching it and feeding it into SD. in the webui, there is a tab called img2img. Upload the picture there, and type a description of the image you drew, and click generate. This should generate variations of what you drew.

- there are several things to play around with there. try tinkering with the CFG scale and Denoising level. I have found that denoising about 0.7 gets sufficiently novel results, but try playing around with it on your own. A tool that can help you is the X/Y plot script, which should be fairly self-explanatory.

you can use AI to upscale your results! sometimes this is a bit derpy, and I haven't played around with it much, but it is possible! see the "Extras" tab for that stuff.

you can make LOOPING marseys. That's right, if you want to have a cleanly looping wallpaper of marsey, you can do that! just check the "tiling" checkbox.

a single looping marsey tile

same tile, tiled

- want to make ungodly abominations? you can do that! try changing the width and height to something besides 512x512 to see some really wacky stuff. now, the marsey model performs pretty well compared to most other things, and you can get some normal pictures, like this happy family

... but you can also get really weird stuff, like this guy

IS IT ART?

I think there is more art to this process than people think there is. Firstly, I would argue the act of generating and cherrypicking the best results is an artistic process itself. You type in a prompt (a form of artistic inspiration) and you look for outputs that you like (also an artistic process). Also, as you learn how the software works, and get an intuitive feel for how it works, you actually gain skill. For instance, knowing the effect of CFG scale, and what other AI tools can improve your work, is a form of mastery.

Secondly, of course, there needs to be an editing process, and this also shows about the same level of artistry as 75% of marseys. After all, most marseys are kitbashed anyways by amateurs so what's the big deal.


Ultimately I think art is mostly about making things that people enjoy rather than a specific process. I think most people here would agree. I think AI-generated marseys are a good thing as long as they are used sparingly, with an eye for quality instead of quantity.

I think a lot of people rush to the most negative possible conclusion about a thing rather than considering the positives. I think that AI art has drawbacks, but I also think it can make the world a slightly nicer place, with more cool things to look at

touch foxglove NOW


Guide to (good) AI Marsey Generation



Thank you for the guide, I'm still just trying to get it set up.

Anywho though I just got lucky on the 4090 launch, and as a result I've got a spare 3090 rig + a gigabit fiber connection, so I was wondering if you or @float-RIP knew if there was an r-slur way to set up a spare rig with the 3090 along the lines of Float's fricking awesome remote prompt post with the rented GPUs a few weeks ago?


Mainly I want to hook my big sister up with access since she's basically an neurodivergent NEET ultrapoor, but she's amazing at the DALLE/SD prompts. Dramatards can use it too for as long as I feel like donating the watts, may even add the 4090 too if possible when I'm at work.

But yeah I haven't taken programming since like learning Pascal twenty years ago so I'm too rusty to figure this shit out on my own.

Thanks again!!


Easiest way is probably to get a web UI like this running and then install Tailscale on both computers so she can access it with a static IP. Lmk if I can help with either of those parts


Nice! I've got the UI running now, is tailscale kind of akin to teamviewer/realvnc for the laymen w/extra features? or does it allow someone to connect to my home network or something without having to jump through extra hoops to remote into the computer?

edit: I'ma give it a shot, thank you!!


Yeah, exactly. It's just a VPN/tunnel so you can share your network with someone without also exposing it to the broader internet


Greatly appreciated. I got it set up and going, got the main rig configured to be an exit node, but I think I'm doing it wrong. The peripheral devices I can connect to it now act like they're coming from the main computer, but the localhost:7860 web UI doesn't load for them. I set the main rig/exit node to allow local network access but still nothing.

Do I need to configure something more specific? I was looking at the How-to Guide - Subnet routers and traffic relay nodes but I'm a little drunkenly confused on what to do from here.


You can uncheck the exit node bit, that's just if you want to use your computer as a proxy. Are you connecting to the Stable Diffusion machine with the IP listed here? https://login.tailscale.com/admin/machines something like 100.126.150.121:7860


Gotcha. Yeah I'm connected to the machines IP, but the devices do not list a port (?) .. as in its just the IP eg 100.126.150.121 with no ":7860"


:7860 is wherever automatic's UI is running. If you can access it at localhost:7860 on your SD machine, get the IP of that machine (say 100.126.150.121) and visit 100.126.150.121:7860 on the other Tailscale connected device, it should load for you.
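The check described above can be sketched as a tiny script, which takes the browser out of the equation. The addresses are the example ones from this thread, so substitute your own; `can_connect` is a made-up helper name.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


# Example usage:
# can_connect("127.0.0.1", 7860)        # run this on the SD machine
# can_connect("100.126.150.121", 7860)  # run this on the other Tailscale device
```

If the first check succeeds and the second fails, one possibility is that the UI is only listening on the local loopback address. The web UI has a `--listen` command line flag for accepting connections from other machines, which may be worth trying, though that is a guess about this particular setup.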


Meh, still not loading

It does seem possible according to some of the stuff I could find

https://weekly.elfitz.com/2022/09/02/everybodys-doing-it-a-stable-diffusion-test-post/

https://forum.tailscale.com/t/access-to-ports-only-open-on-localhost/2432

But I'm not sure if I'm overthinking it now
