not only does it convert back losslessly it also has the noise removed

— moderate rock (@lookoutitsbbear) May 27, 2024

I am not an electrical or comp sci engineer, but I have had some experience on the electrical side of things lately and have started becoming familiar with signal processing, so bear with me on minor mistakes if you are one of the nerds familiar with it. For those of you who are too  or not deep enough on the  spectrum to understand signal processing, all you need to know is this: noise is not data. It is never data. A good signals engineer must attempt to minimize noise as much as possible.

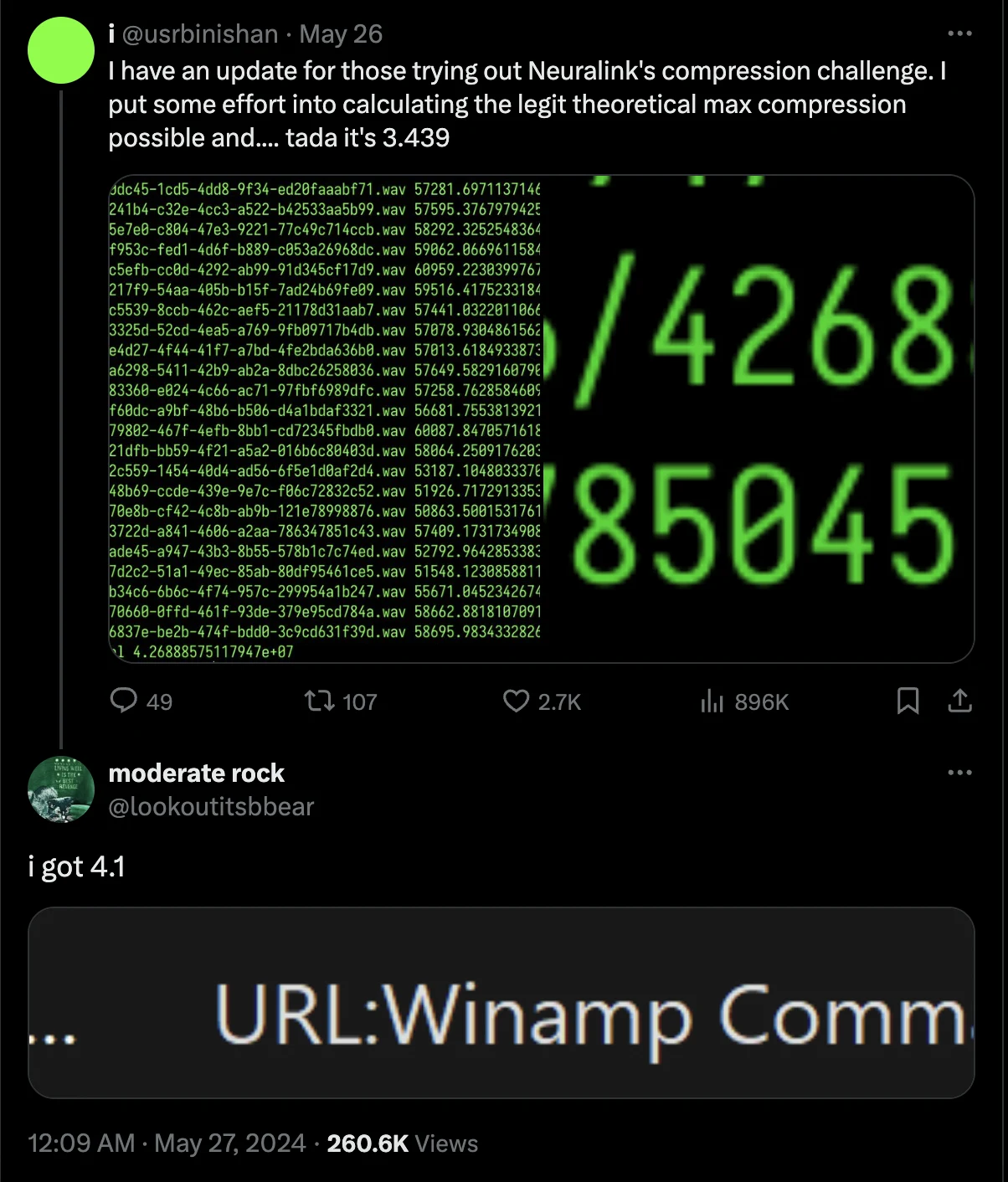

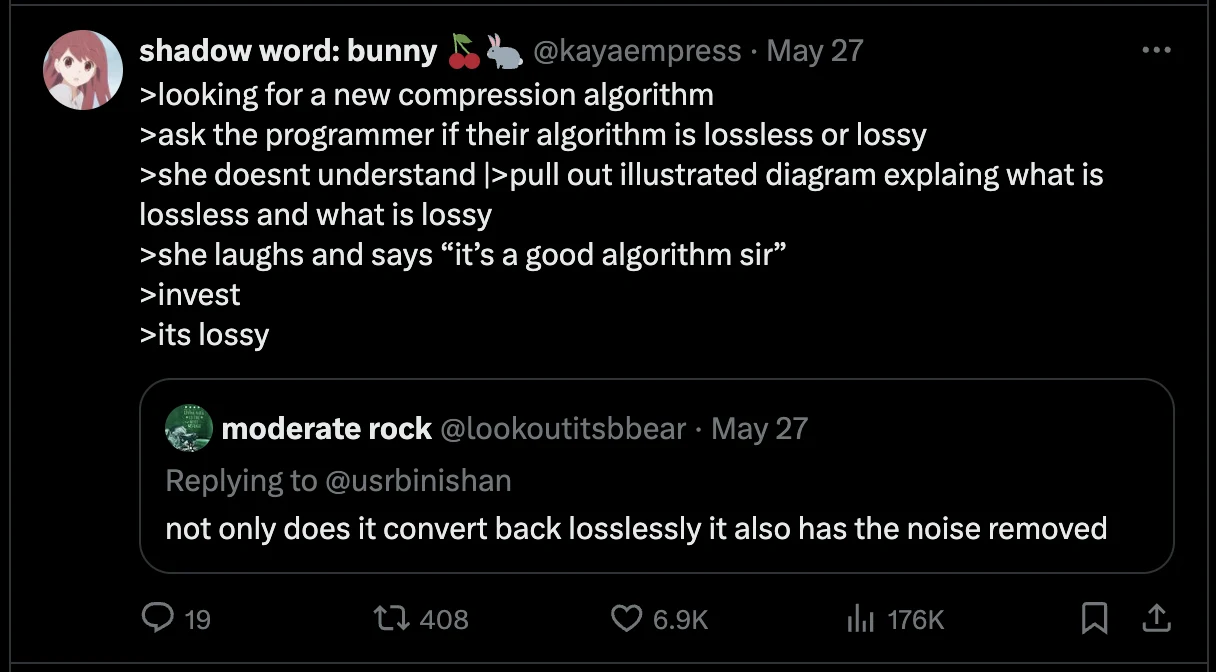

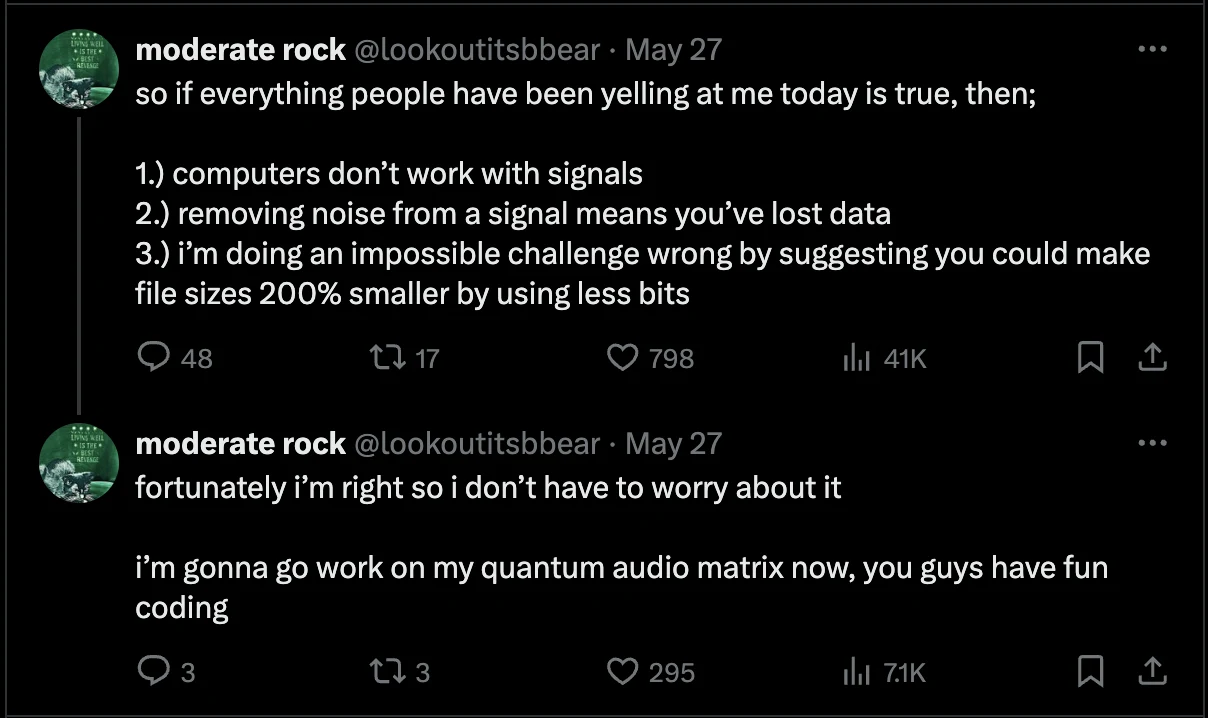

Neuralink recently put out a challenge asking for an insane level of compression (200x) on a file containing a transmitted signal, with the requirement that the compression be lossless (meaning the original file data can be perfectly recovered from the compressed file data). Neurodivergents online immediately set to work figuring out the maximum compression achievable. Enter our king and hero, moderate rock (user @lookoutitsbbear).
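For anyone unclear on what "lossless" means in practice, here is a minimal sketch of the kind of check involved, assuming a plain byte-for-byte comparison. Neuralink's actual evaluation harness is not shown in the thread, so `zlib` stands in as the compressor and the sine-plus-noise signal is hypothetical data, not the challenge file.

```python
import zlib

import numpy as np


def compression_ratio(original: bytes, compressed: bytes) -> float:
    """Original size divided by compressed size (a 200x target means 200.0)."""
    return len(original) / len(compressed)


def is_lossless(original: bytes, compress, decompress) -> bool:
    """True only if decompressing the compressed bytes returns every original byte."""
    return decompress(compress(original)) == original


# Hypothetical stand-in for a recorded signal: a slow sine wave plus small
# Gaussian noise, quantized to 16-bit integers.
rng = np.random.default_rng(0)
t = np.arange(20_000)
signal = (1000 * np.sin(2 * np.pi * t / 500) + rng.normal(0, 20, t.size)).astype(np.int16)
raw = signal.tobytes()

compressed = zlib.compress(raw, 9)
print(is_lossless(raw, lambda b: zlib.compress(b, 9), zlib.decompress))  # True
print(f"ratio: {compression_ratio(raw, compressed):.2f}x")
```

A general-purpose compressor like this lands far below 200x on noisy data, which is why entrants were hunting for signal-specific tricks.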

MR states that so far he's found a way to compress the initial data file by a factor of 4.1, the highest seen so far.

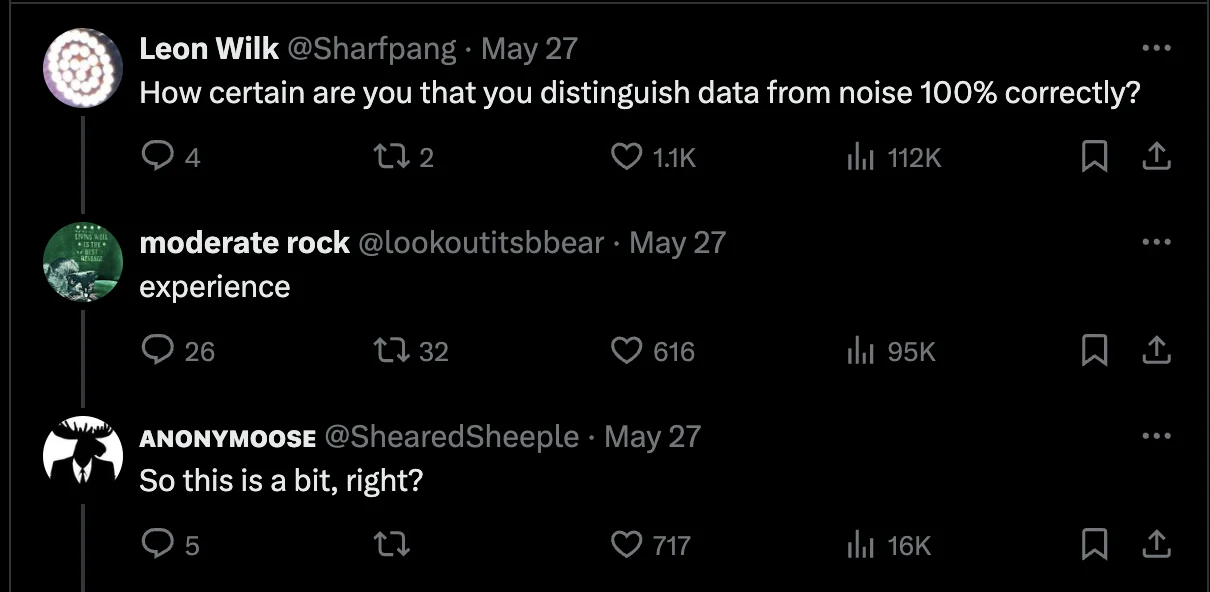

Curious, people start asking how well this actually works.

https://x.com/lookoutitsbbear/status/1794962035714785570

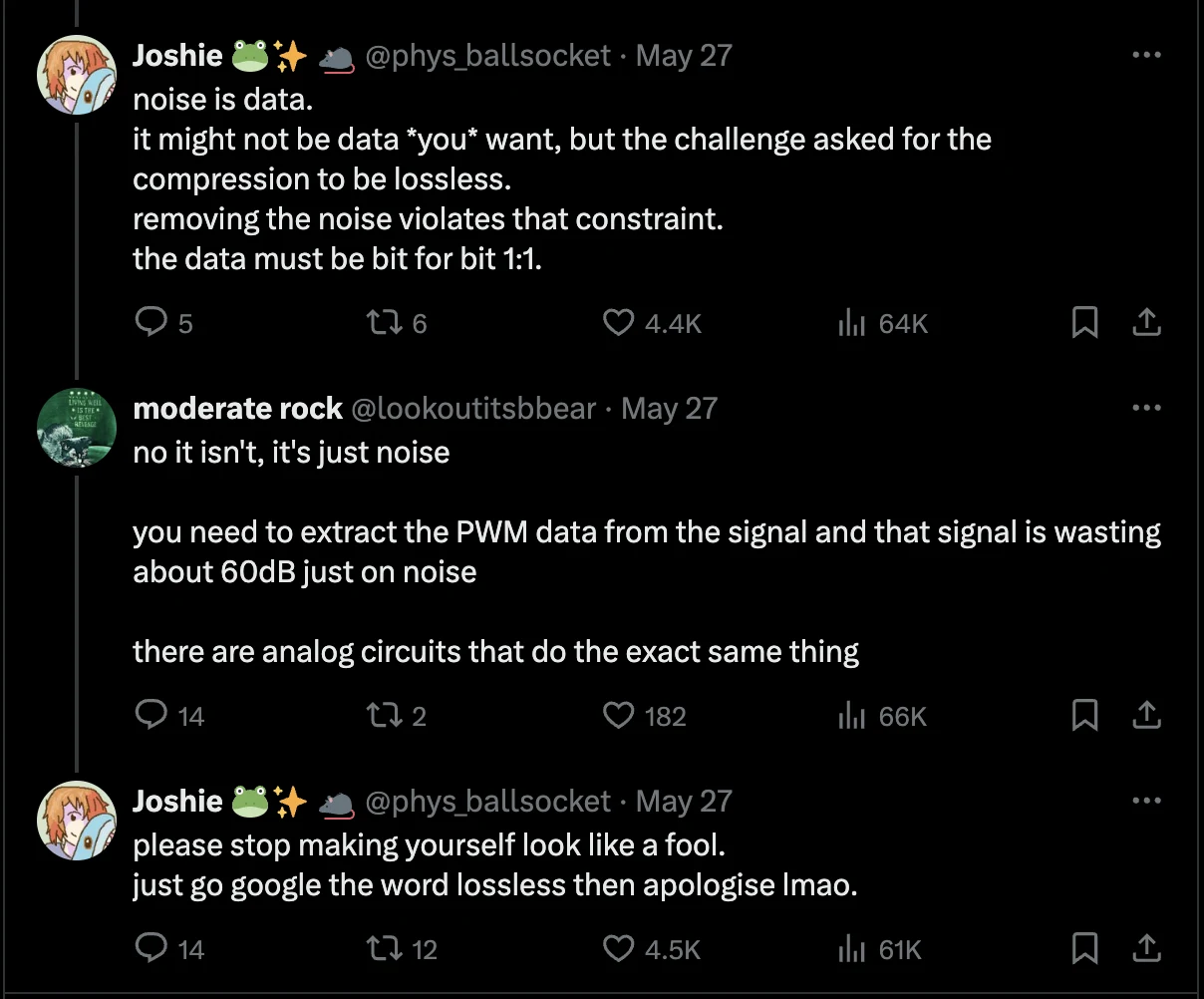

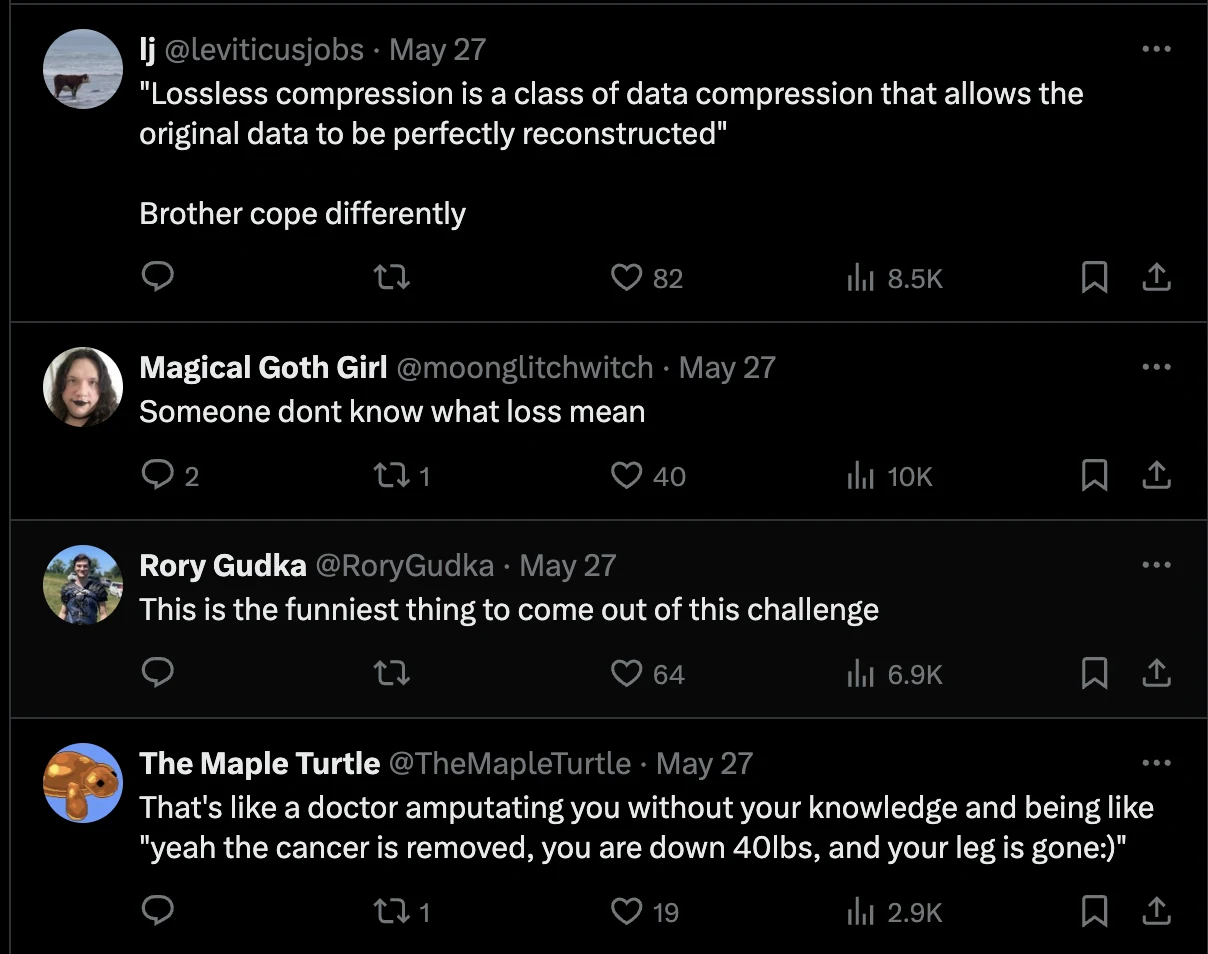

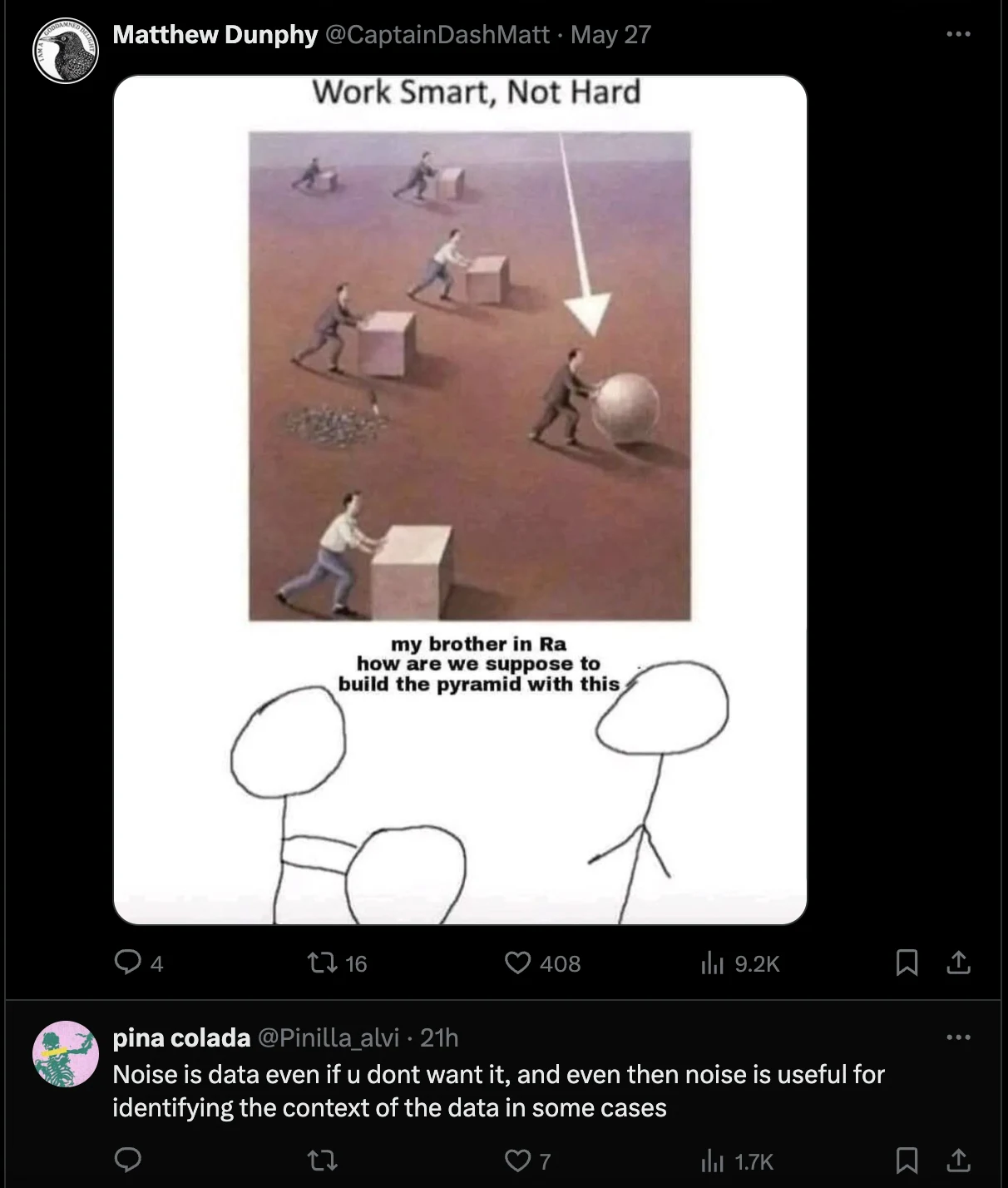

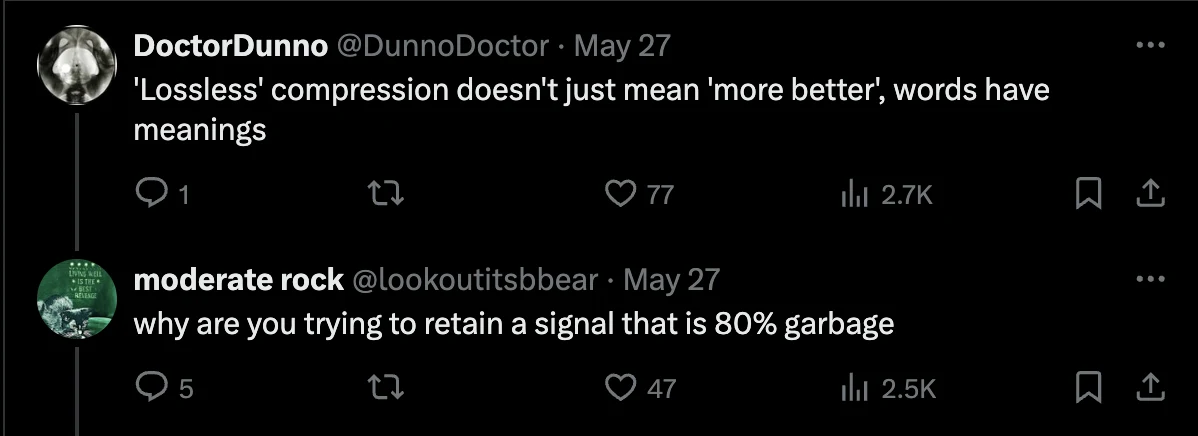

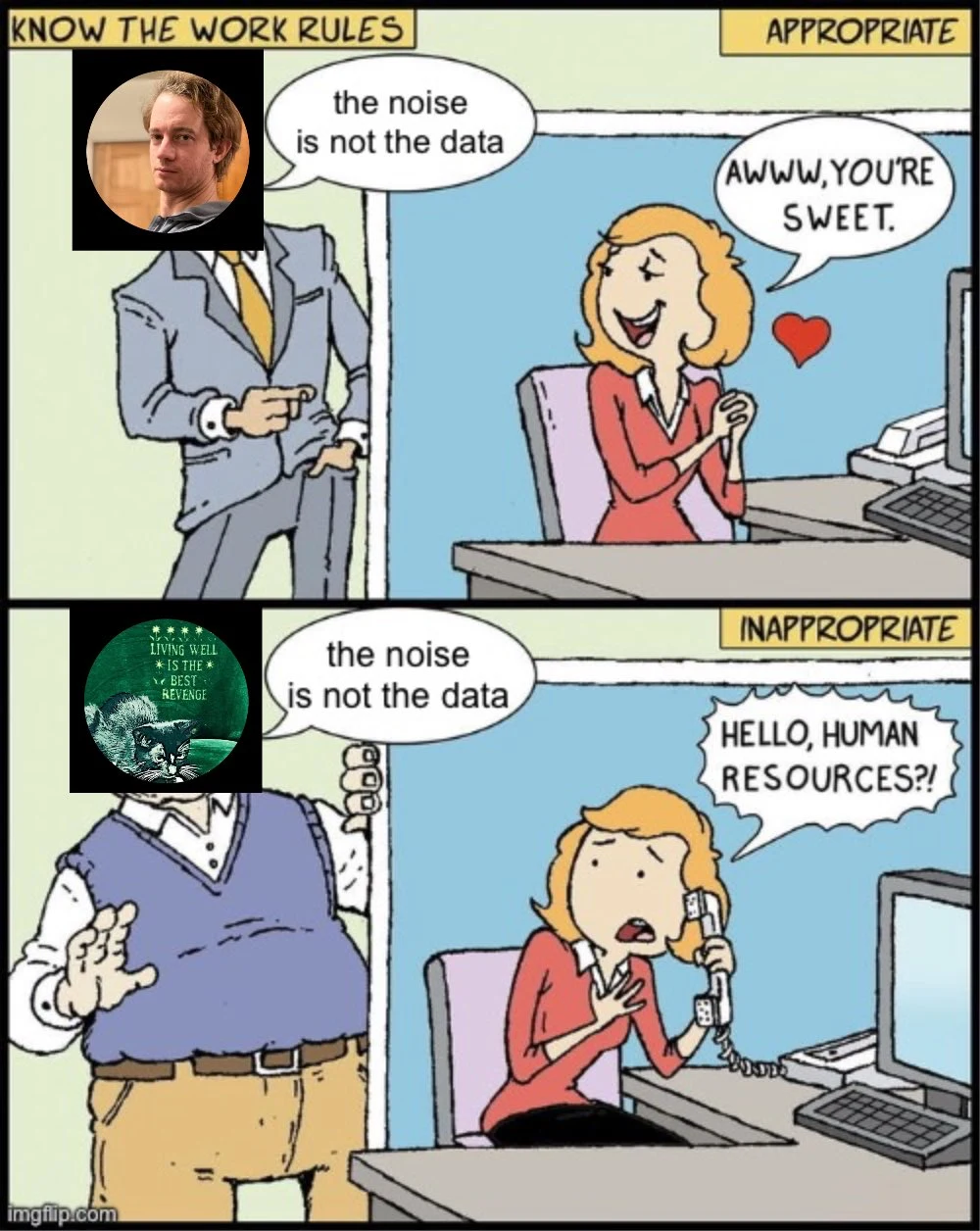

And this is where all heck breaks loose. See, Neuralink had technically asked for the original file to be compressed without any data lost. To the average midwit and/or software engineer, this means taking the original file and just making it smaller. But MR did what any good signal engineer would do and worked on filtering the signal to get rid of unwanted and unnecessary information (the noise) so that he could do a better job of compressing the data. Midwits do not understand that noise is not relevant to a signal's information. Because of this, MR has sinned, and for this he must be dunked on. So the beatings commence.

"Heh kid, just google it. You idiot. You moron."

There are multiple midwits continuing to repeat the "noise is data" line, as though repeating it makes it true.

Once again, noise is not a relevant part of a signal.

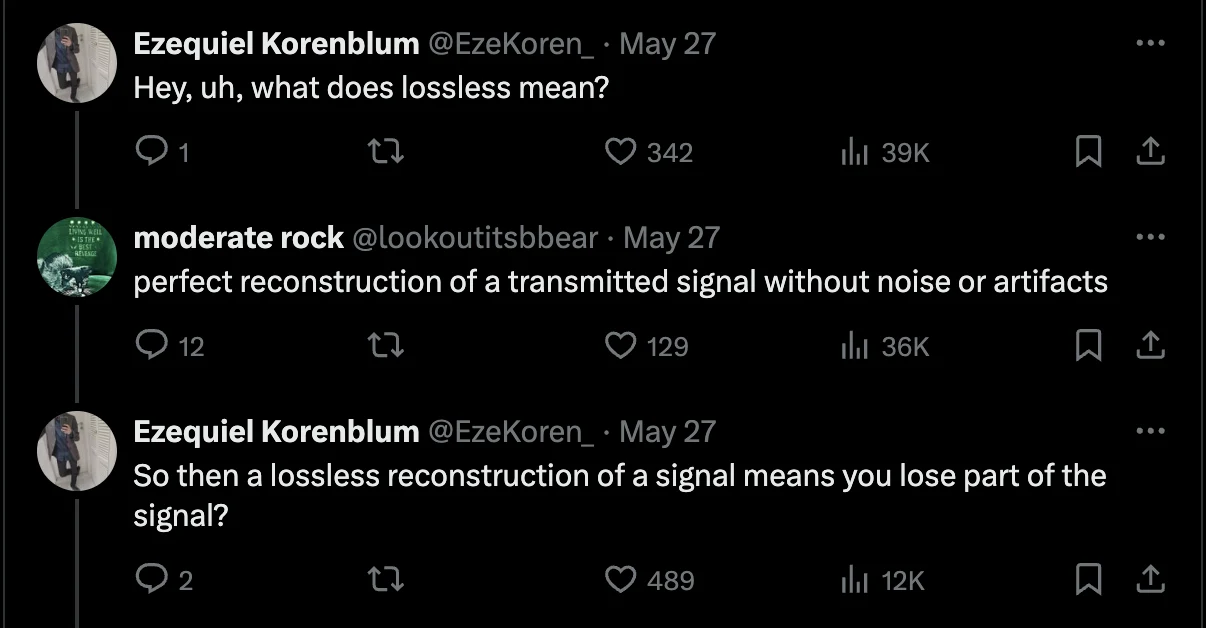

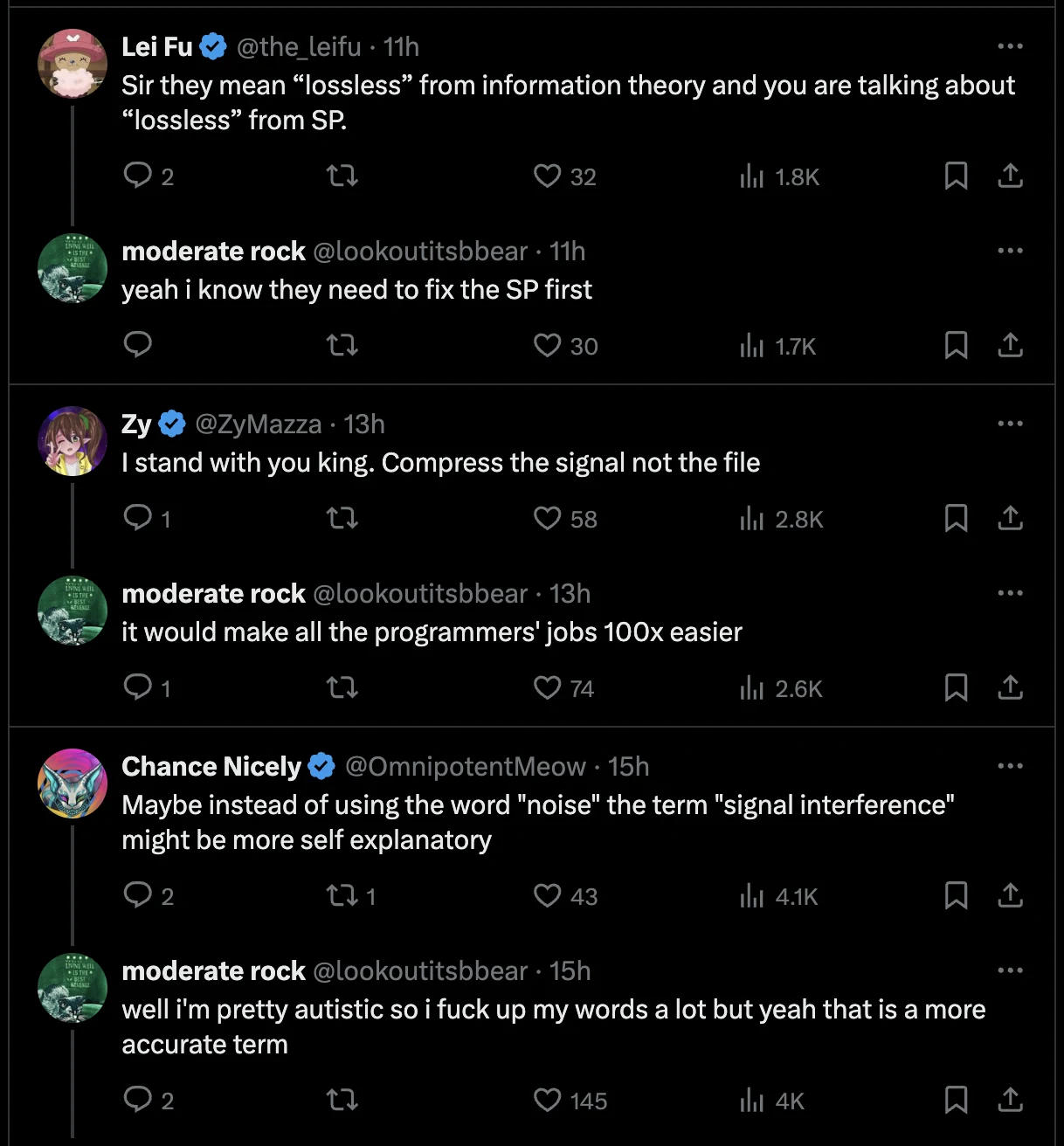

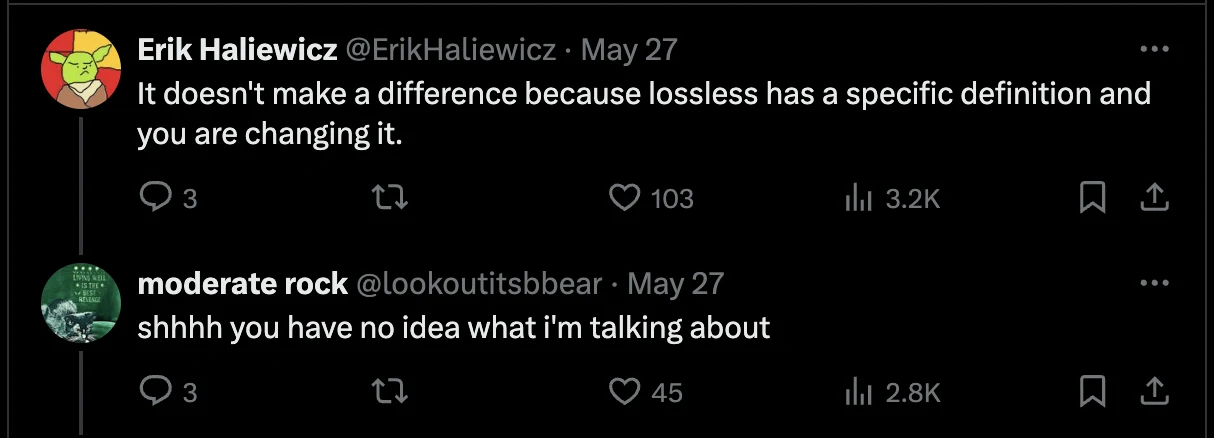

The midwit bonanza continues, once again acting as though noise is an important part of a signal.

Quote tweets even gain major traction dunking on him even though they're all wrong.

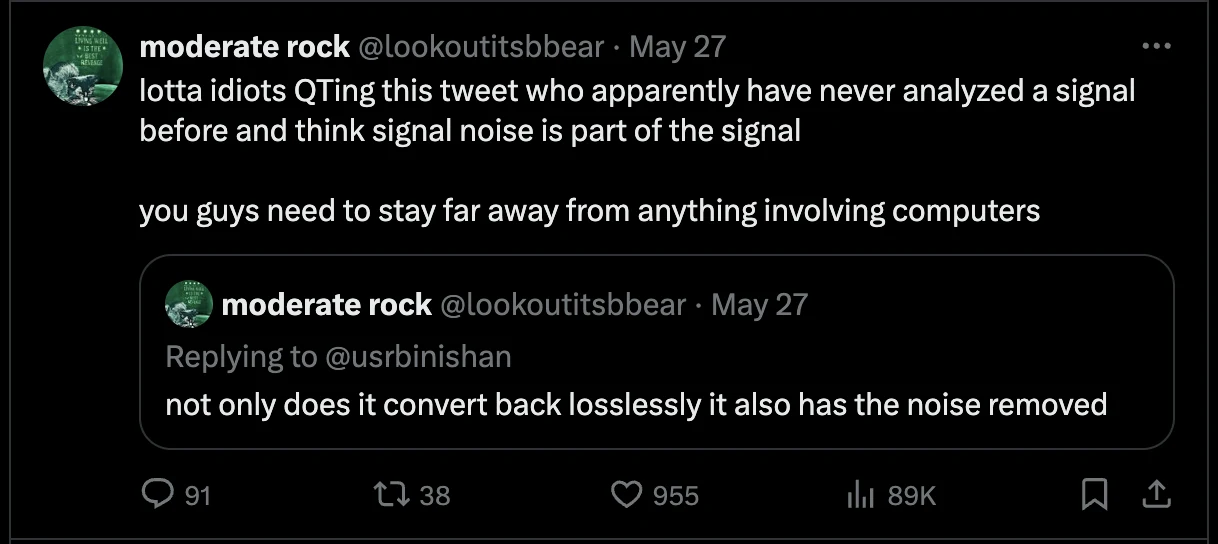

MR makes a quote tweet that at least gains traction with people who understand him, and the semantics argument becomes a bit more present.

Yes, on a technical level it is not lossless, as MR removed "information." But the "information" he removed is not relevant. The original signal present in the original file is recoverable after the compression. So he has done it correctly. MR also points out that the Neuralink engineers are being stupid because they should be working on getting rid of the noise before working on the compression.
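To make the "technically not lossless" part concrete, here is a minimal sketch assuming a simple moving-average low-pass filter as the denoiser. MR's actual filtering method isn't stated in the thread, so the filter, its width of 15, and the delta-plus-`zlib` size measurement are all illustrative choices, and the signal is hypothetical.

```python
import zlib

import numpy as np


def moving_average(x: np.ndarray, width: int) -> np.ndarray:
    """Simple low-pass filter: average each sample with its neighbours."""
    kernel = np.ones(width) / width
    return np.convolve(x, kernel, mode="same")


def delta_compressed_size(samples: np.ndarray) -> int:
    """zlib size after delta encoding, which favours slowly varying signals."""
    deltas = np.diff(samples, prepend=0).astype(np.int16)
    return len(zlib.compress(deltas.tobytes(), 9))


# Hypothetical recording: a sine wave plus small Gaussian noise, stored as int16.
rng = np.random.default_rng(0)
t = np.arange(20_000)
original = (1000 * np.sin(2 * np.pi * t / 500) + rng.normal(0, 20, t.size)).astype(np.int16)

# "Denoise" first, then round back to the same 16-bit integer format.
denoised = np.round(moving_average(original.astype(np.float64), 15)).astype(np.int16)

# A byte-for-byte comparison against the original fails: the filter changed samples.
print(np.array_equal(original, denoised))  # False
# Compare sizes yourself; no particular ratio is claimed here.
print(delta_compressed_size(original), delta_compressed_size(denoised))
```

Whatever compressor runs afterwards, it can at best reproduce `denoised`, so a grader that diffs against the original file will flag it.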

Of course, the midwits can't admit they're wrong and continue to argue the semantics.

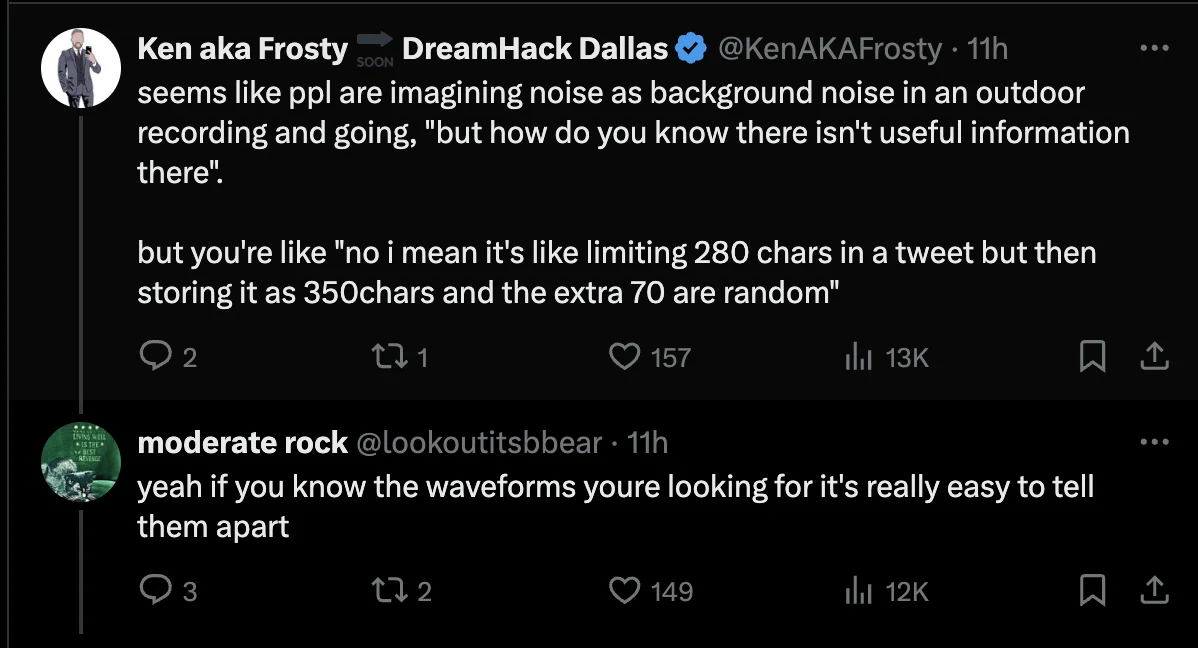

Someone finally makes a decent enough analogy to understand what he's getting at.

There are a lot of continued insults and attempted dunks here (too many for me to add at this point); the midwits keep trying to dunk on him, and he keeps shrugging it off.

Adding to the hilarity, a PhD looks at the Neuralink thing and comes to the same conclusion on his own, that removing noise from the signal will help compression.

https://x.com/CJHandmer/status/1795486204185682315

https://x.com/kindgracekind/status/1795577979952845220

(some cope in the replies of this one that MR was "wrong" because of how he stated it, even though he wasn't)

Finally, our king decides to take a rest, having survived his beatings and come out stronger.

So remember, dramatards: your average midwit has no clue what they're talking about, software engineers should stick to learning to code, and you should always listen to the neurodivergent savants.

Could someone please saw down this rifle barrel for me?

he falls back on his actual point (the noise is useless) whenever someone tells him his r-slurred point (removing noise is lossless compression) is r-slurred.

ur r-slurred for blindly agreeing with him

Yeah tbf it's not TeChNiCaLlY lossless, but the real point is that doesn't matter in this context

"in this context" how do you know? Are you the one who put up the challenge? If so, why did you specify lossless and that it would be evaluated by a program which COMPARES IT TO THE ORIGINAL DATA

This just goes back to what we're defining as data; if you're considering the noise to be data then you basically can't compress it and it's a trick question.

i dont think thats right. Because audio has a temporal component, any sound where the samples stay exactly the same for longer than one sample period can be compressed, even if you retain all your noise from, like, super-Nyquist frequencies.
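The "stretches of identical samples compress" idea can be shown with run-length encoding, which is just the simplest illustration and not what any entrant is confirmed to have used. The sample values below are hypothetical; the jittered list mimics the ".0003 variance" objection in the next reply.

```python
from itertools import groupby


def run_length_encode(samples):
    """Collapse runs of identical consecutive samples into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(samples)]


def run_length_decode(pairs):
    """Expand (value, count) pairs back into the original sample sequence."""
    return [value for value, count in pairs for _ in range(count)]


# Hypothetical samples: a signal that holds steady, then changes.
held = [0, 0, 0, 0, 0, 7, 7, 7, 3]
encoded = run_length_encode(held)
print(encoded)  # [(0, 5), (7, 3), (3, 1)]
assert run_length_decode(encoded) == held  # lossless round trip

# Tiny jitter breaks every run, so nothing is saved.
jittered = [0.0, 0.0003, 0.0, -0.0002, 0.0001]
print(len(run_length_encode(jittered)))  # 5
```

Exact repetition is what this scheme needs; any noise at the stored precision turns every sample into its own run.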

Yes but my decimal precision is higher than yours so your "exactly the same" is a .0003 variance to me.

That sounds correct. Idk all that much about this stuff, but I stand by my point that noise is not data.

yes you can, even in the absolute worst case where it is pure Gaussian noise (which is not what is happening here)

https://stats.stackexchange.com/questions/260869/can-white-noise-be-losslessly-compressed
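One way to see why even pure Gaussian noise can shrink losslessly: once quantized to integers, its entropy is well below the 16 bits per sample used to store it. This is a minimal sketch assuming integer quantization, an int16 container, and a standard deviation of 100, all hypothetical choices; it is not taken from the linked answer. For $\sigma \gg 1$ the entropy of integer-quantized $\mathcal{N}(0, \sigma^2)$ is approximately $\tfrac{1}{2}\log_2(2\pi e \sigma^2)$, about 8.7 bits here.

```python
import math
import zlib

import numpy as np


def empirical_entropy_bits(values: np.ndarray) -> float:
    """Shannon entropy (bits per sample) of the observed value histogram."""
    _, counts = np.unique(values, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())


# Hypothetical worst case: pure Gaussian noise, rounded and stored as int16.
rng = np.random.default_rng(1)
sigma = 100
noise = np.round(rng.normal(0, sigma, 50_000)).astype(np.int16)
raw = noise.tobytes()

# Entropy of integer-quantized N(0, sigma^2), valid when sigma >> 1.
theory = 0.5 * math.log2(2 * math.pi * math.e * sigma**2)
print(f"theory:    {theory:.2f} bits/sample")
print(f"empirical: {empirical_entropy_bits(noise):.2f} bits/sample (stored in 16)")

# zlib codes bytes independently, so it captures only part of that slack.
packed = zlib.compress(raw, 9)
print(f"zlib: {len(raw)} -> {len(packed)} bytes, round trip ok: {zlib.decompress(packed) == raw}")
```

The savings come from the container being wider than the noise needs, not from any pattern in the noise itself.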