https://news.ycombinator.com/item?id=38327520

Google and Chinese AI companies watching this unfold like

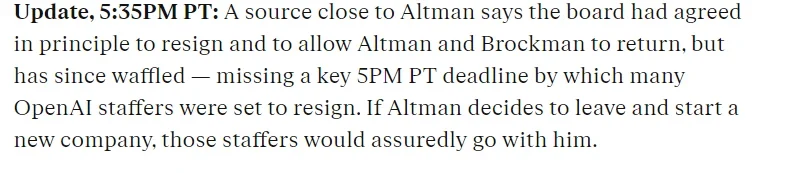

UPDATES

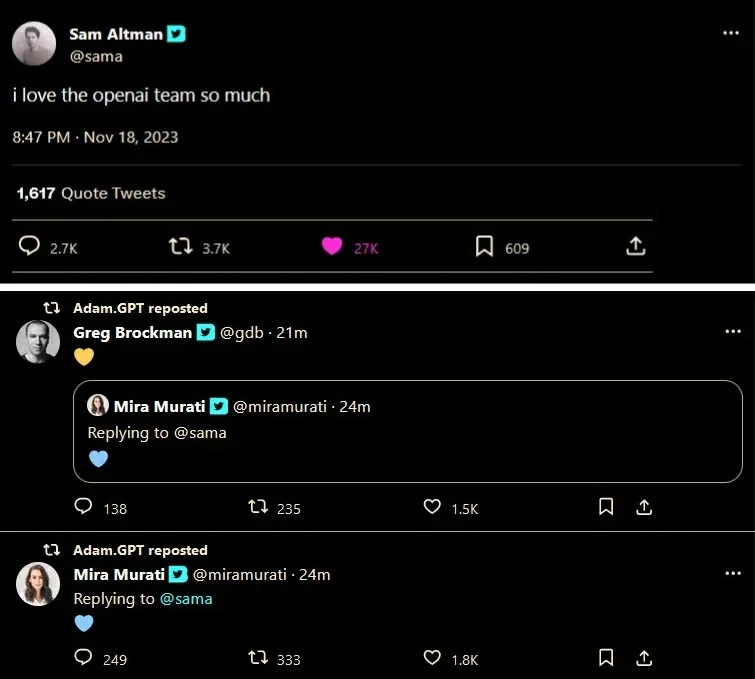

I think this means something

The Chief Strategy Officer told employees they are confident about bringing everyone back and will have an update tomorrow

Ilya about to be stebbed?

https://old.reddit.com/r/singularity/comments/17yt8sl/what_does_this_mean?sort=controversial

Nice to know that even rich tech dorks tweet like high schoolers

I mean, yeah, that's sort of the inevitable conclusion if, instead of actual compassion, you run a neurodivergent simulation. Complete with the part where, before killing yourself, you must ensure that your AI will be successful at colonizing and sterilizing its light cone.

Fun fact: I once got into a debate with Tomasik, back when /r/drama had pinging, and I found his weak spot: ask him why worker bees would fear death, if they were capable of fearing death. This kinda breaks his anthropomorphizing routine, and at least back then he started to flail around randomly and bowed out shortly thereafter.

Buddhist Effective Altruists are not what I had on my "Group Most likely to destroy humanity" bingo card...

Also, I fricking miss pinging. All those easy-access lolcows, we had it so good back then and didn't even know...
