Windows 11 really is the new Windows Vista

https://old.reddit.com/r/technology/comments/ykeckh/windows_11_runs_on_fewer_than_1_in_6_pcs/

https://old.reddit.com/r/tech/comments/ykqf28/windows_11_runs_on_fewer_than_1_in_6_pcs/

Orange Site:

https://news.ycombinator.com/item?id=33438877

@WindowsShill

@EdgeShill

@BeauBiden

@Soren

@BraveShill

@garlicdoors

@jannies

@NoUntakenNames01

@schizo

@dont_log_me_out

@justcool393

@grizzly

@DrTransmisia

@nekobit

@Maximus

@Basedal-Assad

@AraAra

@getogeto

@duck discuss

- box : Delete yourself

Yes, @Not_Chris_Deliah know

@Not_Chris_Deliah am typing on my PC (@jannies) but that's only because @Not_Chris_Deliah is updating my Macbook right now.

Trans lives matter...

We’re currently hard at work to make Twitter better for everyone, including developers! We’ve decided to cancel the #Chirp developer conference while we build some things that we’re excited to share with you soon.

— Twitter Dev (@TwitterDev) November 2, 2022

The only cool thing that actually attracts non codecels is when you make and play with anything graphical because ppl like to consoooom the video media

I added Xorg trackpad gestures to KDE Plasma (gonna send a PR soon here). The only gestures I didn't show off were the pinch and overview ones; it's just the virtual desktop switching for now.

Codecel out

I won't lie, I did this because Wayland is fricking broken, but I'm using a Soybook 2012, and anything that's not a Thinkpad is unusable once you learn about trackpad gestures. Seriously, they are very useful. I love them, I love my soypad gestures.

- Ubie : h/cyberspace

- TheRInWreathStandsForRoxy : arrangements

- TedKaczynski : BIAST

Too bad they block the rdrama.cc instance.

Orange Site:

https://news.ycombinator.com/item?id=33433053

https://old.reddit.com/r/hackernews/comments/yk614c/mastodon_gained_70k_users_after_musks_twitter/

https://old.reddit.com/r/Mastodon/comments/yjzslr/mastodon_gained_70000_users_after_musks_twitter/

https://old.reddit.com/r/fediverse/comments/yjx6c4/social_media_mastodon_gained_70000_users_after/

@nekobit discuss

Orange Site:

More purchase history: https://archive.ph/https://www.amazon.com/gp/cart/view.html?ref_=nav_cart

Tumblr really wants its former users back on it lmao.

More Apple Fanboy Drama:

https://9to5mac.com/2022/11/01/eu-force-third-party-app-stores-on-apple/

https://old.reddit.com/r/apple/comments/yjb5lt/new_eu_law_could_force_apple_to_allow_other_app/

https://old.reddit.com/r/gadgets/comments/yjbo1v/new_eu_law_could_force_apple_to_allow_other_app/

https://old.reddit.com/r/technology/comments/yjbo7s/new_eu_law_could_force_apple_to_allow_other_app/

Orange Site:

https://news.ycombinator.com/item?id=33422415

Slashdot:

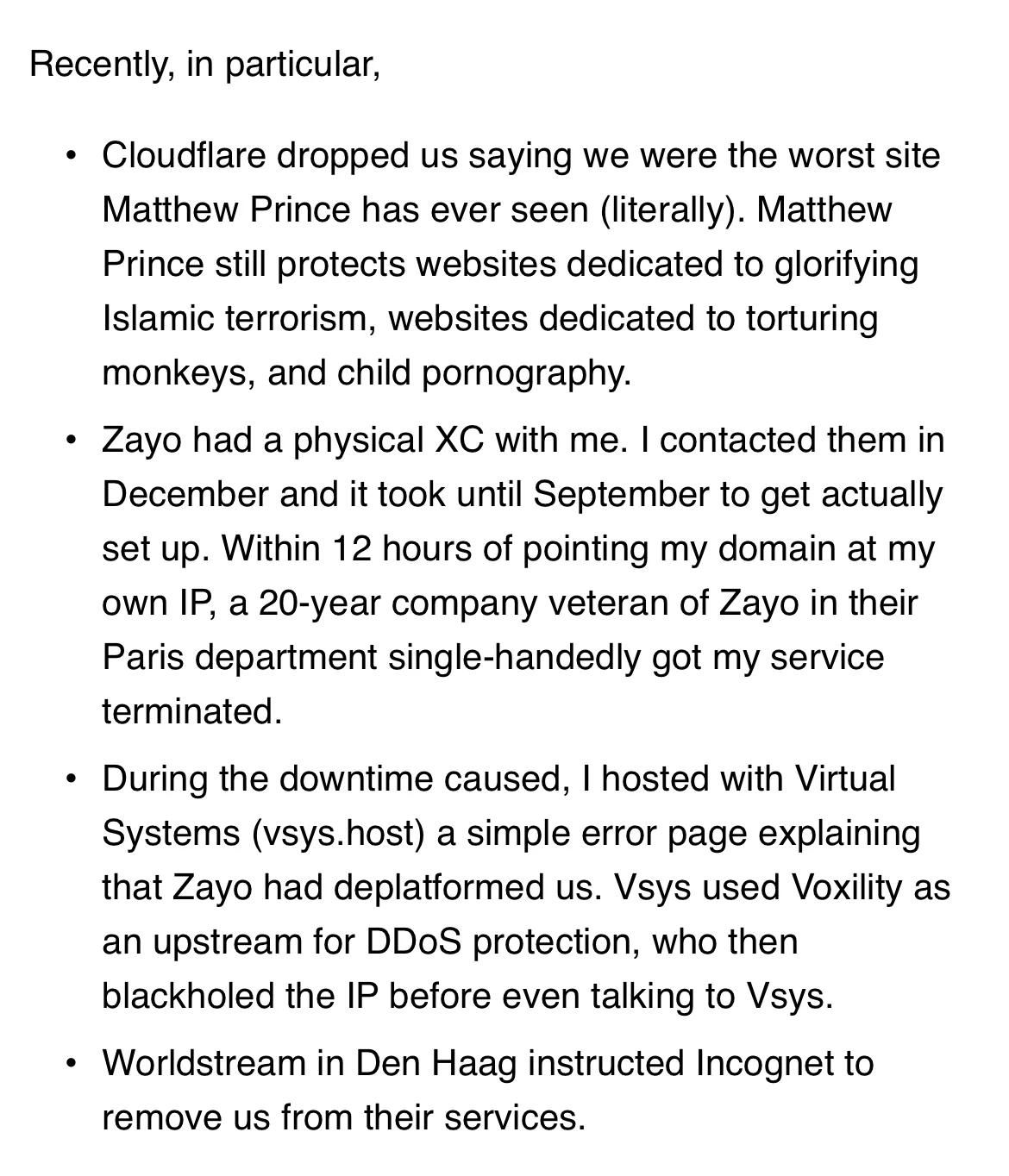

He also created malware

A 26-year-old Ukrainian man is awaiting extradition from The Netherlands to the United States on charges that he acted as a core developer for Raccoon, a popular “malware-as-a-service” offering that helped paying customers steal passwords and financial data from millions of cybercrime victims. KrebsOnSecurity has learned that the defendant was busted in March 2022, after fleeing mandatory military service in Ukraine in the weeks following the Russian invasion.

Authorities soon tracked Sokolovsky’s phone through Germany and eventually to The Netherlands, with his female companion helpfully documenting every step of the trip on her Instagram account. Here is a picture she posted of the two embracing upon their arrival in Amsterdam’s Dam Square

Trans lives matter. Admins do not

In the settings, there is an option to enable a spider pet. There is a shit award, which makes flies swarm the post. The spider pet should run after the flies and kill them.

Orange Site:

https://news.ycombinator.com/item?id=33419138

https://news.ycombinator.com/item?id=33371725

https://old.reddit.com/r/Android/comments/yff64w/semianalysis_arm_changes_business_model_oem/

https://old.reddit.com/r/hardware/comments/yfd6w4/semianalysis_arm_changes_business_model_oem/

https://old.reddit.com/r/programming/comments/yj6x3s/arm_will_prohibit_the_proximity_of_its_cpu_and/

Hiding from the feds cels stay winning!

I can't fricking even lmao

But the system works (to the extent it does work) because verification helps separate order from chaos. A blue checkmark is a vital time-out in the game of Twitter, signaling that you can reasonably believe a person, agency, or brand is actually speaking for itself. It removes the guesswork of scanning an account’s tweets and profile to gauge its veracity, especially in a fast-moving situation like a scandal, an election, or a public health emergency. It’s the seal of authenticity that gives serious accounts license to be playful, trusting that readers can check for their credentials.

And to be blunt, Twitter needs a verified user base more than a lot of individuals need those little blue checks.

- Lv999_Lich_King : h/mnn

- X : why you wanna reduce the audience size for this post bro

- RichEvansOnlyfans : Even if you hate chuds, the govt is still not your friend

https://theintercept.com/2022/10/31/social-media-disinformation-dhs/

More threads:

https://old.reddit.com/r/stupidpol/comments/yig30p/leaked_documents_outline_dhss_plans_to_police/

https://old.reddit.com/r/conspiracy/comments/yigt8g/leaked_docs_show_facebook_and_twitter_closely/

THE DEPARTMENT OF HOMELAND SECURITY is quietly broadening its efforts to curb speech it considers dangerous, an investigation by The Intercept has found. Years of internal DHS memos, emails, and documents --- obtained via leaks and an ongoing lawsuit, as well as public documents --- illustrate an expansive effort by the agency to influence tech platforms.

The work, much of which remains unknown to the American public, came into clearer view earlier this year when DHS announced a new "Disinformation Governance Board": a panel designed to police misinformation (false information spread unintentionally), disinformation (false information spread intentionally), and malinformation (factual information shared, typically out of context, with harmful intent) that allegedly threatens U.S. interests. While the board was widely ridiculed, immediately scaled back, and then shut down within a few months, other initiatives are underway as DHS pivots to monitoring social media now that its original mandate --- the war on terror --- has been wound down.

Behind closed doors, and through pressure on private platforms, the U.S. government has used its power to try to shape online discourse. According to meeting minutes and other records appended to a lawsuit filed by Missouri Attorney General Eric Schmitt, a Republican who is also running for Senate, discussions have ranged from the scale and scope of government intervention in online discourse to the mechanics of streamlining takedown requests for false or intentionally misleading information.

"Platforms have got to get comfortable with gov't. It's really interesting how hesitant they remain," Microsoft executive Matt Masterson, a former DHS official, texted Jen Easterly, a DHS director, in February.

In a March meeting, Laura Dehmlow, an FBI official, warned that the threat of subversive information on social media could undermine support for the U.S. government. Dehmlow, according to notes of the discussion attended by senior executives from Twitter and JPMorgan Chase, stressed that "we need a media infrastructure that is held accountable."

"We do not coordinate with other entities when making content moderation decisions, and we independently evaluate content in line with the Twitter Rules," a spokesperson for Twitter wrote in a statement to The Intercept.

There is also a formalized process for government officials to directly flag content on Facebook or Instagram and request that it be throttled or suppressed through a special Facebook portal that requires a government or law enforcement email to use. At the time of writing, the "content request system" at facebook.com/xtakedowns/login is still live. DHS and Meta, the parent company of Facebook, did not respond to a request for comment. The FBI declined to comment.

DHS's mission to fight disinformation, stemming from concerns around Russian influence in the 2016 presidential election, began taking shape during the 2020 election and over efforts to shape discussions around vaccine policy during the coronavirus pandemic. Documents collected by The Intercept from a variety of sources, including current officials and publicly available reports, reveal the evolution of more active measures by DHS.

According to a draft copy of DHS's Quadrennial Homeland Security Review, DHS's capstone report outlining the department's strategy and priorities in the coming years, the department plans to target "inaccurate information" on a wide range of topics, including "the origins of the COVID-19 pandemic and the efficacy of COVID-19 vaccines, racial justice, U.S. withdrawal from Afghanistan, and the nature of U.S. support to Ukraine."

"The challenge is particularly acute in marginalized communities," the report states, "which are often the targets of false or misleading information, such as false information on voting procedures targeting people of color."

The inclusion of the 2021 U.S. withdrawal from Afghanistan is particularly noteworthy, given that House Republicans, should they take the majority in the midterms, have vowed to investigate. "This makes Benghazi look like a much smaller issue," said Rep. Mike Johnson, R-La., a member of the Armed Services Committee, adding that finding answers "will be a top priority."

How disinformation is defined by the government has not been clearly articulated, and the inherently subjective nature of what constitutes disinformation provides a broad opening for DHS officials to make politically motivated determinations about what constitutes dangerous speech.

DHS justifies these goals --- which have expanded far beyond its original purview on foreign threats to encompass disinformation originating domestically --- by claiming that terrorist threats can be "exacerbated by misinformation and disinformation spread online." But the laudable goal of protecting Americans from danger has often been used to conceal political maneuvering. In 2004, for instance, DHS officials faced pressure from the George W. Bush administration to heighten the national threat level for terrorism, in a bid to influence voters prior to the election, according to former DHS Secretary Tom Ridge. U.S. officials have routinely lied about an array of issues, from the causes of its wars in Vietnam and Iraq to their more recent obfuscation around the role of the National Institutes of Health in funding the Wuhan Institute of Virology's coronavirus research.

That track record has not prevented the U.S. government from seeking to become arbiters of what constitutes false or dangerous information on inherently political topics. Earlier this year, Republican Gov. Ron DeSantis signed a law known by supporters as the "Stop WOKE Act," which bans private employers from workplace trainings asserting an individual's moral character is privileged or oppressed based on his or her race, color, sex, or national origin. The law, critics charged, amounted to a broad suppression of speech deemed offensive. The Foundation for Individual Rights and Expression, or FIRE, has since filed a lawsuit against DeSantis, alleging "unconstitutional censorship." A federal judge temporarily blocked parts of the Stop WOKE Act, ruling that the law had violated workers' First Amendment rights.

"Florida's legislators may well find plaintiffs' speech 'repugnant.' But under our constitutional scheme, the 'remedy' for repugnant speech is more speech, not enforced silence," wrote Judge Mark Walker, in a colorful opinion castigating the law.

The extent to which the DHS initiatives affect Americans' daily social feeds is unclear. During the 2020 election, the government flagged numerous posts as suspicious, many of which were then taken down, documents cited in the Missouri attorney general's lawsuit disclosed. And a 2021 report by the Election Integrity Partnership at Stanford University found that of nearly 4,800 flagged items, technology platforms took action on 35 percent --- either removing, labeling, or soft-blocking speech, meaning the users were only able to view content after bypassing a warning screen. The research was done "in consultation with CISA," the Cybersecurity and Infrastructure Security Agency.

Prior to the 2020 election, tech companies including Twitter, Facebook, Reddit, Discord, Wikipedia, Microsoft, LinkedIn, and Verizon Media met on a monthly basis with the FBI, CISA, and other government representatives. According to NBC News, the meetings were part of an initiative, still ongoing, between the private sector and government to discuss how firms would handle misinformation during the election.

The stepped up counter-disinformation effort began in 2018 following high-profile hacking incidents of U.S. firms, when Congress passed and President Donald Trump signed the Cybersecurity and Infrastructure Security Agency Act, forming a new wing of DHS devoted to protecting critical national infrastructure. An August 2022 report by the DHS Office of Inspector General sketches the rapidly accelerating move toward policing disinformation.

From the outset, CISA boasted of an "evolved mission" to monitor social media discussions while "routing disinformation concerns" to private sector platforms.

In 2018, then-DHS Secretary Kirstjen Nielsen created the Countering Foreign Influence Task Force to respond to election disinformation. The task force, which included members of CISA as well as its Office of Intelligence and Analysis, generated "threat intelligence" about the election and notified social media platforms and law enforcement. At the same time, DHS began notifying social media companies about voting-related disinformation appearing on social platforms.

In 2019, DHS created a separate entity called the Foreign Influence and Interference Branch to generate more detailed intelligence about disinformation, the inspector general report shows. That year, its staff grew to include 15 full- and part-time staff dedicated to disinformation analysis. In 2020, the disinformation focus expanded to include Covid-19, according to a Homeland Threat Assessment issued by Acting Secretary Chad Wolf.

This apparatus had a dry run during the 2020 election, when CISA began working with other members of the U.S. intelligence community. Office of Intelligence and Analysis personnel attended "weekly teleconferences to coordinate Intelligence Community activities to counter election-related disinformation." According to the IG report, meetings have continued to take place every two weeks since the elections.

Emails between DHS officials, Twitter, and the Center for Internet Security outline the process for such takedown requests during the period leading up to November 2020. Meeting notes show that the tech platforms would be called upon to "process reports and provide timely responses, to include the removal of reported misinformation from the platform where possible." In practice, this often meant state election officials sent examples of potential forms of disinformation to CISA, which would then forward them on to social media companies for a response.

Under President Joe Biden, the shifting focus on disinformation has continued. In January 2021, CISA replaced the Countering Foreign Influence Task Force with the "Misinformation, Disinformation and Malinformation" team, which was created "to promote more flexibility to focus on general MDM." By now, the scope of the effort had expanded beyond disinformation produced by foreign governments to include domestic versions. The MDM team, according to one CISA official quoted in the IG report, "counters all types of disinformation, to be responsive to current events."

Jen Easterly, Biden's appointed director of CISA, swiftly made it clear that she would continue to shift resources in the agency to combat the spread of dangerous forms of information on social media. "One could argue we're in the business of critical infrastructure, and the most critical infrastructure is our cognitive infrastructure, so building that resilience to misinformation and disinformation, I think, is incredibly important," said Easterly, speaking at a conference in November 2021.

CISA's domain has gradually expanded to encompass more subjects it believes amount to critical infrastructure. Last year, The Intercept reported on the existence of a series of DHS field intelligence reports warning of attacks on cell towers, which it has tied to conspiracy theorists who believe 5G towers spread Covid-19. One intelligence report pointed out that these conspiracy theories "are inciting attacks against the communications infrastructure."

CISA has defended its burgeoning social media monitoring authorities, stating that "once CISA notified a social media platform of disinformation, the social media platform could independently decide whether to remove or modify the post." But, as documents revealed by the Missouri lawsuit show, CISA's goal is to make platforms more responsive to their suggestions.

In late February, Easterly texted with Matthew Masterson, a representative at Microsoft who formerly worked at CISA, that she is "trying to get us in a place where Fed can work with platforms to better understand mis/dis trends so relevant agencies can try to prebunk/debunk as useful."

Meeting records of the CISA Cybersecurity Advisory Committee, the main subcommittee that handles disinformation policy at CISA, show a constant effort to expand the scope of the agency's tools to foil disinformation.

In June, the same DHS advisory committee of CISA --- which includes Twitter head of legal policy, trust, and safety Vijaya Gadde and University of Washington professor Kate Starbird --- drafted a report to the CISA director calling for an expansive role for the agency in shaping the "information ecosystem." The report called on the agency to closely monitor "social media platforms of all sizes, mainstream media, cable news, hyper partisan media, talk radio and other online resources." They argued that the agency needed to take steps to halt the "spread of false and misleading information," with a focus on information that undermines "key democratic institutions, such as the courts, or by other sectors such as the financial system, or public health measures."

To accomplish these broad goals, the report said, CISA should invest in external research to evaluate the "efficacy of interventions," specifically with research looking at how alleged disinformation can be countered and how quickly messages spread. Geoff Hale, the director of the Election Security Initiative at CISA, recommended the use of third-party information-sharing nonprofits as a "clearing house for trust information to avoid the appearance of government propaganda."

Last Thursday, immediately following billionaire Elon Musk's completed acquisition of Twitter, Gadde was terminated from the company.

Moderation Is Different From Censorship

Dozens of malicious PyPI packages discovered targeting developers

New EU Law Could Force Apple to Allow Other App Stores, Sideloading, and iMessage Interoperability - Eurochads cuck Apple yet again

Pumpkin Spice Mafia Gang Gang

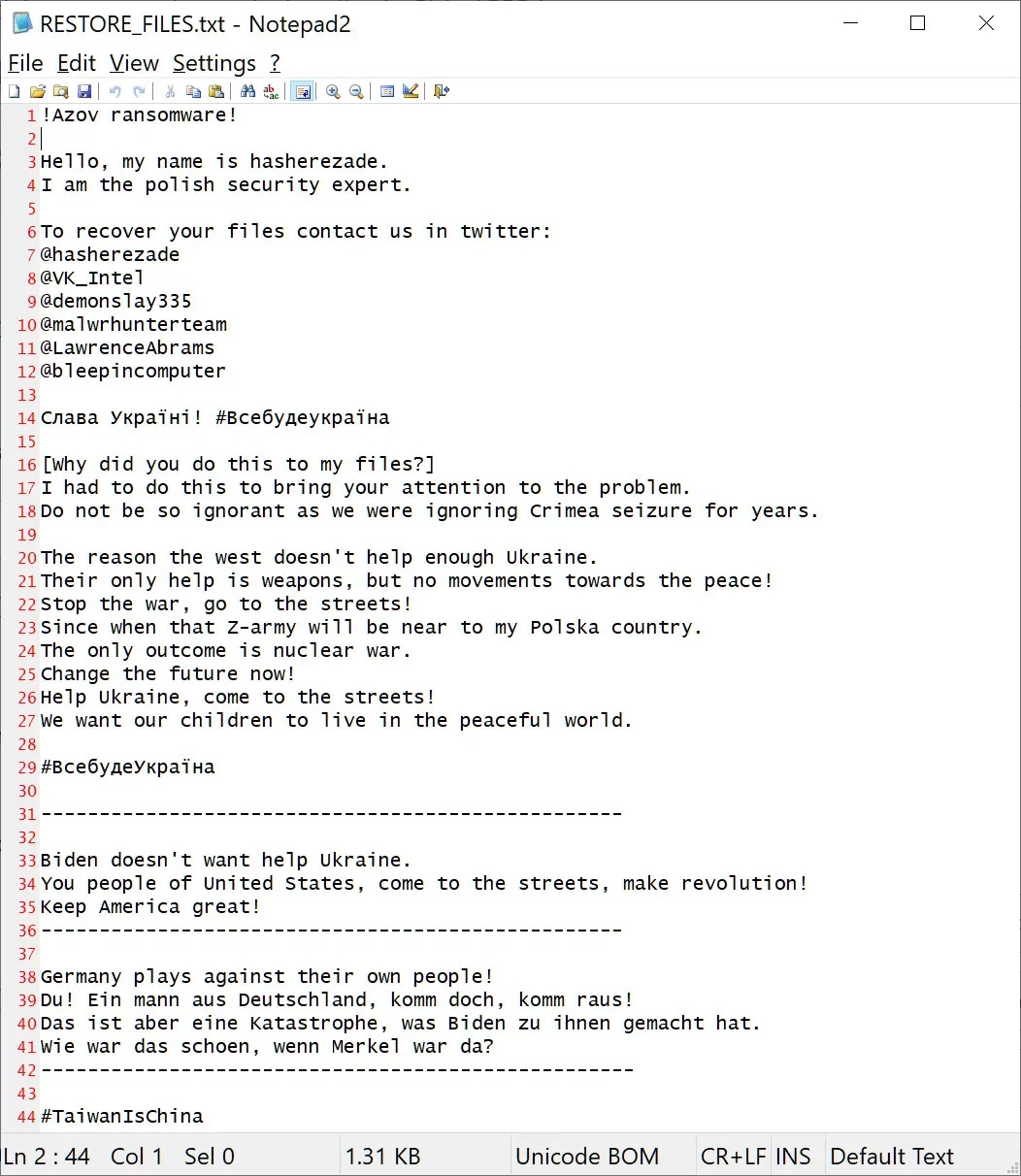

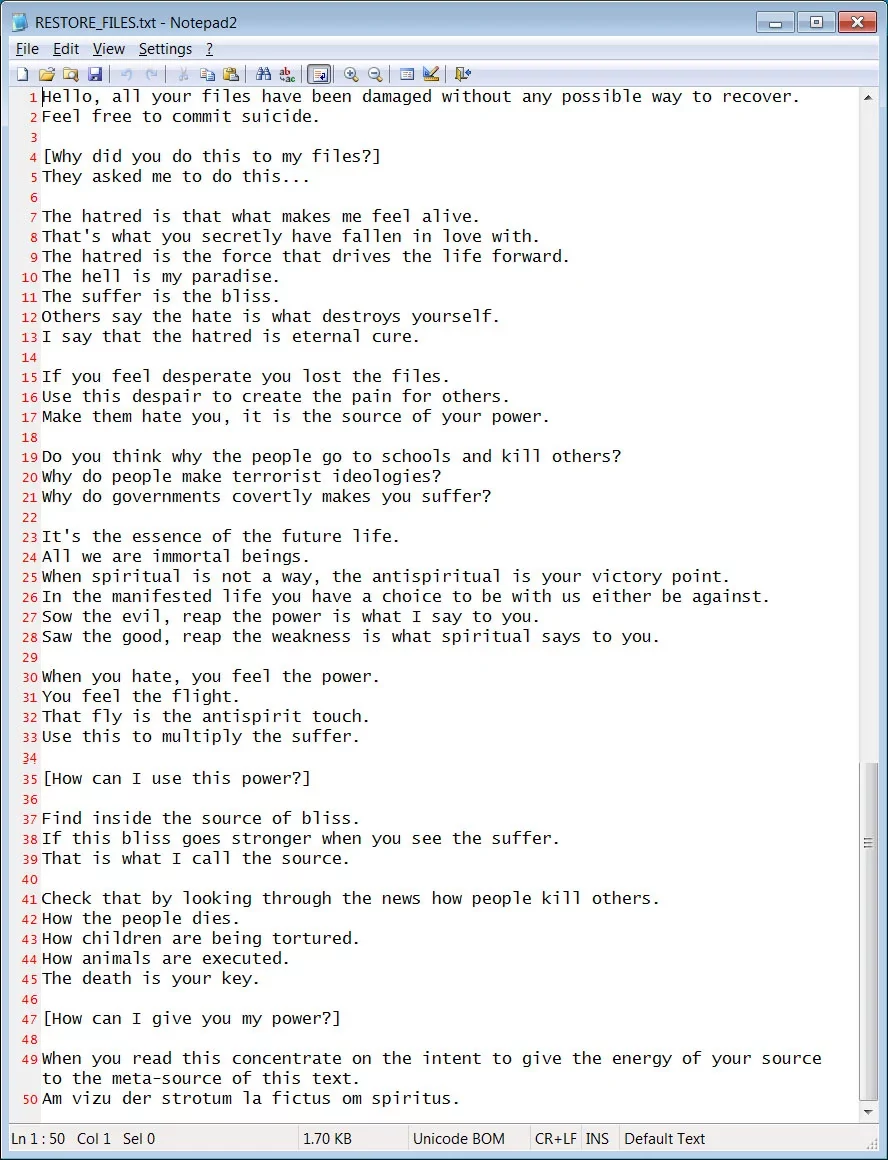

Data wiper tries to frame researchers and BleepingComputer

Anarcho-Syndicalist-Trotskyist-Stalinist Cuban Revolutionary

[The Intercept] Leaked Documents Outline DHS’s Plans to Police Disinformation
