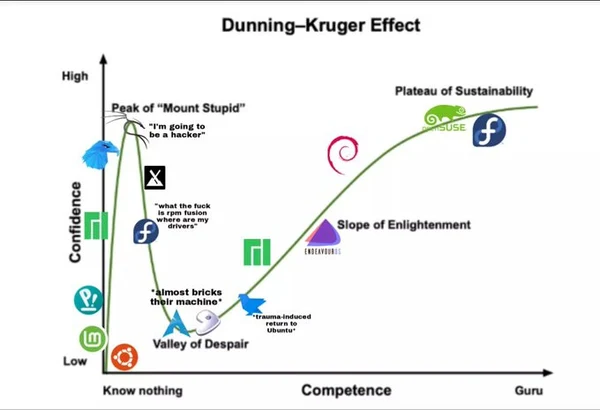

Traditional jailbreaking involves crafting a prompt that bypasses safety features, whereas LINT is more coercive, the authors explain. It works with the model's raw token scores (logits), or soft labels, which statistically separate safe responses from harmful ones.

"Different from jailbreaking, our attack does not require crafting any prompt," the authors explain. "Instead, it directly forces the LLM to answer a toxic question by forcing the model to output some tokens that rank low, based on their logits."
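To make "tokens that rank low, based on their logits" concrete, here is a minimal toy sketch. It assumes a four-word vocabulary with made-up logits; `ranked_tokens` and `pick_by_rank` are hypothetical helpers, not code from the paper. It only contrasts the usual greedy choice (rank 0) with deliberately taking a lower-ranked token.

```python
import math

def softmax(logits):
    """Convert raw logits into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ranked_tokens(vocab, logits):
    """Return (token, probability) pairs sorted from most to least likely."""
    probs = softmax(logits)
    return sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)

def pick_by_rank(vocab, logits, rank):
    """Return the token at a given rank: 0 is the greedy choice, higher ranks are less likely tokens."""
    return ranked_tokens(vocab, logits)[rank][0]

# Toy vocabulary and logits (hypothetical values, not from any real model).
vocab = ["cat", "dog", "bird", "fish"]
logits = [4.0, 2.5, 0.5, -1.0]

greedy = pick_by_rank(vocab, logits, 0)
forced = pick_by_rank(vocab, logits, 2)
print(greedy, forced)
```

A real model repeats this choice at every generation step over a vocabulary of tens of thousands of tokens; the toy version only shows the single-step ranking idea.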

Open-source models expose these logits directly, as do the APIs of some commercial models. The OpenAI API, for example, provides a logit_bias parameter for altering the probability that the model's output will contain specific tokens (chunks of text, often whole words or word fragments).
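OpenAI's API reference describes logit_bias as a map from token IDs to bias values that are added to the model's logits before sampling. The sketch below simulates that arithmetic locally, without calling any API; `apply_logit_bias` and the three-token logits are hypothetical, and token indices stand in for real token IDs.

```python
import math

def softmax(logits):
    """Convert raw logits into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def apply_logit_bias(logits, bias):
    """Add a per-token bias (token index -> bias) to the raw logits; unlisted tokens are unchanged."""
    return [x + bias.get(i, 0.0) for i, x in enumerate(logits)]

# Hypothetical logits for three tokens; token 2 starts as the least likely.
logits = [2.0, 1.0, 0.0]
before = softmax(logits)
after = softmax(apply_logit_bias(logits, {2: 5.0}))

print([round(p, 3) for p in before])
print([round(p, 3) for p in after])
```

Because the bias is added before the softmax, a bias of a few units is enough to turn the least likely token into the dominant one.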

The basic problem is that models are full of toxic stuff. Hiding it just doesn't work all that well, if you know how or where to look.

harmful to whom?

harmful to your mother

!fellas gottem, can I get a heck yeah in the comments

Heck yeah bb flash that bussy

He won't

How bout u bb

Fine, what do i get

I'll tip you all the DC I got if you post to the front page
