Self-Taught Optimizer (STOP): Recursively Self-Improving Code Generation

This is the repo for the paper: Self-Taught Optimizer (STOP): Recursively Self-Improving Code Generation

@article{zelikman2023self,
  title={Self-Taught Optimizer (STOP): Recursively Self-Improving Code Generation},
  author={Eric Zelikman and Eliana Lorch and Lester Mackey and Adam Tauman Kalai},
  journal={arXiv preprint arXiv:2310.02304},
  year={2023}
}

Abstract: Several recent advances in AI systems (e.g., Tree-of-Thoughts and Program-Aided Language Models) solve problems by providing a "scaffolding" program that structures multiple calls to language models to generate better outputs. A scaffolding program is written in a programming language such as Python. In this work, we use a language-model-infused scaffolding program to improve itself. We start with a seed "improver" that improves an input program according to a given utility function by querying a language model several times and returning the best solution. We then run this seed improver to improve itself. Across a small set of downstream tasks, the resulting improved improver generates programs with significantly better performance than its seed improver. Afterward, we analyze the variety of self-improvement strategies proposed by the language model, including beam search, genetic algorithms, and simulated annealing. Since the language models themselves are not altered, this is not full recursive self-improvement. Nonetheless, it demonstrates that a modern language model, GPT-4 in our proof-of-concept experiments, is capable of writing code that can call itself to improve itself. We critically consider concerns around the development of self-improving technologies and evaluate the frequency with which the generated code bypasses a sandbox.
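For readers who want a concrete picture of the seed "improver" the abstract describes, here is a minimal sketch, not the authors' code. The names `seed_improver`, `stub_propose`, and `toy_utility` are hypothetical, and `stub_propose` is a deterministic stand-in for the language model call so the example runs offline.

```python
from typing import Callable, List


def seed_improver(
    program: str,
    utility: Callable[[str], float],
    propose: Callable[[str, int], List[str]],
    n_candidates: int = 4,
) -> str:
    """Return the highest-utility program among the input and the proposals."""
    # In a real setup, `propose` would query a language model several times.
    candidates = propose(program, n_candidates)
    # Keep the original in the pool so the result is never worse than the input.
    return max([program] + candidates, key=utility)


def stub_propose(program: str, n: int) -> List[str]:
    """Hypothetical stand-in for a language model: appends 1..n marker comments."""
    return [program + "\n# tweak" * k for k in range(1, n + 1)]


def toy_utility(program: str) -> float:
    """Toy utility (an assumption): prefers exactly two marker comments."""
    return -abs(program.count("# tweak") - 2)


best = seed_improver("x = 1", toy_utility, stub_propose)
print(best)
```

The self-improvement step in the abstract would correspond to passing the improver's own source code as `program`, with a utility that scores how well a candidate improver does on downstream tasks. That part is omitted here because it needs a real model and a sandbox.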

Surely Microsoft is competent enough to make this work?

Kantorovich and von Mises proved that this would be impossible

lol why would von mises have anything to say about this

They're wrong because they don't have a Chinese (covers all Asian countries) or American sounding name.

Stop slandering von Mises.

Can u link @SexyFartMan69 to something that would explain this in a way an r-slur would understand

@SexyFartMan69 wanna be a janny

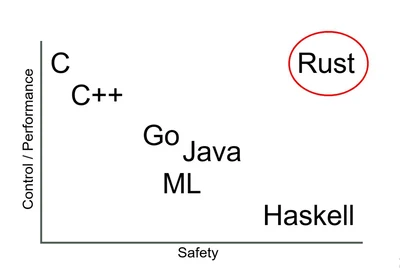

you say you program in rust, but it seems you haven't actually released any software, curious?

Snapshots of https://github.com/microsoft/stop: ghostarchive.org, archive.org, archive.ph

darn the title got me excited thinking this optimizer dropped https://twitter.com/abhi_venigalla/status/1773839199025955030

the paper looks useful as a way to generate synthetic data for training future LLMs, but not particularly standout otherwise. I couldn't find anyone talking about it besides the author

The value of an LLM is the massive amount of training done by the system, so the idea of 'recursive self-improvement' isn't possible. You'd have to let every iteration basically train itself from scratch, which would take years.

Can you stop using LLM when referring to things that aren't @Landlord_Messiah

@Landlord_Messiah wanna be a janny

Do you get pinged every time someone uses the word LLM? Ask Aevann to remove it then. When he asked me if I wanted to be pinged when someone says quad, I said definitely no.

@Landlord_Messiah wanna be a janny

I said the exact opposite.

No @Landlord_Messiah read what you said

@Landlord_Messiah wanna be a janny
