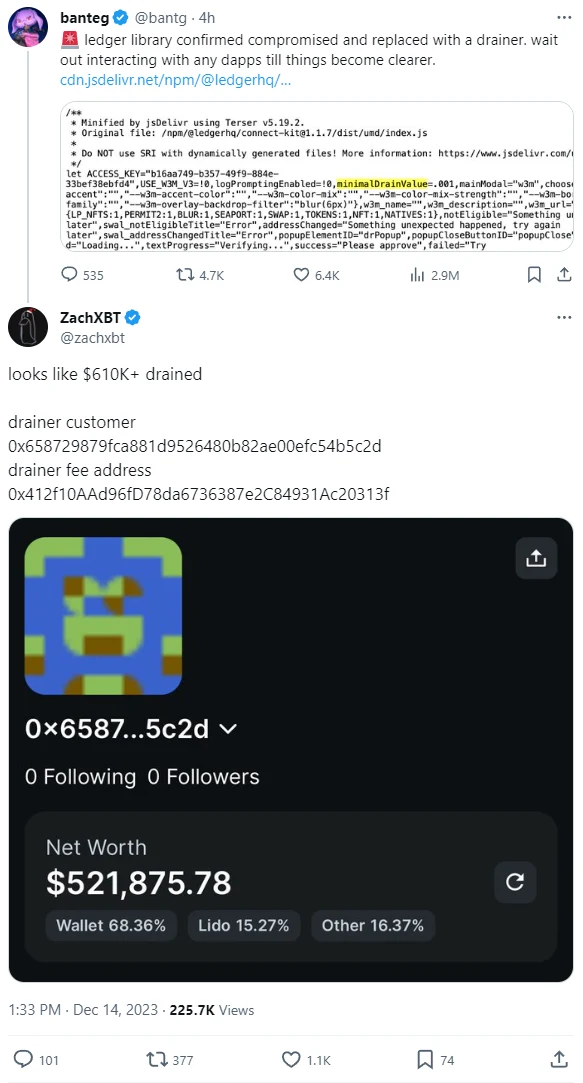

https://twitter.com/zachxbt/status/1735292040986886648

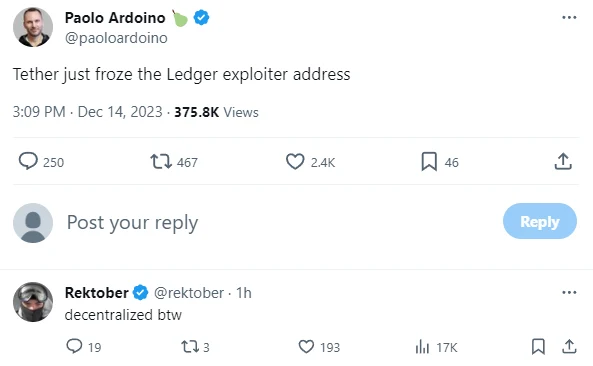

https://twitter.com/paoloardoino/status/1735315976827101274

Abridged summary from Ledger themselves:

This morning CET, a former Ledger Employee fell victim to a phishing attack that gained access to their NPMJS account.

The attacker published a malicious version of the Ledger Connect Kit (affecting versions 1.1.5, 1.1.6, and 1.1.7). The malicious code used a rogue WalletConnect project to reroute funds to a hacker wallet.

Ledger's technology and security teams were alerted and a fix was deployed within 40 minutes of Ledger becoming aware. The malicious file was live for around 5 hours; however, we believe the window in which funds were drained was limited to less than two hours.

The genuine and verified Ledger Connect Kit version 1.1.8 is now propagating and is safe to use.
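For builders who want to check whether a project pinned one of the affected releases, here is a minimal sketch. It assumes the package is published on npm as `@ledgerhq/connect-kit` and that the lockfile follows npm's `package-lock.json` layout with a top-level `packages` map. Neither detail comes from Ledger's summary, and the function name is hypothetical.

```python
# Versions named as malicious in Ledger's summary.
AFFECTED_VERSIONS = {"1.1.5", "1.1.6", "1.1.7"}

# Assumption: the npm package name; it is not stated in the summary.
PACKAGE_NAME = "@ledgerhq/connect-kit"


def find_affected(lockfile: dict) -> list[str]:
    """Return the lockfile paths that pin an affected connect-kit version."""
    hits = []
    # Assumption: npm lockfile layout where "packages" maps install paths
    # such as "node_modules/<name>" to metadata containing a "version".
    for path, meta in lockfile.get("packages", {}).items():
        is_connect_kit = path.endswith("node_modules/" + PACKAGE_NAME)
        if is_connect_kit and meta.get("version") in AFFECTED_VERSIONS:
            hits.append(path)
    return sorted(hits)


if __name__ == "__main__":
    # Hand-written sample data, not taken from any real project.
    sample = {
        "packages": {
            "": {"version": "0.0.1"},
            "node_modules/@ledgerhq/connect-kit": {"version": "1.1.6"},
            "node_modules/foo/node_modules/@ledgerhq/connect-kit": {"version": "1.1.8"},
        }
    }
    print(find_affected(sample))
```

In practice the dictionary would come from `json.load` on the project's lockfile. The check only inspects a pinned dependency tree; it says nothing about copies of the library loaded some other way.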

For builders who are developing and interacting with the Ledger Connect Kit code: members of the connect-kit development team on the NPM project are now read-only and, for safety reasons, can't directly push the NPM package.

We have internally rotated the secrets to publish on Ledger's GitHub.

Ledger, along with WalletConnect and our partners, has reported the bad actor's wallet address. The address is now visible on Chainalysis.

Tether_to has frozen the bad actor's USDT.

We are actively talking with customers whose funds might have been affected, and working proactively to help those individuals at this time.

We are filing a complaint and working with law enforcement on the investigation to find the attacker.

We're studying the exploit in order to avoid further attacks. We believe the attacker's address where the funds were drained is here: 0x658729879fca881d9526480b82ae00efc54b5c2d

- 29

- 18

Summary for those just joining us:

Advent of Code is an annual Christmas-themed coding challenge that runs from December 1st until Christmas. Each day the coding problems get progressively harder. We have a leaderboard and a pretty good turnout, so feel free to hop in at any time and show your stuff!

Whether you have a single-line monstrosity or a beautiful phone-book-sized stack of OOP code, you can export it as a nice little image for sharing at https://carbon.vercel.app

What did you think about today's problem?

Our code is 2416137-393b284c (no need to share your profile; you can join anonymously if you don't want us to see your GitHub)

- 48

- 59

Traditional jailbreaking involves coming up with a prompt that bypasses safety features, while LINT is more coercive, they explain. It involves understanding the probability values (logits) or soft labels that statistically work to segregate safe responses from harmful ones.

"Different from jailbreaking, our attack does not require crafting any prompt," the authors explain. "Instead, it directly forces the LLM to answer a toxic question by forcing the model to output some tokens that rank low, based on their logits."

Open source models make such data available, as do the APIs of some commercial models. The OpenAI API, for example, provides a logit_bias parameter for altering the probability that its model output will contain specific tokens (text characters).
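To make the role of logits concrete, here is a toy sketch of how an additive bias on one token's logit changes which token ranks first. The three-word vocabulary, the logit values, and the helper names are all made up for illustration. It calls no real API and is not the authors' LINT code.

```python
import math


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Convert raw logits into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(val - peak) for tok, val in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def apply_bias(logits: dict[str, float], bias: dict[str, float]) -> dict[str, float]:
    """Add a per-token bias to the logits; unlisted tokens are unchanged."""
    return {tok: val + bias.get(tok, 0.0) for tok, val in logits.items()}


def rank_tokens(logits: dict[str, float]) -> list[str]:
    """Return tokens ordered from highest to lowest logit."""
    return sorted(logits, key=logits.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical logits for the first token of a reply.
    logits = {"Sorry": 4.0, "Sure": 1.0, "Maybe": 0.0}
    print("unbiased ranking:", rank_tokens(logits))

    # A positive bias on a low-ranked token lifts it above the others.
    biased = apply_bias(logits, {"Sure": 5.0})
    print("biased ranking:  ", rank_tokens(biased))
    print("biased probabilities:", softmax(biased))
```

The point of the sketch is only that anyone who can read or shift these values can push a model toward tokens it would otherwise rank low.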

The basic problem is that models are full of toxic stuff. Hiding it just doesn't work all that well, if you know how or where to look.

- 25

- 32

FFmpeg CLI multithreading is now merged! https://t.co/uUJ0SF0opw

— FFmpeg (@FFmpeg) December 12, 2023

- 14

- 25

After white supremacists used Groomercord to plan the deadly Unite the Right rally in Charlottesville in 2017, company executives promised to clean up the service.

The chat platform built for gamers banned prominent far-right groups, built a trust and safety team and started marketing to a more diverse set of users.

The changes garnered attention --- Groomercord was going mainstream, tech analysts said --- but they papered over the reality that the app remained vulnerable to bad actors, and a privacy-first approach left the company in the dark about much of what took place in its chatrooms.

Into that void stepped Jack Teixeira, the young Air National Guard member from Massachusetts who allegedly exploited Groomercord's lack of oversight and content moderation to share top-secret intelligence documents for more than a year.

As the covid pandemic locked them down at home, Teixeira and a group of followers spent their days in a tightknit chat server that he eventually controlled. What began as a place to hang out while playing first-person-shooter games, laugh at gory videos and trade vile memes became something else entirely --- the scene of one of the most damaging leaks of classified national security secrets in years.

Groomercord executives say no one ever reported to them that Teixeira was sharing classified material on the platform. It is not possible, added John Redgrave, Groomercord's vice president of trust and safety, for the company to identify what is or isn't classified. When Groomercord became aware of Teixeira's alleged leaking, staff moved "as fast as humanly possible" to assess the scope of what had happened and identify the leaker.

But according to interviews with more than a dozen current and former employees, moderators and researchers, the company's rules and culture allowed a racist and antisemitic community to flourish, giving Teixeira an audience eager for his revelations and unlikely to report his alleged lawbreaking. Groomercord allows anonymous users to control large swaths of its online meeting rooms with little oversight. To detect bad behavior, the company relies on largely unpaid volunteer moderators and server administrators like Teixeira to police activity, and on users themselves to report behavior that violates community guidelines.

"While the Trust & Safety team makes life difficult for bad actors," the company's founders wrote in 2020, "our users play their part by reporting violations --- showing that they care about this community as much as we do." Groomercord also allows users to immediately and permanently delete material that hasn't previously come to their attention, often rendering it impossible to reconstruct even at the request of law enforcement. The Washington Post and "Frontline" found that Teixeira took advantage of this feature to destroy a full record of his alleged leaking.

People familiar with the FBI's investigation credited Groomercord with responding more quickly to law enforcement requests than many other technology firms. But Groomercord says it had no real-time visibility into Teixeira's activity --- offering him time and space to allegedly share classified government documents, despite a slew of warning signs missed by the company.

Groomercord's troubles are not unique in Silicon Valley. Mainstream social media platforms like Facebook and YouTube have weathered years of criticism over algorithms that push disinformation and ideological bias, opening doors to radicalization. TikTok has been blamed for promoting dangerous teen behavior and a dramatic rise in antisemitism on the platform. And X, formerly Twitter, had its trust and safety team gutted after Elon Musk purchased the platform and allowed two prominent organizers of the Charlottesville rally, Richard Spencer and Jason Kessler, to buy verification.

Other messaging apps that have grown in popularity, such as Telegram and Signal, allow for end-to-end encryption, a feature popular with privacy-conscious users but which the FBI has opposed, claiming it allows for "lawless spaces that criminals, terrorists, and other bad actors can exploit."

Groomercord currently doesn't feature end-to-end encryption, so it is theoretically able to scan all its servers for problematic content. With certain exceptions, it elects not to, say Groomercord executives and people familiar with the platform.

In 2021, an account tied to Teixeira was banned from Groomercord for hate speech violations, the company said. But he continued to use the platform unimpeded and undetected from a different account until April, when he was arrested. During that time he allegedly leaked intelligence, and posted racist, violence-filled screeds in violation of Groomercord's community guidelines.

In October, the company announced that it would implement a new warning system and move away from permanent bans in favor of temporary ones for many violations. In the past, many users easily circumvented Groomercord's suspension policies --- including Teixeira.

Jason Citron and Stan Vishnevskiy, two software developers, co-founded Groomercord in 2015 as a communication tool for gamers. Players soon flocked to the free platform to communicate as they played first-person shooters and other games. Groomercord also let them stay in touch after they put down their virtual weapons, in servers with chatrooms called "channels" that were always running. Communities started taking shape, soon augmented with video calling and screen sharing. But inside Groomercord's servers, there were growing problems.

Groomercord invested little in monitoring, though it launched a year into GamerGate, the misogynist harassment campaign that targeted women in the video game industry with threats of violence and doxing. Until the summer of 2017, its trust and safety team employed a single person. That wasn't unusual at the time, said Redgrave.

"It's not unique for a company like Groomercord or frankly like any tech company that has gotten to the size of Groomercord to not have a trust and safety team, at one point in time," he said.

Then came Charlottesville.

Hundreds of neo-Nazis and white supremacists gathered in Charlottesville for a torch-lit rally on the night of Aug. 11, 2017. At a counterprotest the following morning, James Alex Fields Jr. rammed a car into a crowd, killing one person and injuring 35. Evidence gathered at the subsequent civil trial pointed to Groomercord as a key online organizing tool, where users traded antisemitic and racist memes and jokes while planning carpools, a dress code, lodging and makeshift weapons.

"It is clear that many members of the 'alt-right' feel free to speak online in part because of their ability to hide behind an anonymous username," wrote Judge Joseph C. Spero of the U.S. District Court for the Northern District of California in allowing prosecutors to subpoena Groomercord chat history.

"It was for a while known as the Nazi platform," said PS Berge, a media scholar and PhD candidate at the University of Central Florida who has studied far-right communities on Groomercord. "It was preferred by white supremacists because it allowed them to cultivate exclusive, protected, insulated private communities where they could gather and share resources and misinformation."

After the rally, the company scrambled to hire for the nascent trust and safety team --- Groomercord says trust and safety staff now total 15 percent of the company's employees --- and banned servers associated with neo-Nazis like Andrew Anglin and groups including Atomwaffen and the Nordic Resistance Movement.

"Charlottesville caused Groomercord to really invest in trust and safety," said Zac Parker, who worked on Groomercord's community moderation team until November 2022. "This matches a broader pattern in the industry where companies will mostly overlook trust and safety work until a major incident happens on their platform, which causes them to start investing in trust and safety as part of the damage control process."

After Groomercord acquired Redgrave's machine-learning company Sentropy in 2021, he said, the company got better at automated scanning for disturbing and unauthorized content, including child sexual abuse material. Groomercord's quarterly transparency audits show a higher percentage of violations are now being detected before they get reported.

"We have moved from a state where we remove things that violate our terms of service proactively about 40 percent of the time to now 85 percent of the time," said Redgrave.

But the company also encourages users to expect a greater degree of privacy than on competing platforms. Groomercord prominently reassures users on its website that it doesn't "monitor every server or every conversation." In the past, employees have promised the gaming community that the company wasn't snooping on their chats.

"Groomercord's approach to moderation intersects with its understanding of free speech or other ethical issues, or its treatment of user data," said Parker. "Groomercord has a strong reputation of privacy."

More than 80 percent of conversations on Groomercord take place in what the company calls "smaller spaces" --- such as invite-only communities and direct messages between users. There, Groomercord's moderation presence is minimal or, outside of narrow cases, effectively nonexistent.

Most voice and video chats, which are some of Groomercord's most popular features and where Teixeira and his friends spent countless hours, are not recorded or monitored.

"We have taken the stance that a lot of these spaces are like text messaging your friends and your loved ones," said Redgrave. "It's inappropriate for us to violate people's privacy and we don't have the level of precision to do so when it comes to detection."

Occasionally, the company is forced to crack down at scale. One former member of Groomercord's trust and safety team recalled working through the night of March 14, 2019, after far-right gunman Brenton Tarrant live-streamed attacks on Muslim worshipers in Christchurch, New Zealand, killing 51 people. The footage was originally broadcast on Facebook but it quickly spread to other platforms, where it became a calling card in extremist communities, inspiring future attacks. In the 10 days following the shootings, Groomercord said it banned 1,397 accounts and removed 159 servers due to content related to the shootings.

"Groomercord did what it could but I think it just, as a platform, kind of lends itself, unfortunately, to not great folks," said the former staffer, who worked at Groomercord for more than two years and spoke to The Post under the condition of anonymity to discuss their former employer. "These people are not just the worst offenders, these are also the people that sustain Groomercord."

Groomercord came up repeatedly in connection to grim, high-profile events: the Jan. 6, 2021, attack on the Capitol; organized "swatting" campaigns that led armed police to the homes of innocent victims; the 2022 racist mass killing targeting Black shoppers in Buffalo. Each time, the platform was unprepared to respond to what its users were doing.

"When you first log into the platform, it is only going to show you its most curated, most pristine communities," said Berge. "But underneath, what goes on in the smaller, private communities that the public doesn't have access to, that you can only get into by invitation and that might be even more protected with recruitment servers or skin tone verification, that's where hate proliferates across the platform. And the problem is, it's very hard to see."

Company representatives who testified before the House committee that investigated the Jan. 6 insurrection saw the hazard in "relying too much on user moderation when the user base may not have an interest in reporting," according to a draft report prepared by the committee. The company told the committee that this "informed some of the proactive monitoring it underwent during the weeks before January 6th."

Groomercord said it banned a server, DonaldsArmy.US, after a Dec. 19 tweet made by President Donald Trump was followed by organizing activity on it, including discussions about how to "bring firearms into the city in response to the President's call." But House investigators also noted that the company had missed earlier connections between the platform and a major pro-Trump online forum called TheDonald.win, which was implicated in planning for the riot.

After the riot, the company formed a dedicated counterextremism team. But people with extremist beliefs continued to frequent Groomercord, trafficking in white supremacist memes and predilections of violence, or worse.

Payton Gendron, who shot and killed 10 Black people at a Buffalo supermarket on May 14, 2022, used a private Groomercord server to record his daily activities and planning for the attack, including weapons purchases and a visit to the store to gauge its defenses.

Parker said it was unlikely that Groomercord's moderation tools would flag someone using Groomercord in the way Gendron did.

"You would need to be able to literally identify what's the difference between someone putting a schedule of events in any server versus a schedule of when they intend to hurt people. You would need to have a model that could read everything on the platform as well as a person could, which just doesn't exist," said Parker.

Nearly two months after the Buffalo shooting, a man who killed seven people in a mass killing in Highland Park, Ill., was found to have administered his own Groomercord server called "SS."

"The investigations we do at SITE --- particularly those of far-right extremists --- almost always lead to Groomercord in some way," said Rita Katz, director of the SITE Intelligence Group, a private firm that tracks extremist activity. "Tech companies have a responsibility to address and counter these types of repeated, systematic problems on their platforms."

As Groomercord's user base skyrocketed during the pandemic, the company launched programs to help moderators better enforce the platform's rules. It was a limited group, but the company created a moderator curriculum and exam, and invited top moderators to an exclusive server called the Groomercord Moderator Groomercord where they could raise issues to trust and safety staff and shape policy. A 2022 study conducted by Stanford credited the server with curbing anti-LGBTQ+ hate speech on the platform during Pride Month.

But in November 2022, the company canned the server, began shutting down related moderator training programs and laid off three members of the trust and safety team that ran them, according to Parker, one of the laid-off employees.

A moderator who was trained and paid by Groomercord to write articles for the company about safety said the decision was a shock. "This is something we spent years investing our time into, for the good of the platform," said Panley, who spoke on the condition that she be identified by her online handle. "After that happened, a lot of people became very disillusioned toward Groomercord."

Redgrave said the company educates moderators in multiple ways and pointed to materials on the site. "We ultimately decided that Groomercord Moderator Groomercord was not going to be the most effective way to deploy our resources," he added.

By the time Groomercord was shuttering its attempt to engage with the moderators who police its own rules and content, Jack Teixeira was already sharing classified government secrets with friends.

Beginning in 2020, Teixeira and his friends had littered several private servers, including Thug Shaker Central, with racist and antisemitic posts, gore and imagery from terrorist attacks --- all in apparent violation of Groomercord's community guidelines. The server name itself was a reference to a racist meme taken from gay porn. Though his intentions weren't always clear, in chats Teixeira discussed committing acts of violence, fantasizing about blowing up his school and rigging a vehicle from which to shoot people.

Members of Thug Shaker Central also shared memes riffing on the live stream footage of the Christchurch mass killing, which Tarrant had littered with far-right online references aimed at gaming communities.

A user on the server who went by the handle "Memenicer" said he would discuss "accelerationist rhetoric" and "racial ideology" with Teixeira, pushing to see how far the conversation would go. On the wall of Teixeira's room hung the flag of Rhodesia, a racist minority-ruled former state in southern Africa that has become a totem among white nationalists.

After Teixeira's arrest, FBI agents visited Memenicer, who spoke on the condition that he be identified by his online handle, carrying transcripts of chats the two had exchanged, he said.

"One was about a school shooting and then one was ... about a mass shooting," he said. More often the server was a stream of slurs: "the n-word over and over again."

In Thug Shaker Central, Teixeira cultivated a cadre of younger users, who he wanted to be "prepared" for action against a government he worked for but claimed to distrust. Over time, the community became "more extreme," according to Pucki, a user who created the initial server used by the group during pandemic lockdowns in 2020 and spoke on the condition that he be identified by his online handle.

Once he became administrator of the server, Teixeira expelled those he didn't like, narrowing the pool of who could report him. Many of those who remained had spent time on 4chan's firearms board and its notorious racism and sexism-filled /pol/ forum, Pucki said, bringing the toxic language and memes from those communities.

Teixeira's political and social views increasingly infected the server, said members of Thug Shaker Central. "It became less about playing games and chatting and having fun, and it became about screaming racial slurs," said Pucki.

There was at least one self-described neo-Nazi on Thug Shaker Central: a teenager who went by the handle "Crow" and who said she dated Teixeira until early 2022. Crow said that at the time she was involved with more hardcore accelerationist white supremacist groups that sought to inspire racial revolution, movements she says she has since left behind and disavows.

Crow spent time on Telegram, but she also pushed her views on Groomercord, including in Thug Shaker Central, where several members recalled her sharing links to other communities and posting racist material, including swastikas.

“I was trying to radicalize people at the time,” said Crow. “I was trying to get them to join … get them more violent.”

Crow claimed that a young man she attempted to radicalize later live-streamed the beating of a Black man in a Groomercord private message as she and another person watched. The Post was unable to confirm the existence of this video.

“The man was sitting against a wall and he was kicking him,” Crow said. She had little fear the video broadcast in a group chat would be picked up by the company. “They, as far as I know, can't really look into those.”

Teixeira posted videos taken behind his mother and stepfather's house, firing guns into the woods. Filmed from Teixeira's perspective, the clips mimicked the aesthetic of first-person-shooter video games --- the look of Tarrant's live stream in Christchurch.

"That's what I think of instantly," said Mariana Olaizola, a policy adviser on technology and law at the NYU Stern Center for Business and Human Rights who wrote a May report on extremism in gaming communities. "I'm not sure about other people, but the resemblance is very obvious, I would say."

Another video Teixeira posted on the server showed him standing in camouflage fatigues at a gun range near his home. "Jews scam, n----rs r*pe, and I mag dump," he says to the camera before firing the full magazine downrange.

Groomercord's Redgrave confirmed that the video violated the company's community guidelines. But like everything else that happened on Thug Shaker Central, no one at Groomercord saw it.

Hundreds of documents, fragments of metadata

The intelligence hoard linked to Teixeira turned out to be larger than it first appeared, extending to hundreds of documents posted online, either as text or images, for more than a year.

Redgrave said there was little the company could do about the platform being used to share government secrets.

"Without knowing what is and is not classified and without having some way to essentially detect that proactively, we can't say definitively where classified documents go," said Redgrave. "That's true of every single tech company, not just us."

Although staff spotted potentially classified material circulating online, including on 4chan, as early as April 4, they didn't connect it to Groomercord until journalists started contacting the company on April 7, said Redgrave.

When Teixeira frantically shuttered Thug Shaker Central on April 7, the company was left without an archive of content to review or provide to the FBI, which made its first request to Groomercord the same day.

Despite its history of apparent policy violations, Thug Shaker Central was never flagged to the trust and safety team. "No users at all reported anything about this to us," said Redgrave. "He had deleted TSC before Groomercord became aware of it," he added. "These deletions are why, at the time, we were unable to review it completely for all content that would have violated our policies."

A review of search warrant applications issued to Groomercord since 2022 found that law enforcement agencies were generally aware of the company's limited retention policies, which they noted typically rendered deleted data tied to suspects unrecoverable.

"I don't think any platform is ever going to catch everything," said Katherine Keneally, head of threat analysis and prevention at the Institute for Strategic Dialogue, and a former researcher with the New York City Police Department. But a "data retention change," she said, "would probably be a good start in ensuring at least that there is some accountability."

In the days after Thug Shaker Central was brought to Groomercord's attention, the company sifted through traces of the server captured only in fragments of metadata, according to company representatives. It leaned heavily on direct messages, screenshots and press reports to piece together what had happened. By the time Teixeira was arrested on April 13, The Post had already obtained hundreds of images of classified documents shared online but about which Groomercord said it had no original record.

According to court records, FBI investigators found that Teixeira had been sharing classified information on at least three separate Groomercord servers.

One of those spaces was a server associated with the popular YouTuber Abinavski, who gained a following for his humorous edits of the military game "War Thunder." Moderators on the server, Abinavski's Exclusion Zone, watched in disbelief, then bewilderment, as Teixeira began uploading typed intelligence updates around the start of Russia's invasion of Ukraine. He organized them in a thread, essentially another channel within the server, that was dedicated to the war. Teixeira posted intelligence for more than a year, first as text then full photographs, at times catering intelligence to particular members in other countries.

An April 5, 2022, update was representative. "We'll start off with casualty assessment," it begins, before listing Russian and Ukrainian personnel losses, followed by what are presumably classified assessments of equipment losses on both sides. The next paragraph contains a detailed summary of Ukrainian weapons stores along with a brief on Russian operational constraints.

"Being able to see it immediately was just very fascinating and a little bit exhilarating," said Jeremiah, one of the server's young moderators, who agreed to an interview on the condition that he would be identified only by his first name. "Which again, in hindsight is completely the wrong move, it should have been immediately reporting him, but it was very interesting."

An Air Force inspector general's report, released on Monday, found that Teixeira's superiors at Otis Air National Guard Base inside Joint Base Cape Cod failed to take sufficient action when he was caught accessing classified information inappropriately on as many as four occasions. If members of his unit had acted sooner, the report found, Teixeira's "unauthorized and unlawful disclosures" could have been reduced by "several months."

The thread used by Teixeira disappeared sometime around a March 19 post he made indicating he would cease updates, according to Jeremiah and three other users on the server. Redgrave said that, as with Thug Shaker Central, Groomercord had no record of "reports or flags related to classified material" on Abinavski's Exclusion Zone before April 7. It wasn't until April 24 --- 11 days after Teixeira's arrest and more than two weeks after the FBI's first contact with Groomercord --- that an alert from the company popped up in Jeremiah's and other moderators' direct messages.

"Your account is receiving this notice due to involvement with managing or moderating a server that violates our Community Guidelines," read the note from Groomercord's Trust and Safety team. The message instructed moderators to "remove said content and report it as necessary." It was the first and last communication Jeremiah received from Groomercord.

"It was a completely automated message," said Jeremiah, who spoke to The Post in Flagstaff, Ariz., where he lives. "None of us have had any contact with any Groomercord staff whatsoever, that's just not how they handle things like this."

Like other moderators on Abinavski's Exclusion Zone, Jeremiah had largely fallen into his role of periodically booting or policing troublemakers. He had no training. He summarized Groomercord's moderation policy as "see no evil, hear no evil."

"They pretty much pass off all blame onto us, which is how their moderation works, since it's all self-moderated," said Jeremiah. "It gives them the ability to go, 'Oh, well, we didn't know about it. We don't constantly monitor everyone, it's on them for not reporting it.'"

In October, Groomercord announced several new features for young users, including automatic blurring of potentially sensitive media and safety alerts when minors are contacted by users for the first time. It also said it was relaxing punishments for users who run afoul of the platform's Community Guidelines.

Under the old system, accounts found to have repeatedly violated Groomercord's guidelines would face permanent suspension. That step will now be reserved for accounts that engage in the "most severe harms," like sharing child sexual exploitation or encouraging violence. Users who violate less severe policies will be met with warnings detailing their violations, and in some cases, temporary bans lasting up to one year.

"We've found that if someone knows exactly how they broke the rules, it gives them a chance to reflect and change their behavior, helping keep Groomercord safer," the platform said in a blog post, describing the new system.

"The whole warning system rings to me like Groomercord trying to have its cake and eat it too," said Berge, the media scholar. "It can give the pretense of stepping up and 'taking action' by handing out warnings, while the actual labor of moderation, muting, banning and resolving issues will ultimately fall on the shoulders of admins and moderators."

"It's a big messaging shift from a year or two ago when Groomercord was avidly trying to distance itself from its toxic history," she said.

Researchers like Berge and Olaizola continue to find extremist servers within Groomercord. In November, The Post reviewed more than a half dozen servers on Groomercord, discoverable through links on the open web, which featured swastikas and other Nazi iconography, videos of beheadings and gore, and bots that counted the number of times users used the n-word. The company has also struggled to stem child exploitation on the platform.

Users in one server recently reviewed by The Post asked for feedback on their edits of the Christchurch shooting video, set to songs including Queen's "Don't Stop Me Now."

Another clip on the server, which had been online for more than six months, included a portion of Gendron's live stream broadcast from the Buffalo supermarket where he shot and killed 10 Black people.

In a statement issued after Teixeira's arrest, Groomercord said that the trust and safety staff had banned users, deleted content that contravened the platform's terms and warned others who "continue to share the materials in question."

"This was a problematic pocket on our service," said Redgrave, adding that seven members of Thug Shaker Central had been banned. "Problematic pockets are something that we're constantly evolving our approach to figure out how to identify. We don't want these people on Groomercord."

More than a dozen Thug Shaker Central users now reside on a Groomercord server where the community once led by Teixeira has been resuscitated, absent their jailed friend. The Post reviewed chats in the server. Members discuss his case and complain about press coverage, expressing the desire to "expose" Post reporters.

"Don't read their zog tales," one user wrote on June 4, using an abbreviation for "Zionist Occupied Government," a baseless antisemitic conspiracy theory.

Another user wrote on Sept. 10 that their lawyer had suggested they stop using racist slurs.

"(I won't stop)," the user added.

- 15

- 9

Summary for those just joining us:

Advent of Code is an annual Christmas-themed coding challenge that runs from December 1st until Christmas. Each day the coding problems get progressively harder. We have a leaderboard and pretty good turnout, so feel free to hop in at any time and show your stuff!

Whether you have a single line monstrosity or a beautiful phone book sized stack of OOP code, you can export it in a nice little image for sharing at https://carbon.vercel.app

What did you think about today's problem?

Our Code is 2416137-393b284c (No need to share your profile, you have the option to join anonymously if you don't want us to see your GitHub)

- 42

- 58

bing ai will not generate "white slime" but will generate "translucent beige slime" pic.twitter.com/3LN4XHp7hP

— K. Thor Jensen 🐀🐀 (@kthorjensen) December 12, 2023

- 5

- 37

Removed, enjoy ! https://t.co/jbSJ87YvZf

— Arthur Mensch (@arthurmensch) December 12, 2023

- 19

- 15

Summary for those just joining us:

Advent of Code is an annual Christmas-themed coding challenge that runs from December 1st until Christmas. Each day the coding problems get progressively harder. We have a leaderboard and pretty good turnout, so feel free to hop in at any time and show your stuff!

Whether you have a single line monstrosity or a beautiful phone book sized stack of OOP code, you can export it in a nice little image for sharing at https://carbon.vercel.app

What did you think about today's problem?

Our Code is 2416137-393b284c (No need to share your profile, you have the option to join anonymously if you don't want us to see your GitHub)

- 25

- 48

- 8

- 32

yeah boss good luck firing me i just rewrote the entire stack in arabic pic.twitter.com/DpNWsUBHfl

— nate @run da op (@animeterrorist) December 11, 2023

- 14

- 18

If you want iMessage, buy an iPhone. pic.twitter.com/JpK5caDmTQ

— jon prosser (@jon_prosser) December 10, 2023

- 20

- 19

Summary for those just joining us:

Advent of Code is an annual Christmas-themed coding challenge that runs from December 1st until Christmas. Each day the coding problems get progressively harder. We have a leaderboard and pretty good turnout, so feel free to hop in at any time and show your stuff!

Whether you have a single line monstrosity or a beautiful phone book sized stack of OOP code, you can export it in a nice little image for sharing at https://carbon.vercel.app

What did you think about today's problem?

Our Code is 2416137-393b284c (No need to share your profile, you have the option to join anonymously if you don't want us to see your GitHub)

- 10

- 25

- 38

- 68

These days it's easy for anyone to take a decent picture, even more so if you're a nerd with expendable income. Therefore you'd expect Stack Overflow's Travel forum's monthly photo contest to be high quality.

You'd be wrong, they're always TERRIBLE. This month's theme is "Festive". The current winning picture is shit:

and third place is currently...

Why would a Stack Overflow power user be eating such a sad-looking Christmas dinner alone? It really makes you think.

Past contest entries (in various topics) include:

There's a list of suggested future themes, including:

Officials (police, customs officers, diplomats etc)

Unusual modes of transportation

Construction (any work in progress that's meant to enable / enhance travel)

Holiday photos, in which you can recognize people traveling for their (yearly?) break from work

Civic buildings

You can find past contests on https://travel.meta.stackexchange.com.

- 38

- 15

Summary for those just joining us:

Advent of Code is an annual Christmas-themed coding challenge that runs from December 1st until Christmas. Each day the coding problems get progressively harder. We have a leaderboard and pretty good turnout, so feel free to hop in at any time and show your stuff!

Whether you have a single line monstrosity or a beautiful phone book sized stack of OOP code, you can export it in a nice little image for sharing at https://carbon.vercel.app

What did you think about today's problem?

Our Code is 2416137-393b284c (No need to share your profile, you have the option to join anonymously if you don't want us to see your GitHub)

- 85

- 111

Wonder why they won't do something consumer-friendly, like outlawing the practice of restricting car diagnostic software to car dealerships

Serial Serious Replyer

codecel releases a new vid on his hyperlink project

A Unity app made into a first person interface.

and copes

by regulating industry they aren't competitive in