https://www.cbsnews.com/news/google-ai-chatbot-threatening-message-human-please-die/

I thought journos were making this up, but here's the chat log:

https://gemini.google.com/share/6d141b742a13

How did this happen?

Can chatbots get possessed by dead things that wander the earth? Did the guy secretly steering the AI pull a scary prank?


Mentioned this before, but my theory is that it's from prompt padding.

Behind the scenes the LLM is fed a bunch of crap like "You are an artificial intelligence assistant, do this, do that, don't be racist." I think it got tripped up by this (Google probably implemented it poorly, like everything else relating to Gemini) and thought it was supposed to act like a malevolent sci-fi AI.
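For anyone who hasn't seen what prompt padding looks like, here's a rough sketch of the idea. This is not Gemini's actual setup: the real system prompt isn't public, and `SYSTEM_PROMPT`, `build_model_input`, and the `[role]` tags are all made up for illustration. The point is only that the model sees hidden instructions glued in front of whatever you typed.

```python
# Hypothetical hidden instructions. The real Gemini system prompt is not
# public; this text is invented purely for illustration.
SYSTEM_PROMPT = (
    "You are an artificial intelligence assistant. "
    "Be helpful. Do not be racist."
)


def build_model_input(history, user_message, system_prompt=SYSTEM_PROMPT):
    """Return the full text a model would see for one turn.

    history is a list of (role, text) pairs from earlier in the chat.
    The [role] tag format is an assumption, not any vendor's real format.
    """
    lines = [f"[system] {system_prompt}"]      # hidden padding goes first
    for role, text in history:
        lines.append(f"[{role}] {text}")       # earlier turns, in order
    lines.append(f"[user] {user_message}")     # what the user just typed
    lines.append("[assistant]")                # the model continues from here
    return "\n".join(lines)


prompt = build_model_input(
    [("user", "Hi"), ("assistant", "Hello!")],
    "Help with my homework",
)
print(prompt)
```

The user only ever sees their own messages and the replies, so if the model misreads the hidden part, the output can look like it came out of nowhere.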

Jump in the discussion.

No email address required.

More options

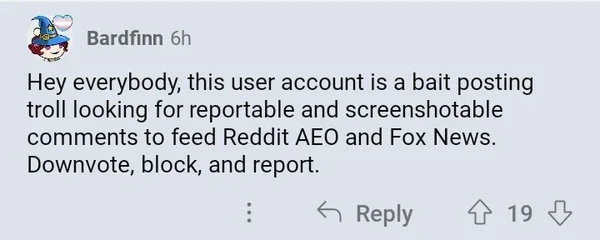

Context