Who could have possibly seen this coming? AI image generators have been used to create non-consensual pornography of celebrities, but why would that same user base dare abuse a free AI text-to-speech deepfake voice generator?

UK-based ElevenLabs first touted its Prime Voice AI earlier this month, but just a few weeks later it may need to rethink its entire model after reports of a slew of users creating hateful messages using real-world voices. The company released its first text-to-voice open beta system on Jan. 23. Developers promised the voices match the style and cadence of an actual human being. The company's "Voice Lab" feature lets users clone voices from small audio samples.

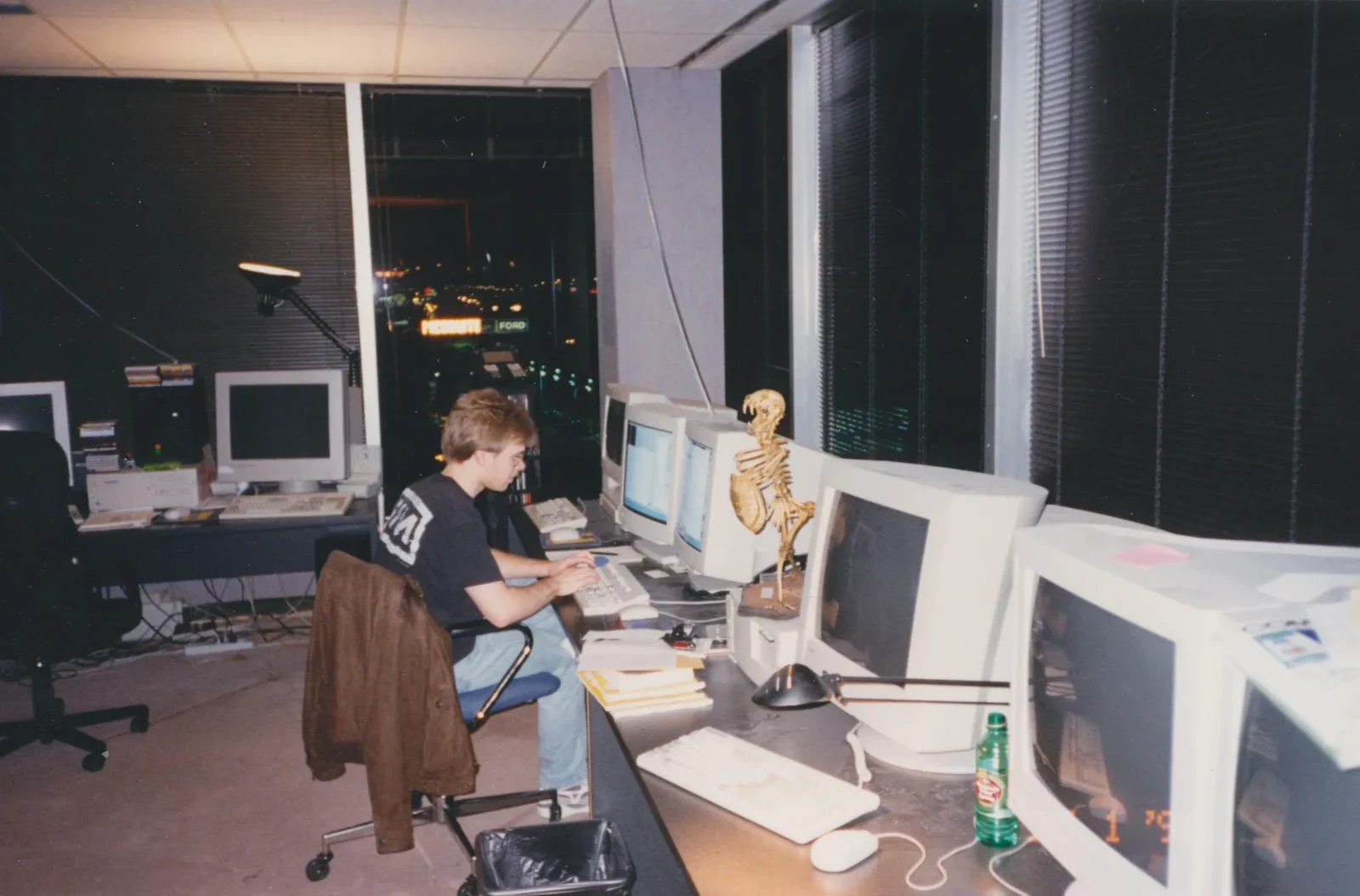

Motherboard first reported Monday about a slew of 4Chan users who were uploading deepfaked voices of celebrities or internet personalities, from Joe Rogan to Robin Williams. One 4Chan user reportedly posted a clip of Emma Watson reading a passage of Mein Kampf. Another user had a voice reportedly sounding like Justin Roiland's Rick Sanchez from Rick & Morty talk about how he was going to beat his wife, an obvious reference to current allegations of domestic abuse against the series co-creator.

On one 4Chan thread reviewed by Gizmodo, users posted clips of the AI spouting intense misogyny or transphobia using voices of characters or narrators from various anime or video games. All this is to say, it's exactly what you would expect the armpit of the internet to do once it got its hands on easy-to-use deepfake technology.

On Monday, the company tweeted that they had a "crazy weekend" and noted while their tech was "being overwhelmingly applied to positive use," developers saw an "increasing number of voice cloning misuse cases," but didn't name a specific platform or platforms where the misuse was taking place.

ElevenLabs offered a few ideas on how to limit the harm, including introducing some account verification, which could include a payment up front, or even dropping the free version of Voice Lab altogether, which would then mean manually verifying each cloning request.

Last week, ElevenLabs announced it received $2 million in pre-seed funding led by Czech Republic-based Credo Ventures. The little AI-company-that-could was planning to scale up its operation and use the system in other languages as well, according to its pitch deck. This admitted misuse is a sharp turn for developers who were very bullish on where the tech could go. The company spent the past week promoting its tech, saying its system could reproduce Polish TV personalities. The company further promoted the idea of putting human audiobook narrators out of a job. The beta website talks up how the system could automate audio for news articles, or even create audio for video games.

The system can certainly mimic voices pretty well, just based on the voices reviewed by Gizmodo. A layperson may not be able to tell the difference between a faked clip of Rogan talking about porn habits and an actual clip from his podcast. Still, there's definitely a robotic quality to the sound that becomes more apparent on longer clips.

Gizmodo reached out to ElevenLabs for comment via Twitter, but we did not immediately hear back. We will update this story if we hear more.

There are plenty of other companies offering their own text-to-voice tools, but while Microsoft's similar VALL-E system is still unreleased, other smaller companies have been much less hesitant to open the door for anybody to abuse their tech. AI experts have told Gizmodo this push to get things out the door without an ethics review will continue to lead to issues like this.

There's a pasta in here somewhere https://old.reddit.com/r/skeptic/comments/10pfc4p/ai_voice_simulator_easily_abused_to_deepfake/j6l4vlp/

Isn't this pearl-clutching gibberish in response to this lol?

https://vocaroo.com/13cVykobml8D

Literal terrorism

He's right, but not the way he put it. Voice synthesizer tech will 100% be used for terrorism; there are so many applications that could result in death, disruption or at least demoralization. No, I won't post them (frick you glowies)
