Since I've now listened to one (1) entire talk on AI, I am the world's foremost leading expert.

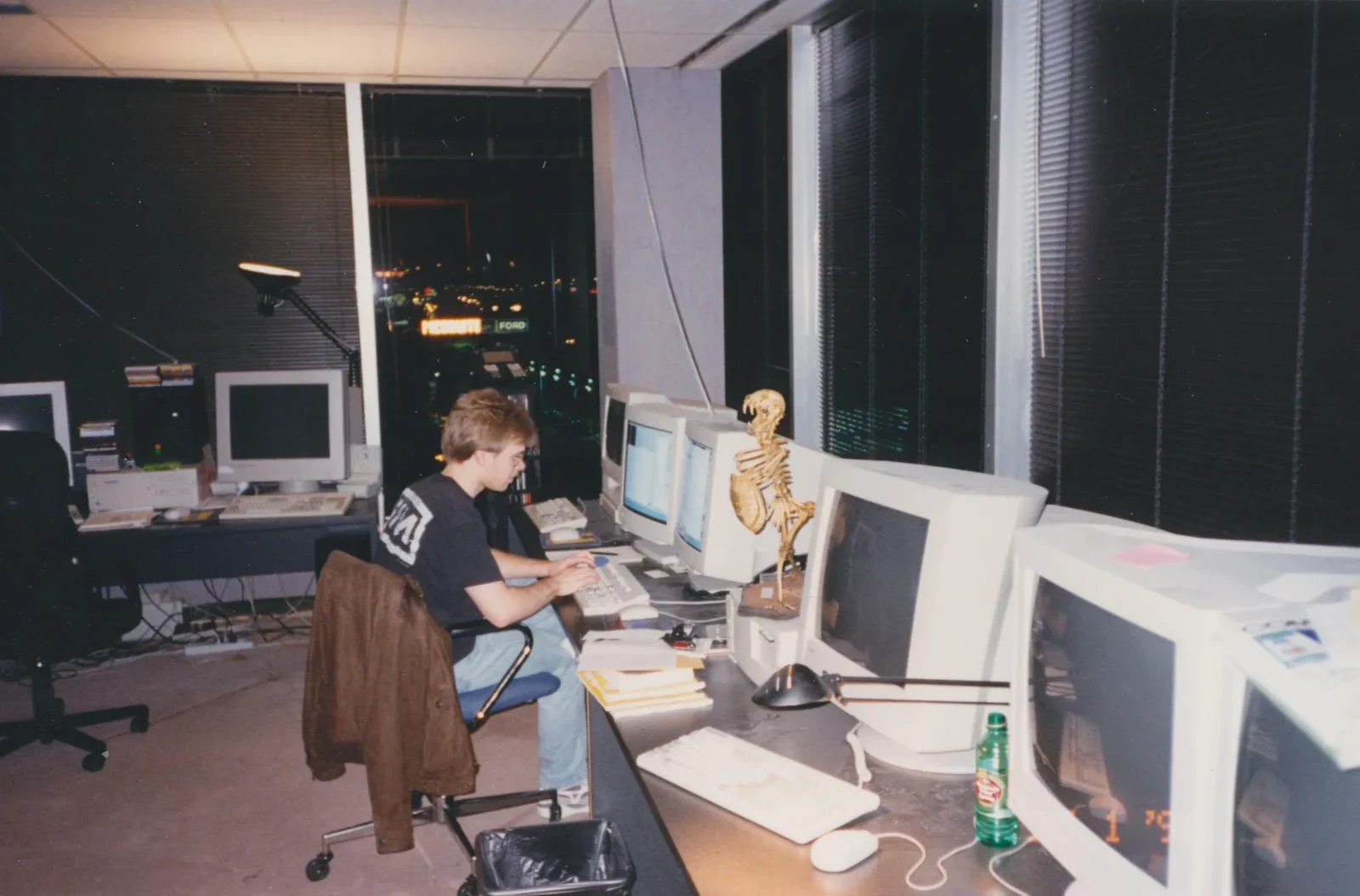

As such I will be running a local Llama 13B instance. For the first time in over a decade I've bought a PC that wasn't an HP workstation.

Specs:

H110 motherboard w/ Celeron and 4GB RAM, alongside an Nvidia Quadro 5200 8GB

Do you guys think it'll actually run a quantized llama? Is 500W PSU enough?

lol you'll find out when you try to run it

I need ur thoughts and prayers

I feel fairly confident esp with quantizing. I'm not expecting fast response and I can always add a second gfx card for 16gb vram.

Or comfortably run the 7B model which apparently sucks tho
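
For a rough sanity check on what fits where, here is a minimal sketch of the arithmetic. It assumes the weights dominate memory use and that size is roughly parameters times bits per weight. The 2 GB overhead for context and runtime buffers is a guess, not a measured number, and real quantized files tend to come out a bit larger than the nominal bit width suggests.

```python
def quantized_size_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    total_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits(n_params_billion: float, bits_per_weight: float,
         memory_gb: float, overhead_gb: float = 2.0) -> bool:
    """True if weights plus an assumed overhead fit in memory_gb.

    overhead_gb is a hypothetical allowance for context and buffers.
    """
    needed_gb = quantized_size_gb(n_params_billion, bits_per_weight) + overhead_gb
    return needed_gb <= memory_gb


# Compare the two model sizes from this thread against 8 GB and 16 GB of VRAM.
for name, params in [("7B", 7), ("13B", 13)]:
    for bits in (16, 8, 4):
        size = quantized_size_gb(params, bits)
        print(f"{name} @ {bits}-bit: ~{size:.1f} GB weights, "
              f"fits 8 GB: {fits(params, bits, 8)}, fits 16 GB: {fits(params, bits, 16)}")
```

By this estimate a 4-bit 13B is about 6.5 GB of weights, which is tight on a single 8 GB card once overhead is counted, while a 4-bit 7B leaves plenty of room.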

Oh yah one of the dope coooders dropped this gem yesterday: https://simonwillison.net/2023/Nov/29/llamafile

Looks like a pretty good way to run Shit tbh

transphobia

Now, theoretically, how would I get python to interact with the web interface

Actually what I'm gonna do is try to run llama.cpp and the Python repos so I can just use Python to ask it stuff while it runs in C++

Apparently this setup is super lightweight
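
A rough sketch of the Python side, assuming the model is served by a llama.cpp-style HTTP server (which is what llamafile starts up) listening on `127.0.0.1:8080` with a `/completion` endpoint that takes a JSON `prompt` and `n_predict` and returns the text under `content`. The address, endpoint, and field names are assumptions to check against whatever server version actually ends up running. The helper names `build_completion_request` and `ask` are made up for this example.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8080"  # assumed address of the local server


def build_completion_request(prompt: str, n_predict: int = 128,
                             base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request for the assumed /completion endpoint."""
    payload = json.dumps({"prompt": prompt, "n_predict": n_predict}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/completion",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, n_predict: int = 128, timeout_s: float = 600.0) -> str:
    """Send the prompt and return the generated text.

    The long timeout is deliberate: slow hardware may take minutes per answer.
    """
    request = build_completion_request(prompt, n_predict)
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        body = json.loads(response.read().decode("utf-8"))
    # "content" is the assumed name of the field holding the generated text.
    return body.get("content", "")
```

With the server running, `ask("Q: Is a 500W PSU enough? A:")` should come back with the completion as a plain string. Only the standard library is used, so nothing extra needs installing.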

lol idk just get it running first and start from there i guess

use koboldCPP and split over vram and ram

only works with GGML.bin models though
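
To get a feel for the split, here is a back-of-the-envelope sketch of how many layers might go on the GPU. It assumes every layer is the same size and that 1 GB of VRAM is held back for everything else. Both are simplifications, and `layers_on_gpu` is a made-up helper, not part of any tool.

```python
def layers_on_gpu(model_size_gb: float, n_layers: int,
                  vram_gb: float, reserve_gb: float = 1.0) -> int:
    """Estimate how many whole layers fit in VRAM.

    Assumes equal-sized layers; reserve_gb is a hypothetical safety margin.
    The remaining layers would stay in system RAM.
    """
    per_layer_gb = model_size_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(n_layers, int(usable_gb // per_layer_gb))


# Example: an 8-bit 13B (~13 GB of weights, 40 layers as far as I know)
# on an 8 GB card.
gpu_layers = layers_on_gpu(model_size_gb=13.0, n_layers=40, vram_gb=8.0)
print(f"offload {gpu_layers} layers to the GPU, keep {40 - gpu_layers} in RAM")
```

Treat the result as a starting point and lower it if the loader runs out of memory.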

i use a chromebook bro lol i'm not running anything locally

but even running remotely usually isn't as simple as it might seem so it might be a fair bit of trial and error when your parts arrive and you give it a go

I installed a github repo for the first time in my life today so i have high hopes

lol never a bad thing to start but i guess there's a charm in trying to figure out how to solve the frick ups that might happen

Graphics card is the most important thing, 24GB VRAM minimum to run non r-slurred text generation, 13Bs are pretty awful

70Bs are not realistic for hobby level yet, but the mid range stuff is

I actually decided to try a different approach. llama.cpp favors processor and RAM computation so I'm not running any significant amount of VRAM anymore. I'm gonna get 32GB (maybe 64GB) of DDR4 and upgrade the Celeron to an i5

That way I won't have to modify the model to split the load into VRAM (which can be finicky apparently). I'm fine with like 1min/token tbh so I might try running the 30B
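
For a ballpark on CPU speed, a common rule of thumb is that generation is limited by memory bandwidth, since every weight gets read about once per token. The sketch below runs that arithmetic. It assumes dual-channel DDR4-2133, a 4-bit 30B at about 15 GB, and that only half of the peak bandwidth is usable in practice. All three are assumptions, so treat the output as an order-of-magnitude guess.

```python
def peak_bandwidth_gbs(mega_transfers_per_s: float, channels: int = 2,
                       bus_bytes: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (64-bit bus per channel)."""
    return mega_transfers_per_s * 1e6 * bus_bytes * channels / 1e9


def est_tokens_per_s(model_size_gb: float, bandwidth_gbs: float,
                     efficiency: float = 0.5) -> float:
    """Bandwidth-bound estimate: one full pass over the weights per token.

    efficiency is a hypothetical fudge factor for real-world throughput.
    """
    return bandwidth_gbs * efficiency / model_size_gb


def answer_time_s(n_tokens: int, tokens_per_s: float) -> float:
    """Seconds needed to generate n_tokens at a steady rate."""
    return n_tokens / tokens_per_s


bandwidth = peak_bandwidth_gbs(2133)        # assumed dual-channel DDR4-2133
rate = est_tokens_per_s(15.0, bandwidth)    # assumed ~15 GB for a 4-bit 30B
print(f"~{bandwidth:.1f} GB/s peak, ~{rate:.2f} tokens/s, "
      f"~{answer_time_s(100, rate):.0f} s for a 100-token answer")
```

For comparison, at a flat 1 min/token a 100-token answer takes 100 minutes, so the per-token rate matters a lot more than it sounds.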

This appears to be a nearly decade old PC with an even older gfx card. I'm not sure if you're trolling or legitimately a noob.

If you want to play with these things I'd suggest renting a cloud server with beefy GPUs.

You pay a couple bucks per hour and they often have free credits. Googling I found this site: https://cloud-gpus.com

If you do want to build a system, I'd see if you have a local Microcenter and pick up the mobo combos they have and like a 16GB 4060ti.

Even then being able to use an A100 in the cloud for a couple bucks is a hard proposition to beat.

I'm legitimately r-slurred. I looked up the absolute minimum specs needed to run Llama and this rig fit the bill.

Afaik the biggest issue is vram to load the model into. Everything else doesn't have as much of an impact on model speed. And I'm entirely OK with a 7 to 10 min computing time for answers.

How much did you pay for it???

omg! For $800-1000 you can get a much better consumer rig

If you look up the quantized models on Hugging Face they'll tell you how much RAM you need

So far this is my build

Gigabyte H110 motherboard w/4gig DDR4 and celeron: $80

Power supply: $70

32GB DDR4 2133: $70

Intel i3: $30

Gfx: some POS 2gb card, free

HDD: free

Antec case: $40

Total: $290 CAD

I am actually gonna run a quantized 7B, which runs on Android and the Pi, so I'm no longer worried about specs. With 32GB RAM I can hold the entire model in memory with room to spare. I am actually going to use it for searching a vector DB so it should actually run fairly quickly.
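
The vector DB part boils down to nearest-neighbor search over embeddings. Below is a minimal brute-force sketch using cosine similarity in plain Python. The three-dimensional vectors and document IDs are toy data made up for illustration. Real embeddings would come from an embedding model and have hundreds of dimensions, and an actual vector DB would use an approximate index instead of scanning everything.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int = 3):
    """Return the k most similar (score, doc_id) pairs, best first."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return scored[:k]


# Toy index: hypothetical document IDs mapped to made-up embeddings.
INDEX = {
    "psu": [1.0, 0.0, 0.0],
    "ram": [0.0, 1.0, 0.0],
    "gpu": [0.7, 0.7, 0.0],
}

for score, doc_id in top_k([1.0, 0.1, 0.0], INDEX, k=2):
    print(f"{doc_id}: {score:.3f}")
```

The search itself is cheap compared to generation, so in this kind of setup the model only has to read the retrieved documents and write the answer.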

good luck bud!

Let me know what you think of llama. I kinda want to do something similar but am worried llama will suck too much in comparison to ChatGPT

It's not a fair comparison at all tbh. ChatGPT is just so incredibly powerful. Llama seems like it would be useful when trained on very specific things, I'll let you know how things go.

There's a project where they're training an instance of it on HN, which seems like a good use case for a small LLM. Just train it on a narrow range of topics and have it regurgitate answers
