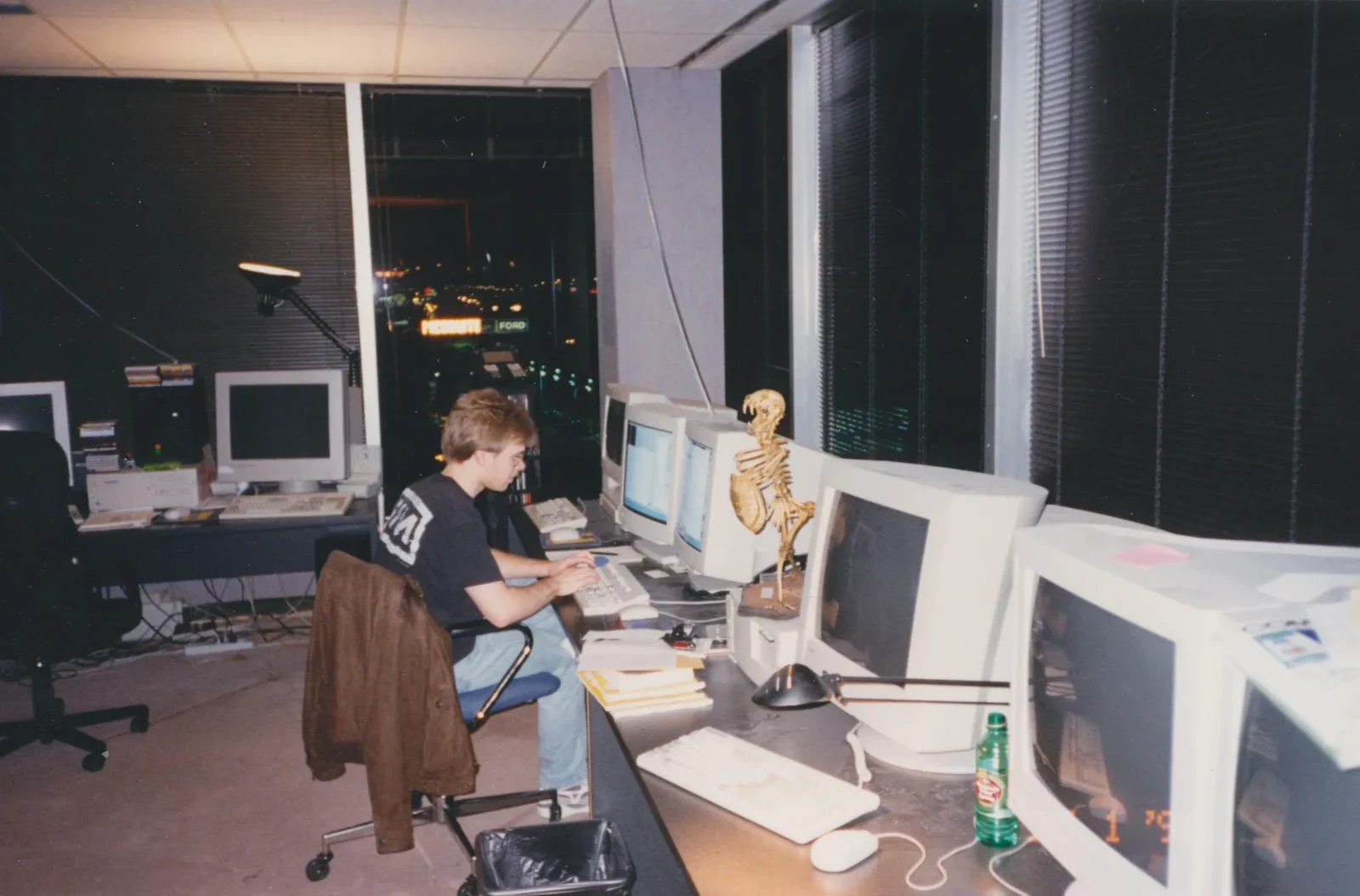

Since I've now listened to one (1) entire talk on AI, I am the world's foremost leading expert.

As such I will be running a local Llama 13B instance. For the first time in over a decade I've bought a PC that wasn't an HP workstation.

Specs:

H110 motherboard w/ Celeron and 4GB RAM alongside an Nvidia Quadro 5200 8GB

Do you guys think it'll actually run a quantized Llama? Is a 500W PSU enough?

This appears to be a nearly decade-old PC with an even older gfx card. I'm not sure if you're trolling or legitimately a noob.

If you want to play with these things I'd suggest renting a cloud server with beefy GPUs.

You pay a couple bucks per hour and they often have free credits. Googling I found this site: https://cloud-gpus.com

If you do want to build a system, I'd see if you have a local Micro Center and pick up one of the mobo combos they have, plus something like a 16GB 4060 Ti.

Even then being able to use an A100 in the cloud for a couple bucks is a hard proposition to beat.
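For a rough feel of the rent-vs-buy tradeoff, here's a quick break-even sketch. The $2/hour figure just stands in for "a couple bucks", and the $500 hardware cost is a made-up placeholder, not a quoted price. It also ignores electricity, resale value, and free credits.

```python
def break_even_hours(hardware_cost: float, cloud_rate_per_hour: float) -> float:
    """Hours of cloud rental that cost the same as buying the hardware outright."""
    if cloud_rate_per_hour <= 0:
        raise ValueError("cloud_rate_per_hour must be positive")
    return hardware_cost / cloud_rate_per_hour


# Hypothetical numbers: a $500 GPU vs. renting at $2/hour.
hours = break_even_hours(hardware_cost=500, cloud_rate_per_hour=2)
print(f"Renting is cheaper until about {hours:.0f} hours of use")
```

If you only plan to tinker for a few evenings, you'd likely never hit that number.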

I'm legitimately r-slurred. I looked up the absolute minimum specs needed to run Llama and this rig fit the bill.

Afaik the biggest issue is vram to load the model into. Everything else doesn't have as much of an impact on model speed. And I'm entirely OK with a 7 to 10 min computing time for answers.
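To sanity-check the VRAM point, here's a back-of-the-envelope sketch of how much memory the weights alone take at a given quantization. The 1.5 GiB overhead for context cache and buffers is my own guess, not a measured number, and real quant formats usually store a bit more than their nominal bits per weight, so treat the result as a lower bound.

```python
def estimate_weight_gib(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GiB."""
    total_bytes = n_params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


def fits_in_vram(
    n_params_billion: float,
    bits_per_weight: float,
    vram_gib: float,
    overhead_gib: float = 1.5,  # assumed headroom for cache/buffers, not measured
) -> bool:
    """True if weights plus the assumed overhead fit in the given VRAM."""
    needed = estimate_weight_gib(n_params_billion, bits_per_weight) + overhead_gib
    return needed <= vram_gib


for bits in (16, 8, 4):
    size = estimate_weight_gib(13, bits)
    verdict = "fits" if fits_in_vram(13, bits, vram_gib=8) else "does not fit"
    print(f"13B @ {bits}-bit: ~{size:.1f} GiB of weights, {verdict} in 8 GiB")
```

Under these assumptions only the 4-bit case squeezes into 8 GiB, and not by much, so a longer context or a slightly fatter quant format could push it over.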
