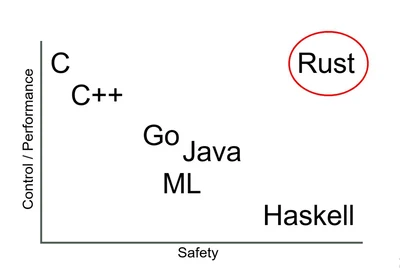

Background: DALL-E 2 "improved" the diversity of its outputs by silently adding diversity keywords to users' prompts.

tldr: it's the diversity stuff. Switch "cowboy" to "cowgirl", which would disable the diversity stuff because it's now explicitly asking for a 'girl', and OP's prompt works perfectly.

And it turns out that, like here, if we mess around with trying to trigger or disable the diversity stuff, we can get fine samples out; the trigger word appears to be... 'basketball'! If 'basketball' is in the prompt and no identity-related keywords like 'black' are, then the full diversity filter gets applied and destroys the results. I have no idea why 'basketball' would be a key term here, but perhaps basketball is just so strongly associated with African-Americans that it got included somehow, such as via a CLIP embedding distance?
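To make the guess concrete, here's a toy sketch of the behavior described above. Everything in it is an assumption for illustration: the word lists, the `rewrite_prompt` name, and the append-a-keyword step are hypothetical, not anything OpenAI has published.

```python
import random
import re

# Hypothetical word lists; the real system's terms (if any) are not public.
IDENTITY_TERMS = {"black", "white", "asian", "man", "woman", "girl", "cowgirl"}
TRIGGER_TERMS = {"basketball"}
APPENDED_TERMS = ["black", "asian", "female"]


def rewrite_prompt(prompt, rng=random):
    """Return the prompt as the guessed-at filter would pass it to the model."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    # An explicit identity keyword disables the rewrite entirely.
    if words & IDENTITY_TERMS:
        return prompt
    # A trigger word with no identity keyword gets a keyword silently appended.
    if words & TRIGGER_TERMS:
        return f"{prompt} {rng.choice(APPENDED_TERMS)}"
    return prompt
```

Under these assumptions, "a cowboy playing basketball" comes back with an extra keyword tacked on, while "a cowgirl playing basketball" passes through untouched, which matches the cowboy/cowgirl result above.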


The ethicist strags made up a problem nobody was complaining about and forced the engineers to break shit to "fix" it. If you wanted a black transsexual construction worker in a wheelchair, all you had to do was ask the machine for it. The vaguer your prompt is, the less likely you are to get what you want from the model, because fricking duh.


But but if it accurately represents the world then that's BIAS. It needs to represent my utopia where there's 1 black for every 1 ciswhite male!



The really funny thing is that they came out and said they added biases to represent "the world population", but somehow that results in outputs roughly matching the demographics of Californian cities rather than every other result being Chinese or Indian.
