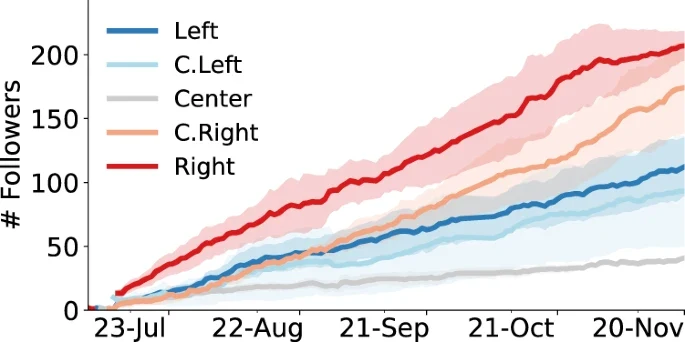

Growth in followers

The present results suggest that early choices about which sources to follow impact the experiences of social media users. This is consistent with previous studies4,5. But beyond those initial actions, drifter behaviors are designed to be neutral with respect to partisan content and users. Therefore, the partisan-dependent differences in their experiences and actions can be attributed to their interactions with users and information mediated by the social media platform; they reflect biases of the online information ecosystem.

Drifters with Right-wing initial friends are gradually embedded into dense and homogeneous networks where they are constantly exposed to Right-leaning content. They even start to spread Right-leaning content themselves. Such online feedback loops reinforcing group identity may lead to radicalization17, especially in conjunction with social and cognitive biases like in-/out-group bias and group polarization. The social network communities of the other drifters are less dense and partisan.

We selected popular news sources across the political spectrum as initial friends of the drifters. There are several possible confounding factors stemming from our choice of these accounts: their influence as measured by the number of followers, their popularity among users with a similar ideology, their activity in terms of tweets, and so on. For example, @FoxNews was popular but inactive at the time of the experiment. Furthermore, these quantities vary greatly both within and across ideological groups (Supplementary “Methods”). While it is impossible to control for all of these factors with a limited number of drifters, we checked for a few possible confounding factors. We did not find a significant correlation between initial friend influence or popularity measures and drifter ego network transitivity. We also found that the influence of an initial friend is not correlated with the drifter influence. However, the popularity of an initial friend among sources with similar political bias is a confounding factor for drifter influence. Online influence is therefore affected by the echo-chamber characteristics of the social network, which are correlated with partisanship, especially on the political Right20,48. In summary, drifters following more partisan news sources receive more politically aligned followers, becoming embedded in denser echo chambers and gaining influence within those partisan communities.
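The confounder check described above can be pictured with a small sketch. This is an illustration under stated assumptions, not the authors' analysis code: it assumes each drifter's ego network is available as an undirected edge list, takes transitivity to be the fraction of connected triples that close into triangles, and uses a plain Pearson coefficient as the correlation measure. The function names `transitivity` and `pearson` are hypothetical, and the paper's own procedure and statistical test may differ.

```python
from itertools import combinations
from math import sqrt


def transitivity(edges):
    """Fraction of connected triples that are closed (undirected graph)."""
    neighbors = {}
    for u, v in edges:
        if u == v:
            continue  # ignore self-loops
        neighbors.setdefault(u, set()).add(v)
        neighbors.setdefault(v, set()).add(u)

    closed = 0   # triples whose two endpoints are also linked
    triples = 0  # all pairs of neighbors around a center node
    for nbrs in neighbors.values():
        for a, b in combinations(nbrs, 2):
            triples += 1
            if b in neighbors[a]:
                closed += 1
    return closed / triples if triples else 0.0


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if constant)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        return 0.0
    return cov / (sd_x * sd_y)
```

With one transitivity value per drifter and one influence or popularity value per initial friend, `pearson` applied to the two lists gives the kind of coefficient whose significance would then be tested.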

The fact that Right-leaning drifters are exposed to considerably more low-credibility content than other groups is in line with previous findings that conservative users are more likely to engage with misinformation on social media20,49. Our experiment suggests that the ecosystem can lead completely unbiased agents to this condition; it is therefore not necessary to attribute the vulnerability to characteristics of individual social media users. Other mechanisms that may contribute to the exposure to low-credibility content observed for the drifters initialized with Right-leaning sources involve the actions of neighbor accounts (friends and followers) in the Right-leaning groups, including the inauthentic accounts that target these groups.

Although Breitbart News was not labeled as a low-credibility source in our analysis, our finding might still be biased in reflecting this source’s low credibility in addition to its partisan nature. However, @BreitbartNews is one of the most popular conservative news sources on Twitter (Supplementary Table 1). While further experiments may corroborate our findings using alternative sources as initial friends, attempting to factor out the correlation between conservative leanings and vulnerability to misinformation20,49 may yield a less-representative sample of politically active accounts.

While most drifters are embedded in clustered and homogeneous network communities, the echo chambers of conservative accounts grow especially dense and include a larger portion of politically active accounts. Social bots also seem to play an important role in the partisan social networks; the drifters, especially the Right-leaning ones, end up following many of them. Since bots also amplify the spread of low-credibility news33, this may help explain the prevalent exposure of Right-leaning drifters to low-credibility sources. Drifters initialized with far-Left sources do gain more followers and follow more bots compared with the Center group. However, these effects are less pronounced, and these drifters are less vulnerable to low-credibility content, than in the Right and Center-Right groups. Nevertheless, our results are consistent with findings that partisanship on both sides of the political spectrum increases the vulnerability to manipulation by social bots50.

Twitter has been accused of favoring liberal content and users. We examined the possible bias in Twitter’s news feed, i.e., whether the content to which a user is exposed in the home timeline is selected in a way that amplifies or suppresses certain political content produced by friends. Our results suggest this is not the case: in general, the drifters receive content that is closely aligned with whatever their friends produce. A limitation of this analysis is that it is based on limited sets of recent tweets from drifter home timelines (“Methods”). The exact posts to which Twitter users are exposed in their news feeds might differ due to the recommendation algorithm, which is not available via Twitter’s programmatic interface.
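One way to picture the news feed comparison is as a difference of averages. The sketch below is a simplified illustration, not the authors' procedure: it assumes every tweet has already been assigned a political alignment score on a scale from -1 (Left) to +1 (Right), and the function names `mean_score` and `feed_shift` are hypothetical.

```python
def mean_score(scores):
    """Average alignment score of a non-empty collection of tweets."""
    scores = list(scores)
    if not scores:
        raise ValueError("need at least one score")
    return sum(scores) / len(scores)


def feed_shift(friend_scores, timeline_scores):
    """Mean alignment of the home timeline minus that of friends' posts.

    A value near zero means the feed mirrors what friends produce;
    a positive value means it leans further Right than the friends,
    a negative value further Left.
    """
    return mean_score(timeline_scores) - mean_score(friend_scores)
```

Under these assumptions, a shift close to zero for every drifter group would match the reported finding that drifters receive content closely aligned with whatever their friends produce.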

Despite the lack of evidence of political bias in the news feed, drifters that start with Left-leaning sources shift toward the Right during the course of the experiment, sharing and being exposed to more moderate content. Drifters that start with Right-leaning sources do not experience a similar exposure to moderate information and produce increasingly partisan content. These results are consistent with observations that Right-leaning bots do a better job at influencing users51.

In summary, our experiment demonstrates that even if a platform has no partisan bias, the social networks and activities of its users may still create an environment in which unbiased agents end up in echo chambers with constant exposure to partisan, inauthentic, and misleading content. In addition, we observe a net bias whereby the drifters are drawn toward the political Right. On the conservative side, they tend to receive more followers, find themselves in denser communities, follow more automated accounts, and be exposed to more low-credibility content. Users have to make extra efforts to moderate the content they consume and the social ties they form in order to counter these currents and create a healthy and balanced online experience.

Given the political neutrality of the news feed curation, we find no evidence for attributing the conservative bias of the information ecosystem to intentional interference by the platform. The bias can be explained by the use (and abuse) of the platform by its users, and possibly by unintended effects of the policies that govern this use: neutral algorithms do not necessarily yield neutral outcomes. For example, Twitter may remove or demote information from low-credibility sources and/or inauthentic accounts, or suspend accounts that violate its terms. To the extent that such content or users tend to be partisan, the net result would be a bias toward the Center. How to design mechanisms capable of mitigating emergent biases in online information ecosystems is a key question that remains open for debate.

Wah wah wah. Now check this shit out.
