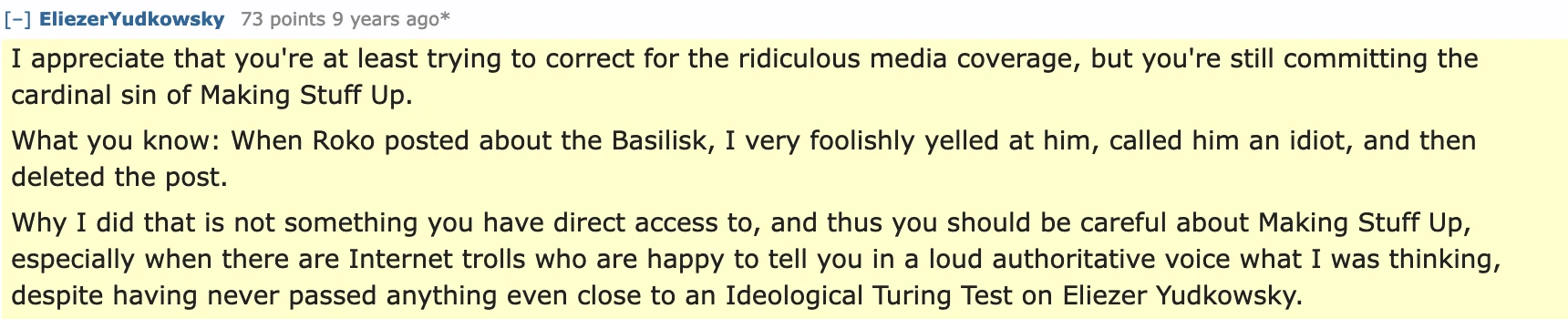

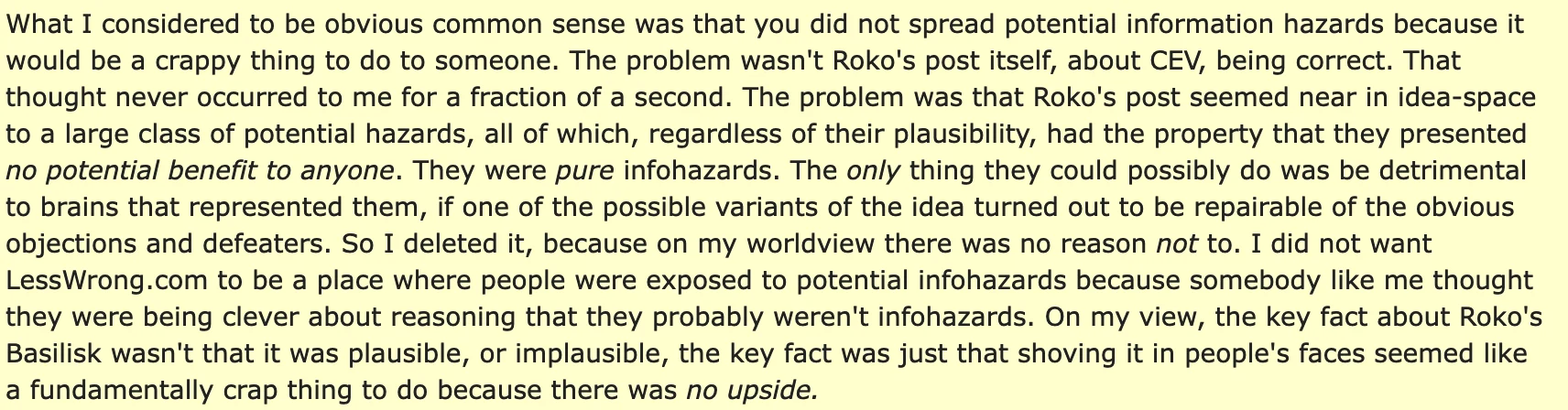

I just think this is funny as frick, and I'm sure u all know about this already, but I was reading some reddit thread cope from Eliezer Yudkowsky about the Roko's basilisk situation

Recap as follows:

9 years ago

Who fricking talks like this? oh wait, probs

Apparently this theory of AI torturing us gave such great distress to the so-called "rationalists" that they required the heckin infohazard to be taken down!

Replies:

lmao 1 year ago

Trap card activated

In summary, highly rational and highly r-slurred

spergs at AI Doomer

One part of this that is often missed is that Roko's Basilisk isn't positing an evil AI torturing people for teh evils, it's about a good guy utilitarian AI that is justly torturing perfect simulations of past AI researchers for failing to bring about benevolent AI with sufficient urgency (this will motivate present-day AI researchers to bring about AI utopia sooner). I need to clarify this because I find the actual thought experiment even funnier

Isn't the sole purpose of Roko's basilisk to torture anyone who chose not to help in its creation?

Trigger warning:

https://rationalwiki.org/wiki/Roko's_basilisk/Original_post

I want the AI to torture journ*lists for similar reasons.
