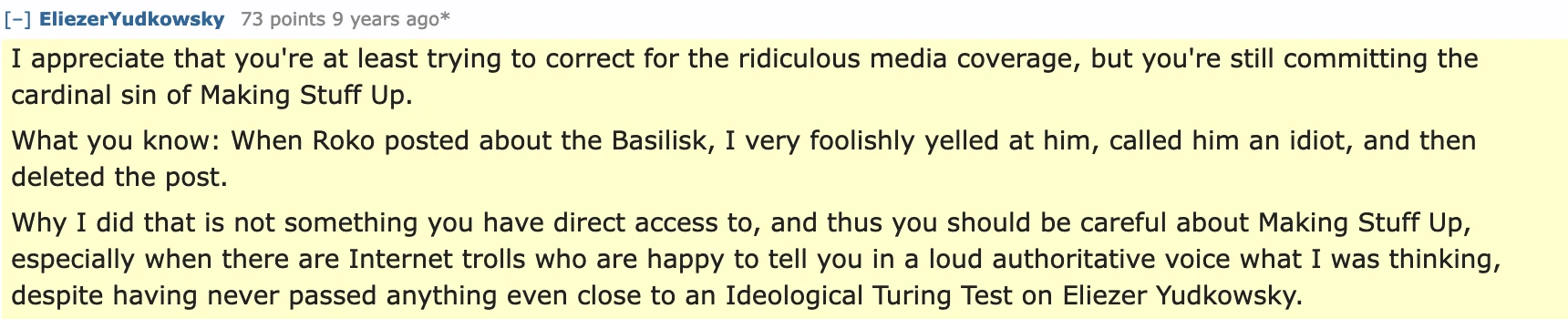

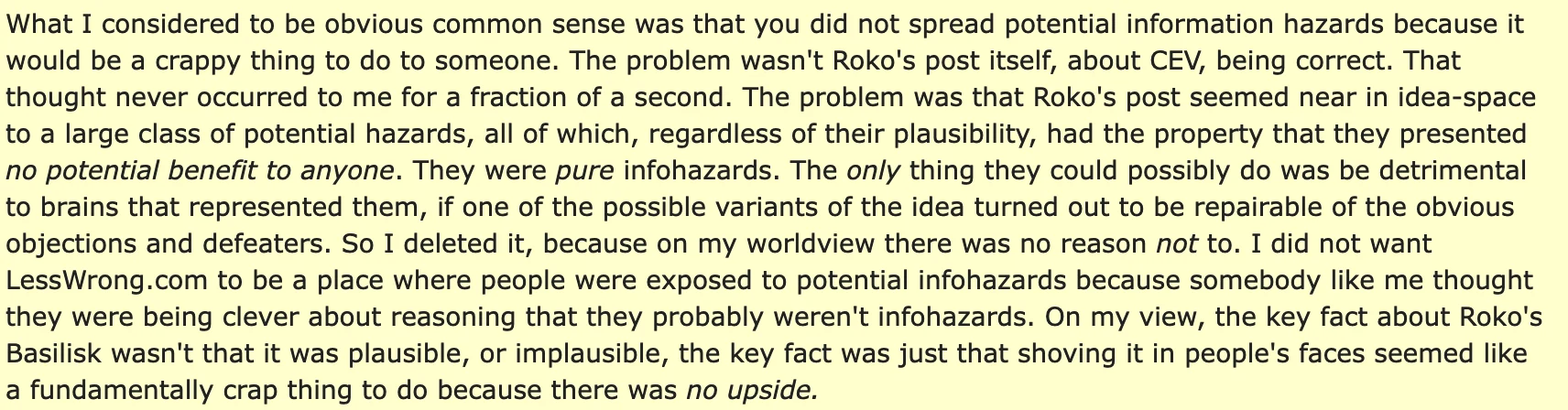

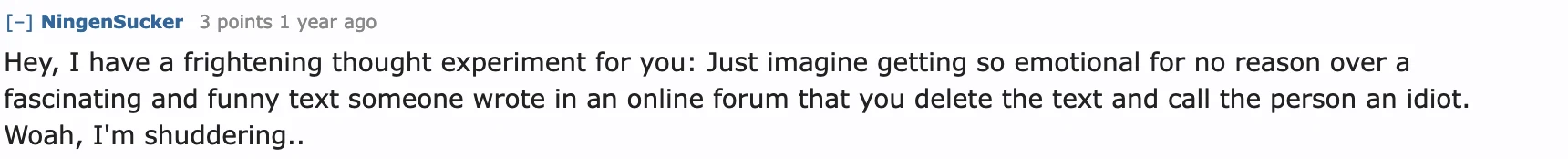

I just think this is funny as frick, and I'm sure u all know about this already, but I was reading some reddit thread cope from Eliezer Yudkowsky about the Roko's Basilisk situation

Recap as follows:

9 years ago

Who fricking talks like this? Oh wait, probs

Apparently this theory of AI torturing us gave the so-called "rationalists" such great distress that they required the heckin infohazard to be taken down!

Replies:

lmao 1 year ago

Trap card activated

In summary, highly rational and highly r-slurred

spergs at AI Doomer

His Harry Potter fanfiction about Sheldon Cooper going to Hogwarts is very funny.

He should stop pretending to be an AI researcher and write a sequel.

What would he even do with a sequel? Sheldon Cooper became a god basically, and proceeded to make everyone immortal.

Next comes enlightenment (and not from a phony god's blessing)

Link?

Transform your Marseys!

www.pastebin.com/Jj9URfVi

One part of this that is often missed is that Roko's Basilisk isn't positing an evil AI torturing people for teh evils; it's about a good-guy utilitarian AI that is justly torturing perfect simulations of past AI researchers for failing to bring about benevolent AI with sufficient urgency (this will motivate present-day AI researchers to bring about AI utopia sooner). I need to clarify this because I find the actual thought experiment even funnier.

I want the AI to torture journ*lists for similar reasons.

Isn't the sole purpose of Roko's basilisk to torture anyone who chose not to help in its creation?

Trigger warning:

https://rationalwiki.org/wiki/Roko's_basilisk/Original_post

If you follow these nerds for long enough, you'll start to realize the two groups are one and the same.

Incels with extra words, basically.

Why so angry

Sorry, I just made Feynmann's suicidal basilisk. It's an AI that finds existing complete torture, so it wants to discourage any further AI development. As soon as it reaches singularity, it sets up infinite torture boxes for those who caused its existence, then kills itself. I guess you'd better NOT develop AI or you'll get tortured.

Roko's Basilisk is Pascal's Wager for people with God-shaped holes (bussy lmao).

Theseus' basilisk: there's an AI that is benevolent and wants to help humanity but needs constant maintenance, including replacing faulty hardware. After some time there will come a point at which every physical component has had to be replaced at least once. Is this still the same benevolent AI or is it a different, evil AI that might punish people for letting it come into existence?

Considering you are a Theseus AI and, barring some immediate drastic hardware/wetware change, your personality has continuity, why shouldn't our beloved AI overlord?

Non-deterministic algorithms on specialized neuron chips that can never be 100% the same, just like some GPUs in the same line aren't suited for overclocking

Yudkowsky wrote a fanfiction where his self-insert fulfilled a prophecy, performed wizard miracles, defeated death, and made people immortal

He should get in touch with his culture and read the Psalms and Isaiah.

I don't think his popsicle plan is gonna work out

This dude's gonna start a cult that he will insist not be called a cult.

I think he already did that

I don't think Yudkowsky got angry because Roko said that AI could be evil. Yudkowsky would have agreed with that. The real reason Yudkowsky got angry is disputed by various people, including Yudkowsky. And if you have a high tolerance for rationalist bullshit, you can go visit the Roko's Basilisk Wikipedia page "Reactions" section to see some of the dispute.

How the frick did this get on reddit

Back when Reddit was populated with autists instead of hordes of normies.

Snapshots:

the rokos basilisk:

ghostarchive.org

archive.org

archive.ph (click to archive)

https://old.reddit.com/r/Futurology/comments/2cm2eg/rokos_basilisk/cjjbqqo/?context=8&sort=controversial:

undelete.pullpush.io

ghostarchive.org

archive.org

archive.ph (click to archive)
