I was chudded yesterday so I waited a bit for that to expire before posting this.

Alpaca is an API that lets you trade stocks algorithmically. I've been wanting options for a long time, as most of my trading is theta-ganging options. There has never really been a good options-trading API available to the average person until now. So much potential degeneracy has been unleashed!

RCS (Rich Communication Services) will bring a number of iMessage-style features to texts between Android and iPhone users. This includes things such as read receipts, typing indicators, and higher-quality images and videos.

The one thing that won't be changing, however, is the color of the messaging bubbles.

SEETHE

https://old.reddit.com/r/Android/comments/17x3bo1/apple_confirms_rcs_messages_will_have_green

https://old.reddit.com/r/Android/comments/17wzc06/google_will_work_with_apple_on_implementing_rcs

https://old.reddit.com/r/apple/comments/17x3d2d/what_color_bubbles_will_rcs_messages_be_apple

https://old.reddit.com/r/apple/comments/17wtc6l/apple_announces_that_rcs_support_is_coming_to

https://news.ycombinator.com/item?id=38293082

Short primer.

RCS is something that Apple initially helped fund the development of. As usual, when a bunch of companies get together to create a protocol, it gets bogged down by too many cooks in the kitchen. This was the case with USB 3 or USB-C or whatever, and it's why Apple developed Lightning cables. It is shittier than iMessage, and currently encryption only works by sending everything through Google servers lmao. It basically does everything iMessage does that normies need, but it came out much later, it's bulkier, and the rollout sucks because it depends on carrier and Google support. There's a laundry list I don't care to type up, and if you're still reading this, what the frick is wrong with you?

Q & A

Why is bubble color important?

It helps us in the dating world know who is capable of making smart purchases, and it gives an idea of how much they will be able to contribute to the relationship. I don't mind paying for dinner, but if she expects me to provide her gum after she blows me, sorry girl.

tom7 creates a humorous submission for SIGBOVIK each year and it's always really fricking cool, like this video.

- J : Yes

There is literally no other information found. The link to the source just shows this:

What, speculation (aka internet rumours) is now "information"? I mean, I guess by definition.

This is typical Wikipedia nonsense, but it's getting on my nerves more and more. It's really bad when you look at celeb pages. You might as well just read the publicist shit.

For example, look at Yungblud's Wikipedia page:

AMA from a guy who flexes his $1,500-a-month "job":

https://old.reddit.com/r/casualiama/comments/jfn0ck/i_am_a_former_paid_shill_on_wikipedia_for_a

Other shit post discussion: https://news.ycombinator.com/item?id=32840097

Link to actual ARTicle: https://en.wikipedia.org/wiki/Wikipedia:Wikipedia_Signpost/2022-06-26/Special_report

Additional Link: https://wikipediocracy.com/2020/12/15/the-nicholas-alahverdian-story

Discussion: https://old.reddit.com/r/RealWikiInAction/comments/kfprtt/the_nicholas_alahverdian_story

Cool subreddit: https://old.reddit.com/r/RealWikiInAction/top?sort=top&t=all

Donate to real people, not organizations or (most) charities. And not people on the internet either. I mean real people you meet in real life. And not friends or family (in most circumstances), but like homeless dudes or something. Somebody truly in need.

Adobe realizing their $20B acquisition of Figma is now worth zero because of AI doodling https://t.co/KaZDFqZTOl pic.twitter.com/te5PLFKmVq

— Hassan Hayat 🔥 (@TheSeaMouse) November 15, 2023

ICYMI, you can now ask @github Copilot questions about your entire project with '@ workspace'.

— Visual Studio Code (@code) November 15, 2023

Space between @ and workspace because Twitter. pic.twitter.com/xNrVvaKuyq

Claws Mail needs OAuth for Outlook. Doesn't matter what browser I use, it won't authenticate; I get an error for localhost. See here:

https://www.claws-mail.org/faq/index.php/Oauth2

what do
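For context, and hedged since I haven't checked Claws Mail's exact flow: in a standard OAuth2 authorization-code flow (RFC 6749), the provider sends the browser back to the app's redirect URI with `?code=...` in the query string. If nothing is listening on localhost, the browser shows a connection error, but the address bar can still hold the code, and some desktop clients expect you to paste it in by hand. Assuming that's what's happening here, a minimal Python sketch for pulling the code (or the provider's error) out of that URL; `extract_oauth_code` is a made-up helper, not part of Claws Mail:

```python
from urllib.parse import parse_qs, urlparse


def extract_oauth_code(redirect_url: str) -> str:
    """Return the `code` query parameter from an OAuth2 redirect URL.

    Assumes a standard authorization-code redirect such as
    http://localhost/?code=...&state=... (RFC 6749, section 4.1.2).
    Raises ValueError if the provider sent back an error instead.
    """
    # parse_qs also undoes percent-encoding, e.g. %2B -> "+".
    query = parse_qs(urlparse(redirect_url).query)
    if "error" in query:
        # Error redirects carry `error` and, optionally, `error_description`.
        detail = query.get("error_description", [""])[0]
        raise ValueError(f"OAuth2 error: {query['error'][0]} {detail}".strip())
    if "code" not in query:
        raise ValueError("no 'code' parameter in the redirect URL")
    return query["code"][0]
```

Pass it the full URL copied from the address bar. If it raises with an `error=` value instead, that points at the provider rejecting the request rather than at the browser.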

- CREAMY_DOG_ORGASM : Idk but can you buy me an unban award please

I'm not spending nearly $500 after taxes for a midrange years old card, I'm just not going to do it.

1 of 7 ruqqus us holes that remain

Videos and pretty much anything to do with graphics are lagging the system. Idk much about hardware, but I assume this has something to do with the graphics card driver?

So I am trying to get set up with a desktop environment, and all my experience with Linux has been about half a year in a terminal through SSH.

I was able to install Debian 12 by partitioning about 30 or 40 GB of space on my HP/Intel laptop.

The monitor, mouse, and keyboard worked on first boot without me having to do anything, which was nice. But from what I have read, Intel drivers should already come with the default Debian install.

Obviously I don't have this issue when booting to windows so I'm hoping it's just a driver issue.
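One way to test the driver theory is to check which kernel driver, if any, is bound to the GPU. On Intel integrated graphics that is normally `i915`. If nothing is bound, the desktop is probably falling back to software rendering, which would explain laggy video (`glxinfo -B` from `mesa-utils` reporting `llvmpipe` as the renderer points the same way). A rough Python sketch that parses `lspci -k` output; it assumes `lspci` (from `pciutils`) is installed, and the function names are made up for illustration:

```python
from __future__ import annotations

import re
import subprocess

# Device classes lspci uses for GPUs.
DISPLAY_CLASSES = re.compile(r"VGA compatible controller|3D controller|Display controller")


def display_drivers(lspci_k_output: str) -> dict[str, str | None]:
    """Map each display controller in `lspci -k` output to its bound driver.

    Device lines start in column 0; their details (including
    "Kernel driver in use: ...") follow on indented lines.
    A value of None means no driver is bound to that controller.
    """
    drivers: dict[str, str | None] = {}
    current = None
    for line in lspci_k_output.splitlines():
        if line and not line[0].isspace():
            # A new device starts; only track it if it is a display controller.
            current = line if DISPLAY_CLASSES.search(line) else None
            if current is not None:
                drivers[current] = None
        elif current is not None and "Kernel driver in use:" in line:
            drivers[current] = line.split(":", 1)[1].strip()
    return drivers


def local_display_drivers() -> dict[str, str | None]:
    """Run `lspci -k` on this machine (needs pciutils) and parse the result."""
    result = subprocess.run(["lspci", "-k"], capture_output=True, text=True, check=True)
    return display_drivers(result.stdout)
```

On the laptop, `local_display_drivers()` should show `i915` next to the Intel controller; `None` there would back up the driver theory.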

- rDramaHistorian : We have so many cute codecels

thigh high pink&blue socks for everyone of u

The author of this article is hilarious. Here are some choice quotes:

How the fuck does that take you multiple attempts, require you to Google shit, and then eventually take so long that your incrementally less r-slurred friend has to get ChatGPT to do it?

Out of curiosity I looked up the table of contents of this book: https://catdir.loc.gov/catdir/toc/ecip063/2005032051.html

Contents

1\. Programming with Visual C++ 2005

2\. Data, Variables, and Calculations

3\. Decisions and Loops

4\. Arrays, Strings, Pointers, and References

That's right, this guy got filtered right after the chapter that explains if and for statements.

There's more r-sluration just after this paragraph as well, where he struggles to write a Hello World program and frames it as an epic intellectual struggle:

The rest of the article is equally braindead; it looks like he's a CRUD front-end dev or something. He brags about some of his high-octane "craftsmanship" here:

This really makes the rest of the article's pseudointellectual posturing extra hilarious to me.

This isn't really drama, but I thought it was funny, so there.

There's a Hacker News discussion, but I don't think any of them read the article (or maybe they are all equally  ) because almost nobody is pointing out how retarded this article is.

C code provides a mostly serial abstract machine.

Guess what nigger, processors are still mostly serial. Yes there are multiple pipelines with operations flying around the place, but at the end of the day the program counter is incremented on each core and the next instruction is fetched.

In contrast, GPUs achieve very high performance without any of this logic, at the expense of requiring explicitly parallel programs.

Lmao GPUs are broken as fuck for anything that isn't trivial arithmetic. Branches absolutely kill performance. The memory model is even more poorly defined than on CPUs; atomics and branches regularly behave differently from what the vendor says.
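A toy cost model of the branch problem, assuming a 32-lane warp (NVIDIA's warp width) and that a divergent warp simply runs both sides of an `if/else` with the inactive lanes masked off. Real GPUs have more tricks than this, so treat it as an illustration of the lockstep constraint, not a performance model of any actual chip:

```python
from __future__ import annotations

WARP_SIZE = 32  # lanes per warp (NVIDIA's width; AMD hardware can use 64)


def warp_branch_cost(conditions: list[bool], then_cost: int, else_cost: int) -> int:
    """Cycles one lockstep warp spends on an if/else in this toy model.

    All lanes share one instruction stream, so if any lane needs a branch
    the whole warp walks through it, with the other lanes masked off.
    A divergent warp therefore pays for both sides.
    """
    cycles = 0
    if any(conditions):
        cycles += then_cost  # lanes whose condition is False sit idle
    if not all(conditions):
        cycles += else_cost  # lanes whose condition is True sit idle
    return cycles


def kernel_cost(values: list[int], then_cost: int = 10, else_cost: int = 10) -> int:
    """Total cycles for `if v % 2 == 0: ... else: ...` over `values`, one warp at a time."""
    total = 0
    for start in range(0, len(values), WARP_SIZE):
        warp = values[start:start + WARP_SIZE]
        total += warp_branch_cost([v % 2 == 0 for v in warp], then_cost, else_cost)
    return total
```

With the default costs, `kernel_cost(list(range(64)))` comes out to 40 cycles because every warp mixes even and odd values, while the same 64 values reordered so the evens come first cost 20. Same work, half the time, just from keeping each warp on one path.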

This unit is conspicuously absent on GPUs, where parallelism again comes from multiple threads rather than trying to extract instruction-level parallelism from intrinsically scalar code.

This is because you CAN'T implement a good register renamer for GPUs. It has the same problem as branching: the execution model is such that the same instruction is executed in lockstep across several threads; if one thread has a different instruction ordering, everything gets fucked up.

If instructions do not have dependencies that need to be reordered, then register renaming is not necessary.

Nigga what? The same theoretical optimizations apply to GPUs: add a, b; some slow instruction; add b, c. The second add could still be executed in parallel first for perf win. The problem is that the SIMT execution model does not make reordering easy.
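Here's the example above run through a toy register renamer, reading `add a, b` as the two-operand form `a = a + b` (an assumption, x86-style) and using a hypothetical `div` as the "some slow instruction". Each write gets a fresh physical register, so the only dependencies left are true read-after-write ones. This only shows the renaming trick itself; it says nothing about how hard it would be to build in SIMT hardware:

```python
from __future__ import annotations

from itertools import count

# Each instruction: (opcode, destination, sources). "add a, b" is read as the
# two-operand form a = a + b, so `a` is both a source and the destination.
program = [
    ("add", "a", ["a", "b"]),  # a = a + b
    ("div", "d", ["d", "e"]),  # stand-in for "some slow instruction"
    ("add", "b", ["b", "c"]),  # b = b + c overwrites b, which instruction 0 reads
]


def rename(prog):
    """Map architectural registers to physical ones, giving every write a fresh register."""
    fresh = count()
    table: dict[str, str] = {}  # architectural name -> physical register with its latest value

    def lookup(reg: str) -> str:
        if reg not in table:  # a value that was live before the program started
            table[reg] = f"p{next(fresh)}"
        return table[reg]

    renamed = []
    for op, dst, srcs in prog:
        phys_srcs = [lookup(r) for r in srcs]  # read through the current mapping first
        table[dst] = f"p{next(fresh)}"        # then point the destination at a new register
        renamed.append((op, table[dst], phys_srcs))
    return renamed


def raw_dependencies(renamed) -> set[tuple[int, int]]:
    """(producer, consumer) index pairs that share a physical register (true RAW deps)."""
    writer = {dst: i for i, (_, dst, _) in enumerate(renamed)}
    return {
        (writer[src], j)
        for j, (_, _, srcs) in enumerate(renamed)
        for src in srcs
        if src in writer
    }
```

`raw_dependencies(rename(program))` is empty: the second `add` writes a new physical register instead of the `b` that the first `add` still needs to read, so it is free to go ahead of the slow `div`.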

Consider another core part of the C abstract machine's memory model: flat memory. This hasn't been true for more than two decades. A modern processor often has three levels of cache in between registers and main memory, which attempt to hide latency.

From the first processors, loading and storing has been just another communication channel for the processor. A somewhat large part of the address space is dedicated to hardware registers that have whatever wacky functionality you want, e.g. write to 0x1000EFAA to turn on the blinky yahoo red LED. It is you who has been perverted by years of programming into thinking that memory means bytes the programmer stores.
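To make the memory-mapped I/O point concrete, a toy bus in Python: some addresses are plain RAM, others are device registers where a "store" is really a command. The LED device and its bit layout are hypothetical, and the address is the one from the post. In real C you'd write something like `*(volatile uint8_t *)0x1000EFAA = 1;` and let the hardware do the rest:

```python
from __future__ import annotations


class Led:
    """Hypothetical device: bit 0 of its register turns the LED on or off."""

    def __init__(self) -> None:
        self.on = False

    def write(self, value: int) -> None:
        self.on = bool(value & 1)

    def read(self) -> int:
        return int(self.on)


class Bus:
    """Toy memory bus: most addresses are RAM, a few belong to devices.

    A store to a device address is a command to that device and never
    lands in RAM, which is all memory-mapped I/O is from the CPU's side.
    """

    def __init__(self) -> None:
        self.ram: dict[int, int] = {}
        self.devices: dict[int, Led] = {}

    def map_device(self, addr: int, device: Led) -> None:
        self.devices[addr] = device

    def store(self, addr: int, value: int) -> None:
        if addr in self.devices:
            self.devices[addr].write(value)
        else:
            self.ram[addr] = value

    def load(self, addr: int) -> int:
        if addr in self.devices:
            return self.devices[addr].read()
        return self.ram.get(addr, 0)  # untouched RAM reads as 0 in this toy


LED_REG = 0x1000EFAA  # the address from the post
```

After `bus.map_device(LED_REG, led)` and `bus.store(LED_REG, 1)`, the LED is on and nothing was written to `bus.ram` at all.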

The cache is, as its name implies, hidden from the programmer and so is not visible to C. Efficient use of the cache is one of the most important ways of making code run quickly on a modern processor, yet this is completely hidden by the abstract machine, and programmers must rely on knowing implementation details of the cache (for example, two values that are 64-byte-aligned may end up in the same cache line) to write efficient code.

Whoa! You need to actually know how your computer works to program? Explicit instructions for "load to L1", "load to L2", and "flush cache" are unnecessary; you can already achieve these effects with the normal instruction set.
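Since the quote leans on cache-line details, here's the arithmetic, assuming 64-byte lines (common on x86-64, but the size varies by CPU). Two addresses share a line when they fall in the same 64-byte block. If two cores keep writing to different variables in one line, the line bounces between their caches (false sharing), which is why per-thread counters often get padded out to a full line:

```python
from __future__ import annotations

LINE_SIZE = 64  # bytes per cache line: common on x86-64, but CPU-specific


def cache_line(addr: int, line_size: int = LINE_SIZE) -> int:
    """Index of the cache line that holds byte address `addr`."""
    return addr // line_size


def same_line(a: int, b: int, line_size: int = LINE_SIZE) -> bool:
    """True if two byte addresses land in the same cache line."""
    return cache_line(a, line_size) == cache_line(b, line_size)


def padded_offsets(n: int, line_size: int = LINE_SIZE) -> list[int]:
    """Byte offsets that give each of `n` per-thread counters its own line,
    assuming the array itself starts on a line boundary."""
    return [i * line_size for i in range(n)]
```

`same_line(0x1000, 0x1008)` is true, so two adjacent 8-byte counters would false-share, while `padded_offsets(4)` gives `[0, 64, 128, 192]`, one line each.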

Optimizing C

In this section, he argues that it is difficult for a compiler to optimize C. This is actually fair. C currently sits at the worst middle ground, where some things are unoptimizable (according to the standard) and other things are undefined behavior that sucks for programming. For example, unsigned integers wrap on overflow (nice behavior for the programmer, but it makes things harder for the compiler), while signed integer overflow is undefined (nice for compiler optimizations, but really sucky for the programmer). I think C is actually trying to be two languages: a high-level assembly and a compiler IR, and I think we are trending towards the latter. For example, passing NULL to memcpy is UB even if the number of bytes to copy is 0, because it lets the compiler assume the input/output pointers are non-NULL. I'm honestly somewhat torn on which I'd like more.
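Python ints never overflow, so here's a sketch that spells out the C rules for 32-bit `int`/`unsigned` (the 32-bit width is an assumption; C only sets minimum widths). Unsigned addition is defined to wrap; for signed values the check has to happen before the add, because the overflowing add itself is already UB:

```python
INT32_MIN = -(2**31)
INT32_MAX = 2**31 - 1


def uint32_add(a: int, b: int) -> int:
    """C's unsigned 32-bit addition: defined to wrap modulo 2**32."""
    return (a + b) % 2**32


def int32_add_overflows(a: int, b: int) -> bool:
    """Check, before adding, whether a + b would overflow a 32-bit signed int.

    Written the way it has to be in C: neither comparison computes a value
    outside the int32 range, since the overflowing add itself would be UB.
    """
    if b > 0:
        return a > INT32_MAX - b
    return a < INT32_MIN - b
```

In actual C, GCC and Clang provide `__builtin_add_overflow`, and C23 adds `ckd_add` in `<stdckdint.h>` for exactly this kind of check.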

We have a number of examples of designs that have not focused on traditional C code to provide some inspiration. For example, highly multithreaded chips, such as Sun/Oracle's UltraSPARC Tx series, don't require as much cache to keep their execution units full. Research processors[2] have extended this concept to very large numbers of hardware-scheduled threads. The key idea behind these designs is that with enough high-level parallelism, you can suspend the threads that are waiting for data from memory and fill your execution units with instructions from others. The problem with such designs is that C programs tend to have few busy threads.

Lmao all the examples he gives are failures. The nigga wants a dataflow machine, which we decided aren't useful in the 80s. Also, it's not just C programs; I'd say most programs are serial in nature.
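For what the quoted design is going for, a toy model of a fine-grained multithreaded core: one execution unit, round-robin issue from whichever threads aren't stalled, and every few instructions a load that blocks its thread for a fixed latency. All parameters are made up for illustration; real chips like the UltraSPARC T series are far more involved:

```python
def utilization(n_threads: int, work_per_thread: int, load_every: int, mem_latency: int) -> float:
    """Fraction of cycles in which the single execution unit issues something.

    Toy fine-grained multithreading: each cycle, issue one instruction from the
    next thread (round-robin) that isn't waiting on memory. Every
    `load_every`-th instruction of a thread is a load that stalls that thread
    for `mem_latency` cycles.
    """
    remaining = [work_per_thread] * n_threads  # instructions left per thread
    issued = [0] * n_threads                   # instructions issued per thread
    ready_at = [0] * n_threads                 # first cycle each thread may issue again
    cycle = busy = 0
    next_thread = 0                            # round-robin starting point
    while any(remaining):
        for k in range(n_threads):
            t = (next_thread + k) % n_threads
            if remaining[t] and ready_at[t] <= cycle:
                remaining[t] -= 1
                issued[t] += 1
                busy += 1
                is_load = issued[t] % load_every == 0
                # A load blocks its thread until the data arrives; anything
                # else lets the thread issue again next cycle.
                ready_at[t] = cycle + (mem_latency if is_load else 1)
                next_thread = t + 1
                break
        cycle += 1  # a cycle where no thread was ready counts as idle
    return busy / cycle if cycle else 0.0
```

With a load every 4 instructions and a 20-cycle latency, one thread keeps the unit busy about 18% of the time (100 of 556 cycles), while 16 threads push it to roughly 94%. Which is the author's point in reverse: the trick only pays off if you actually have 16 busy threads.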

Consider in contrast an Erlang-style abstract machine, ... A cache coherency protocol for such a system would have two cases: mutable or shared.

The reason modern cache coherency protocol state machines have 70+ states is that they try to preempt and paper over cache misses, because moving data between cores is much more expensive than adding a few more state machine transitions. The same situation would arise in his fantasy.

Immutable objects can simplify caches even more, as well as making several operations even cheaper.

Most of the useful work your program does uses mutable data structures. Yes, even the Haskellers have a Vector type that you can push to and pop from.

A processor designed purely for speed, not for a compromise between speed and C support, would likely support large numbers of threads, have wide vector units, and have a much simpler memory model.

Yes if you got rid of all the legacy cruft and tried making something new you could make a genuinely good processor. All this to run your crappy garbage collected bloated functional-programming language. Sigh.

There is a common myth in software development that parallel programming is hard. This would come as a surprise to Alan Kay, who was able to teach an actor-model language to young children.

When you're playing around with lego bricks provided by someone else's framework it is easy. However, offloading complexity onto someone else doesn't remove it. You pay for it in performance.

In general, I hate the tyranny of "big-idea" programming languages which he advocates for. You WILL not mutate any variables, perform any IO, or do any useful work and you WILL like it.

I wanna read comics on my Kindle, so I download them in bulk in .cbz and .cbr format to transfer via Calibre.

Problem is, Calibre won't transfer them if there is anything in the archive besides images.

Many comics have .nfo and Thumbs.db files put inside them by the r-slurs who uploaded them. To unscrew it, I need to open the .cbz/.cbr file with WinRAR and manually delete the offending files.

Is there a way to do this besides opening them up one by one? .cbz/.cbr files don't let me bulk unpack them, so I can't search them all at once, and there are hundreds of them.
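.cbz files are just ZIP archives, so Python's standard `zipfile` module can do this in bulk. A minimal sketch, assuming anything without an image extension is junk (the extension list is a guess, adjust to taste). It rebuilds each archive without the junk and swaps it in place, so back up your comics first. It doesn't handle .cbr files: those are usually RAR archives, which the standard library can't read or write.

```python
from __future__ import annotations

import zipfile
from pathlib import Path

# What counts as a page image is an assumption; extend as needed.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".gif", ".webp", ".bmp"}


def clean_cbz(path: Path) -> list[str]:
    """Strip every non-image file from one .cbz; return the names removed.

    Directory entries are dropped too; files inside folders keep their paths.
    """
    with zipfile.ZipFile(path) as src:
        entries = [e for e in src.infolist() if not e.is_dir()]
        junk = {e.filename for e in entries
                if Path(e.filename).suffix.lower() not in IMAGE_EXTS}
        if not junk:
            return []  # already clean: leave the file untouched
        tmp = path.with_suffix(".tmp")
        with zipfile.ZipFile(tmp, "w") as dst:
            for e in entries:
                if e.filename not in junk:
                    # Reusing the ZipInfo keeps each entry's name, timestamp and compression method.
                    dst.writestr(e, src.read(e))
    tmp.replace(path)  # swap the rebuilt archive in over the original
    return sorted(junk)


def clean_folder(folder: str) -> dict[str, list[str]]:
    """Run clean_cbz on every .cbz under `folder`; report what was removed where."""
    report: dict[str, list[str]] = {}
    for cbz in Path(folder).rglob("*.cbz"):
        removed = clean_cbz(cbz)
        if removed:
            report[str(cbz)] = removed
    return report
```

Something like `clean_folder("/path/to/comics")` (hypothetical path) returns a dict of which files were stripped from which archive.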

Orange site https://news.ycombinator.com/item?id=38266340

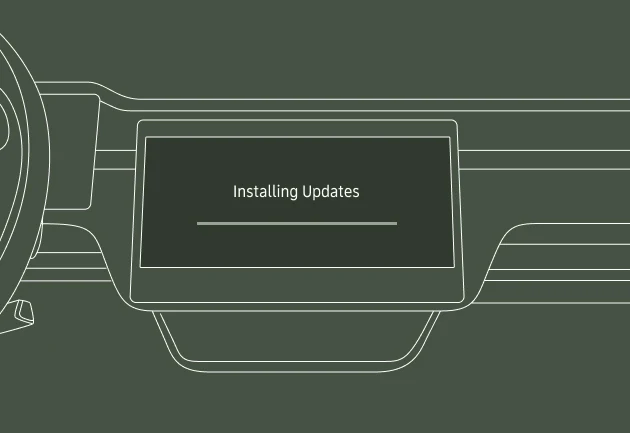

Rivian blames “fat finger” for infotainment-bricking software update

Some affected Rivian EVs may require physical servicing to fix the problem.

The more innovation-minded people in the auto industry have heralded the advent of the software-defined car. It's been spun as a big benefit for consumers, too—witness the excitement among Tesla owners when that company adds a new video game or childish noise to see why the rest of the industry joined the hype train. But sometimes there are downsides, as some Rivian owners are finding out this week.

The EV startup, which makes well-regarded pickup trucks and SUVs, as well as delivery vans for Amazon, pushed out a new over-the-air software update on Monday. But all is not well with 2023.42; the update stalls before it completes installing, taking out both infotainment and main instrument display screens.

Rivian VP of software engineering Wassym Bensaid explained the problem in a post on Rivian's subreddit:

Hi All,

We made an error with the 2023.42 OTA update - a fat finger where the wrong build with the wrong security certificates was sent out. We cancelled the campaign and we will restart it with the proper software that went through the different campaigns of beta testing.

Service will be contacting impacted customers and will go through the resolution options. That may require physical repair in some cases.

This is on us - we messed up. Thanks for your support and your patience as we go through this.

Update 1 (11/13, 10:45 PM PT): The issue impacts the infotainment system. In most cases, the rest of the vehicle systems are still operational. A vehicle reset or sleep cycle will not solve the issue. We are validating the best options to address the issue for the impacted vehicles. Our customer support team is prioritizing support for our customers related to this issue. Thank you.

Rivian is still working on a fix. And perhaps regretting this gif on its website:

!codecels on a scale of 0 to  how over is the future of personal transportation?

Doctor reveals weird toilet position to help you poop when constipated https://t.co/57CJNGNIKg pic.twitter.com/eqNa5cARNT

— New York Post (@nypost) November 15, 2023

Microsoft will let users uninstall Edge, Bing, and disable ads on Windows 11 as it complies with the Digital Markets Act

Well Firefoxcels?

Canuck spergs about heaters in his woodworking shop.

calls for TDD (total drama death), wants to end anonymous online accounts

- Paleo Energetique

posts it.