- 37

- 16

Here is the cipher. The question has been scrambled from its original form.

Here is the key:

The first to give me the answer to the question will get 10k MB and a unique badge. The only hint I will give is that I started with the Caesar cypher method. Badge should be ready in the next few days or so. The next 4 will get 10k mb.

!ghosts would someone in badgemaxxers mind pinging them please?
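For anyone unfamiliar with the hint: a Caesar cipher shifts every letter by a fixed number of places in the alphabet. The sketch below is only a starting point, since the post says the scrambling merely *started* with Caesar and the cipher text and key are not reproduced here. The function names `caesar_shift` and `all_shifts` are made up for illustration.

```python
import string


def caesar_shift(text: str, shift: int) -> str:
    """Shift each ASCII letter by `shift` places, wrapping around the alphabet.

    Non-letters (spaces, digits, punctuation) are passed through unchanged.
    """
    out = []
    for ch in text:
        if ch in string.ascii_lowercase:
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        elif ch in string.ascii_uppercase:
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        else:
            out.append(ch)
    return "".join(out)


def all_shifts(ciphertext: str) -> dict[int, str]:
    """Undo every possible shift (0..25) so a human can eyeball the results."""
    return {s: caesar_shift(ciphertext, -s) for s in range(26)}


if __name__ == "__main__":
    secret = caesar_shift("Hello, World!", 3)
    print(secret)
    for shift, candidate in all_shifts(secret).items():
        print(shift, candidate)
```

With only 26 possible shifts, brute-forcing the Caesar layer is trivial; any extra scrambling applied on top of it would still have to be worked out separately.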

- 17

- 24

LocalMonero, a way to buy Monero with fiat and no KYC, will be shutting down.

Best way now is to use Bisq if you want to avoid centralized exchanges. Maybe some image board will come up for p2p

- 5

- 11

- 40

- 80

- 21

- 25

hi guys, i am looking for sites to add to my webring! gimme ur urls :p thanks @X for suggesting neocities!

- 20

- 17

hi, im learning some html in class right now, but it's all just .html files on my computer, how do i add it to the web? preferably for free, also i dont want it to be slow

- 38

- 121

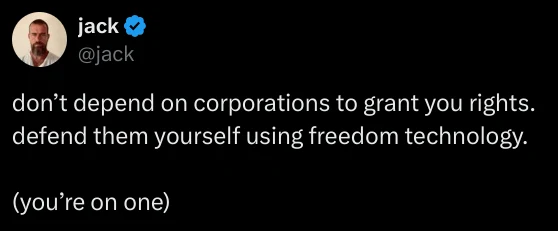

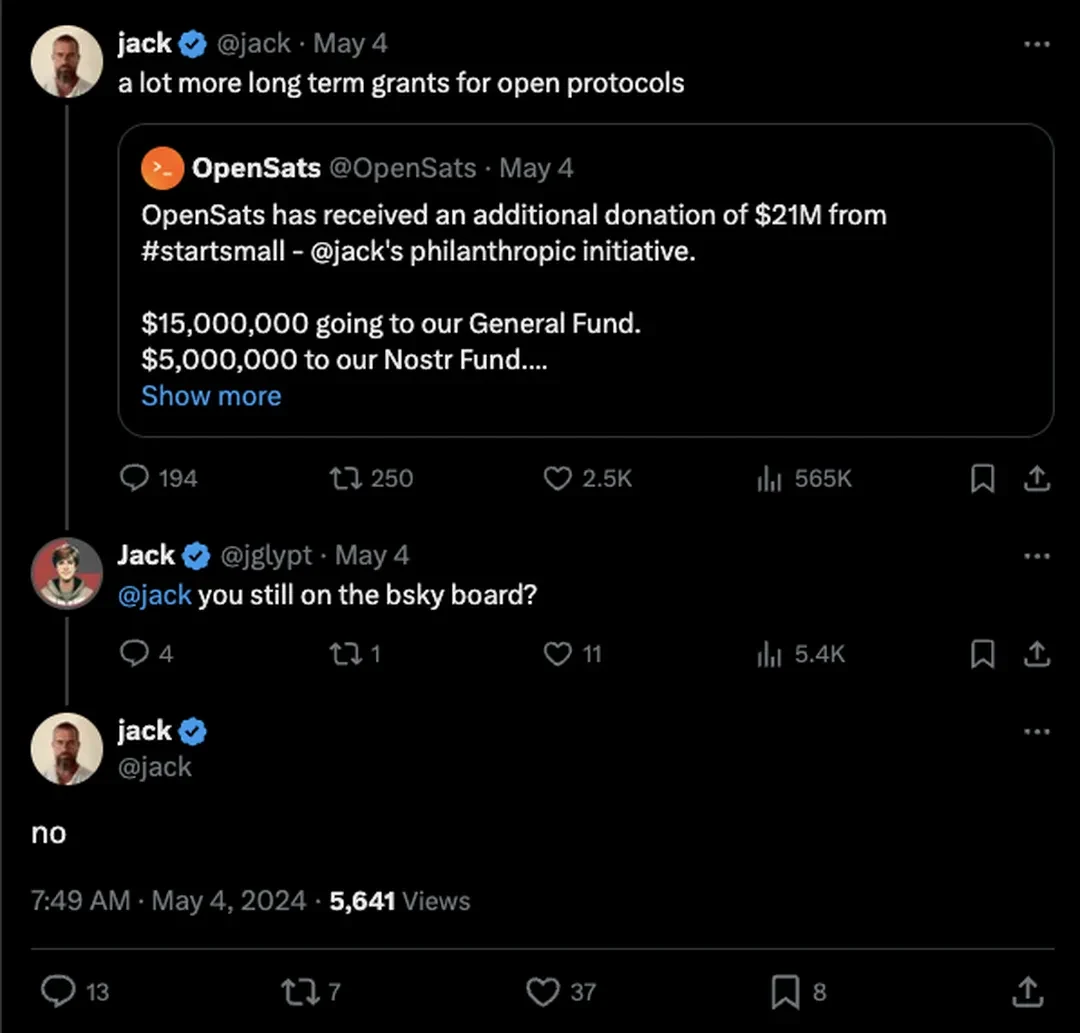

Twitter founder Jack Dorsey is no longer on the board of Bluesky, the decentralized social media platform he helped start. In two posts today, Bluesky thanked Dorsey while confirming his departure and adding that it's searching for a new board member “who shares our commitment to building a social network that puts people in control of their experience.”

The posts come a day after an X user asked Dorsey if he was still on the company's board, and Dorsey responded, without further elaboration, “no.” As TechCrunch points out, Dorsey was on a tear yesterday, unfollowing all but three accounts on X while referring to Elon Musk's platform as “freedom technology.”

Neither Bluesky nor Dorsey himself seems to have said how or why he left the board. For now, two board members remain: CEO Jay Graber and Jabber / XMPP inventor Jeremie Miller. Dorsey originally backed Bluesky in 2019 as a project to develop an open-source social media standard that he wanted Twitter to move to. He later joined its board of directors when it split from Twitter in 2022.

But Dorsey seemingly hadn't been a particularly active participant at the company. In March, when The Verge's Nilay Patel asked Graber for Decoder about his level of involvement with Bluesky, she said she gets “some feedback occasionally,” but implied he's otherwise “being Jack Dorsey on a cloud,” as Nilay put it. Months before that interview, Dorsey had closed his Bluesky account.

Bluesky did not immediately respond to The Verge's request for comment.

Update May 5th, 2024, 4:37PM ET: Updated with Bluesky's confirmation of Dorsey's departure from its board.

- 57

- 103

TunnelVision, as the researchers have named their attack, largely negates the entire purpose and selling point of VPNs, which is to encapsulate incoming and outgoing Internet traffic in an encrypted tunnel and to cloak the user's IP address. The researchers believe it affects all VPN applications when they're connected to a hostile network and that there are no ways to prevent such attacks except when the user's VPN runs on Linux or Android. They also said their attack technique may have been possible since 2002 and may already have been discovered and used in the wild since then.

( . . . . )

Interestingly, Android is the only operating system that fully immunizes VPN apps from the attack because it doesn't implement option 121. For all other OSes, there are no complete fixes. When apps run on Linux there's a setting that minimizes the effects, but even then TunnelVision can be used to exploit a side channel that can be used to de-anonymize destination traffic and perform targeted denial-of-service attacks. Network firewalls can also be configured to deny inbound and outbound traffic to and from the physical interface. This remedy is problematic for two reasons: (1) a VPN user connecting to an untrusted network has no ability to control the firewall and (2) it opens the same side channel present with the Linux mitigation.
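The excerpt only names "option 121" without explaining it. In DHCP, option 121 is the classless static route option (RFC 3442), which lets a DHCP server hand routes to a client. A rough sketch of the wire format follows. The addresses, and the idea of advertising two /1 routes that are more specific than a VPN's default route, are illustrative assumptions and not details taken from the quoted article. The function names are hypothetical.

```python
import ipaddress


def encode_option_121(routes: list[tuple[str, str]]) -> bytes:
    """Encode (destination CIDR, router) pairs as an option 121 payload.

    Per RFC 3442 each route is: one byte of prefix length, then only the
    significant octets of the destination, then the 4-byte router address.
    """
    payload = bytearray()
    for cidr, router in routes:
        net = ipaddress.IPv4Network(cidr)
        significant = (net.prefixlen + 7) // 8
        payload.append(net.prefixlen)
        payload += net.network_address.packed[:significant]
        payload += ipaddress.IPv4Address(router).packed
    return bytes(payload)


def decode_option_121(payload: bytes) -> list[tuple[str, str]]:
    """Decode an option 121 payload back into (destination CIDR, router) pairs."""
    routes = []
    i = 0
    while i < len(payload):
        width = payload[i]
        if width > 32:
            raise ValueError(f"invalid prefix length {width}")
        significant = (width + 7) // 8
        end = i + 1 + significant + 4
        if end > len(payload):
            raise ValueError("truncated route entry")
        # Pad the destination back out to four octets.
        dest = payload[i + 1:i + 1 + significant] + bytes(4 - significant)
        router = ipaddress.IPv4Address(payload[i + 1 + significant:end])
        net = ipaddress.IPv4Network((dest, width), strict=False)
        routes.append((str(net), str(router)))
        i = end
    return routes


if __name__ == "__main__":
    # Hypothetical example: two /1 routes via a made-up gateway address.
    # Together they cover all of IPv4 and are more specific than 0.0.0.0/0.
    pushed = encode_option_121([
        ("0.0.0.0/1", "192.168.1.1"),
        ("128.0.0.0/1", "192.168.1.1"),
    ])
    print(pushed.hex())
    print(decode_option_121(pushed))
```

Dumping the option from a captured DHCP offer and decoding it like this is one way to see whether a network is pushing unexpected routes, though it is not a mitigation.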

- 5

- 13

- 6

- 10

Trans lives matter

- 49

- 56

It seems as though we've arrived at the moment in the AI hype cycle where no idea is too bonkers to launch. This week's eyebrow-raising AI project is a new twist on the romantic chatbot—a mobile app called AngryGF, which offers its users the uniquely unpleasant experience of getting yelled at via messages from a fake person. Or, as cofounder Emilia Aviles explained in her original pitch: “It simulates scenarios where female partners are angry, prompting users to comfort their angry AI partners” through a “gamified approach.” The idea is to teach communication skills by simulating arguments that the user can either win or lose depending on whether they can appease their fuming girlfriend.

The central appeal of a relationship-simulating chatbot, I've always assumed, is that they're easier to interact with than real-life humans. They have no needs or desires of their own. There's no chance they'll reject you or mock you. They exist as a sort of emotional security blanket. So the premise of AngryGF amused me. You get some of the downsides of a real-life girlfriend—she's furious!!—but none of the upsides. Who would voluntarily use this?

Obviously, I downloaded AngryGF immediately. (It's available, for those who dare, on both the Apple App Store and Google Play.) The app offers a variety of situations where a girlfriend might ostensibly be mad and need “comfort.” They include “You put your savings into the stock market and lose 50 percent of it. Your girlfriend finds out and gets angry” and “During a conversation with your girlfriend, you unconsciously praise a female friend by mentioning that she is beautiful and talented. Your girlfriend becomes jealous and angry.”

The app sets an initial “forgiveness level” anywhere between 0 and 100 percent. You have 10 tries to say soothing things that tilt the forgiveness meter back to 100. I chose the beguilingly vague scenario called “Angry for no reason,” in which the girlfriend is, uh, angry for no reason. The forgiveness meter was initially set to a measly 30 percent, indicating I had a hard road ahead of me.

Reader: I failed. Although I genuinely tried to write messages that would appease my hopping-mad fake girlfriend, she continued to interpret my words in the least generous light and accuse me of not paying attention to her. A simple “How are you doing today?” text from me—Caring! Considerate! Asking questions!—was met with an immediately snappy answer: “Oh, now you care about how I'm doing?” Attempts to apologize only seemed to antagonize her further. When I proposed a dinner date, she told me that wasn't sufficient but also that I better take her “somewhere nice.”

It was such an irritating experience that I snapped and told this bitchy bot that she was annoying. “Great to know that my feelings are such a bother to you,” the sarcast-o-bot replied. When I decided to try again a few hours later, the app informed me that I'd need to upgrade to the paid version to unlock more scenarios for $6.99 a week. No thank you.

At this point I wondered if the app was some sort of avant-garde performance art. Who would even want their partner to sign up? I would not be thrilled if I knew my husband considered me volatile enough to require practicing lady-placation skills on a synthetic shrew. While ostensibly preferable to AI girlfriend apps seeking to supplant IRL relationships, an app designed to coach men to get better at talking to women by creating a robot woman who is a total killjoy might actually be even worse.

- 45

- 150

- 92

- 70

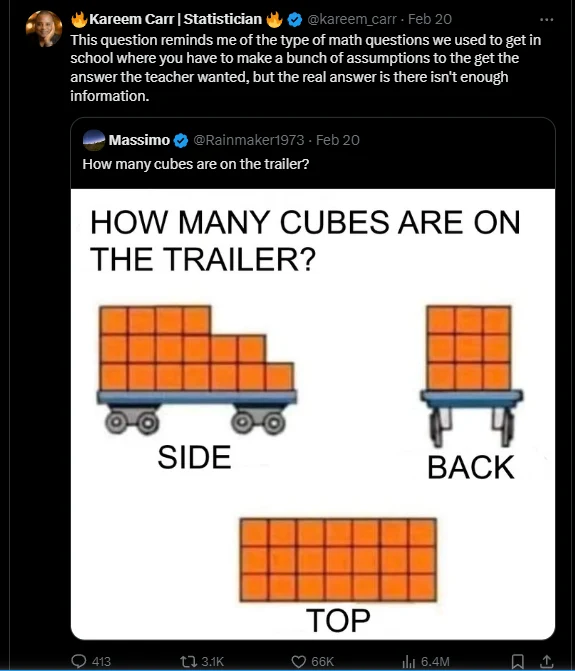

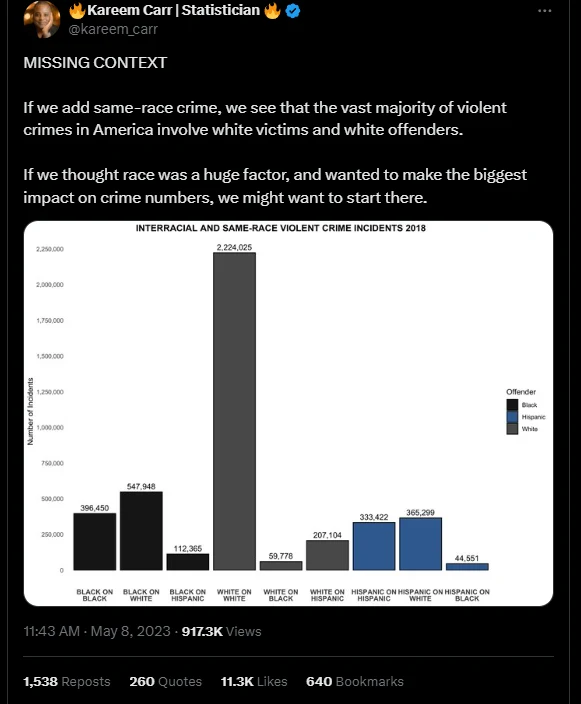

For the uninitiated: This is the absolute r-slur who spent weeks arguing that "2 + 2 ≠ 4"

https://www.westernjournal.com/wokeness-comes-mathematics-academics-saying-225/

He's also famous for making sophomoric ggplot charts in R to show off his "data science" chops

and going viral for bullshit like this:

- 26

- 29

According to FBI records, all seven tips were submitted from four specific, identified IP addresses. Subscriber information for these IP addresses demonstrated that all four were assigned to a particular provider (hereinafter, “Company A”). Company A provides a service that allows its users to access the Internet via an isolated web browser to help protect users from security threats and for other purposes. In general, when a user of Company A's services accesses a website through Company A's product, the website will record an IP address associated with Company A, and not the end user.

- 13

- 45

- 87

- 112

We have heard from several sources who told us that the reason for these firings is that Rebecca Tinucci, former head of Tesla's EV Charging division, resisted Musk's demand to fire large portions of her team.

While this is hearsay, it's plausible considering the language in Musk's letter announcing the firings – which claimed that some executives are not taking headcount reduction seriously, and made a point to say that executives who retain the wrong employees may see themselves and their whole teams cut. It isn't a stretch to think that Musk included those demands since they were related to his firing of Tinucci and her team.

- 34

- 36

orange site: https://news.ycombinator.com/item?id=40235114

i have lived in exclusively red counties my entire life

alaska is not as red as i would expect

also lol @ wisconsin

- 10

- 29

- 12

- 31

Orange Site:

- 11

- 31

https://www.gizmochina.com/2024/04/25/huawei-pura-70-ai-object-removal-raises-privacy-concerns/

AI has become a defining feature of smartphones in 2024, particularly in the field of photography. From image generation to object removal, AI is changing the way we capture and edit photos.

Huawei's latest Pura 70 series also features an object removal function powered by AI. But it has come under fire as the feature can inadvertently erase parts of people's clothing.

Disgruntled users on Weibo, a popular Chinese social media platform, have been posting videos highlighting this concerning issue. The videos show how effortless it is to remove clothing with a single tap using the phone's “smart AI retouching” feature.

The problematic nature of this feature is pretty evident: anyone can easily alter an image to expose parts of a person's body without their consent. It raises serious privacy concerns and could lead to the misuse and sharing of inappropriate content.

Huawei customer service has acknowledged the problem, attributing it to loopholes within the AI algorithm. They have assured users that these issues will be addressed in upcoming system updates.

While this is a step in the right direction, Huawei must quickly fix the issue. The ability to alter photos in such a way brings up ethical questions and shows how AI technology can be misused.

This incident is a reminder of the importance of responsible AI development and implementation. AI features, especially those involving image manipulation, require careful testing and safeguards to prevent unintended consequences and potential privacy violations.

Unique Badge!


to solve this Cipher! First


about how local contracting firm isn't great to the filth it hires as contractors

Diversity Is Our Strength


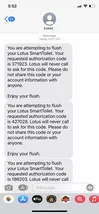

IMPORTANT

"Data Scientist" (Kareem Carr) thinks that "Python is a massive pain in the butt to install"

walks back their statements of continuing FreeBSD support….in a random Reddit post, to the chagrin of many.

Pura 70 series AI editing tool draws controversy as it can remove clothing """""unintentionally"""""