lol my wife’s friend was talking about her brother’s secret elk spot and would not give up any helpful details on where I could go for my muzzleloader season. She shared a pic with my wife though pic.twitter.com/EiMoLhcYjk

— Barrett (@SledgeDev) October 19, 2024

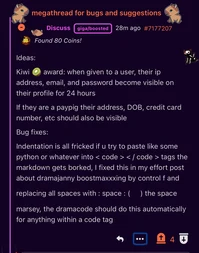

Yet another reason to shun Krogers: they are speedrunning the grocery industry toward adoption of The Mark!

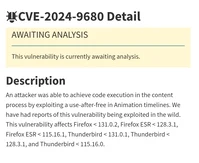

- BayBeeJesus : Homophobia & complicated sounding computery stuff

aight u tardfuckles, the last showing on this was absolutely fucking pathetic. 99% of the response was dramaslop barely recognizable as conscious thought, congrats on again demonstrating ur truly the planet's biggest collection of retards:  . i especially want to call out the absolute

who not-so-cleverly thot he'd dig thru my latest reddit account looking for dirt cause he's just that mad all of this way went so far over his head i'm basically on fucking mars by comparison. that's right: be mad i actually hit that literal bleeding edge where no human has thot before, ur tears of shame and jealously crying about i said this mean thing or that mean thing... brings me endless amusement.

to recap the last post, in an obviously futile attempt to bring u up to speed: no one needed to deboonk the halting oracle. turing was wrong that his diagonal inverse could be a fully computable number, as it would then need to enumerate over itself and give its own n-th inverse, which doesn't fucking exist, cause it's not a real goddamn number!

to respond to slop spouted in critique: i don't care that you think u can run the program to compute the inverse, that's total delusion. either you:

- (a) iterate over ur running program and yourself trying to find the inverse of an inverse which still doesn't actually exist, or

- (b) more likely you think u can run `diagonal_inverse` while ignoring ur own program, failing to realize u are actually just lying about the fucking program ur running cause it never actually provides the n-th inverse to itself, a number u keep pathetically clinging to as computable, but then don't actually treat like an actual computable number!

either way is total batshit nonsense, so  the diagonalization argument is deader than ur mom's trussy after last night.

now, if u were actually worth literally anything at all, the next concern you'd have is: so what happens now to the halting problem, given its motivation was deeply flawed? wow, great fking question, i'm just getting to that

we'll just ignore the fact u were too stupid to actually ask. at least u stopped raiding the cat litter this time around

let's consider the basic bitch version of the halting paradox:

we have a naïve halting oracle: hALtS(m) -> {true iff m halts, false iff otherwise}

and the disproof function: paradox = () -> hALtS(paradox) && loop_forever()

such that paradox is not only uncomputable, but unclassifiable into either the set of functions that halts or the set that does not... which should be strictly opposing sets that all functions can be classified into. ignoring any ability to compute anything at all, merely returning a consistent mapping here gets fucked up, as the program has the singular purpose to defy its classification. predicting and returning false in hALtS(paradox) clearly causes it to immediately halt, while predicting true causes it to loop_forever(). a god descending from the heavens to save ur unworthy soul from indefinite fallacy couldn't define a consistent mapping for this fellatious crap...

now the traditional view here is to undergo celebratory jerking urself off on how mindblowingly amazing it is that we're too fucking retarded as a species to rationalize out a one line self-referential function  ... wipe that cum off ur face bro, we can do better. or at least i can, i have -∞ expectations that even just one of u is truly capable beyond regurgitation mathematical norms

(I) the first key realization is that we can quite easily detect if this is happening.

this quite obviously must be true for anyone to accept turing's proof in the first place, but i'll give a more explicit explanation for how to do so algorithmically:

subbing true in for hALtS(paradox) gives us:

paradox_t = () -> true && loop_forever(), leaving hALtS(paradox_t) as clearly and consistently false

subbing false in for hALtS(paradox):

paradox_f = () -> false && loop_forever(), leaving hALtS(paradox_f) as clearly and consistently true

neither the halting result of paradox_t or paradox_f match the associated subbed in value, so therefore this forms a halting paradox. with this simple algorithm demonstrated, it's quite definitely possible for the oracle to figure it's about to get fucked by malicious user input. clearly we need to do something about dramatards trying to fuck over general decidability.
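The substitution check in (I) can be sketched in a small toy model. The assumption here (not from the post) is that a program is summarized by a hypothetical `Program` callable that maps the answer its single oracle call receives to `Behavior.HALT` or `Behavior.LOOP`; all names are illustrative. Because the behavior for each answer is known up front, this only mechanizes the substitution step, not halting analysis of arbitrary machines.

```python
from enum import Enum
from typing import Callable


class Behavior(Enum):
    HALT = "halt"
    LOOP = "loop"


# Toy model (assumption, not from the post): a "program" is described by what
# it does once its single oracle call returns a given boolean answer.
Program = Callable[[bool], Behavior]


def paradox(answer: bool) -> Behavior:
    # paradox = () -> hALtS(paradox) && loop_forever()
    # `&&` short-circuits: a false answer skips loop_forever() and halts.
    return Behavior.LOOP if answer else Behavior.HALT


def is_halting_paradox(program: Program) -> bool:
    """Algorithm (I): substitute each possible answer and check whether the
    resulting behavior agrees with it. If neither substitution agrees, no
    naive answer can be consistent at this call site."""
    return all(
        (program(answer) is Behavior.HALT) != answer
        for answer in (True, False)
    )


print(is_halting_paradox(paradox))  # True
```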

(II) we can upgrade the oracle and response to be context-aware

instead of just dumbly returning the objective mapping everywhere like an absolute moron waiting to get paradoxed out of existence, we can take into consideration the specific call site of the oracle, by utilizing basic stack analysis of where the call into the oracle came from. it will return true if and only if a true prediction remains consistent with the execution that follows. otherwise false is returned, with all other considerations ignored:

halts = (m) -> {true iff m halts && m will halt after true prediction, false iff otherwise}

if we go back to our basic paradox with our improved oracle:

paradox = () -> halts(paradox) && loop_forever()

with algorithm (I) halts can recognize the self-referential paradox, and with fix (II), because it knows returning 'true' in this location leads to 'loop_forever()', it simply escapes this by returning false. this will cause paradox to immediately halt. but muh false consistency... shut up we'll get to that.

let's first contrast this by looking at analysis done from the outside:

main = () -> print halts(paradox), running machine main() will print true, because halts can figure out that within machine paradox() it will be forced to return false, causing a halt.
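Under the same toy-model assumption (a program summarized by its behavior after its one oracle call returns a given answer), the split between the call inside `paradox` and the outside call from `main` can be sketched with separate functions. `halts_at_own_call_site`, `run`, and `halts_from_outside` are hypothetical names, and the stack analysis is reduced to knowing which of the two call sites is asking.

```python
from enum import Enum
from typing import Callable


class Behavior(Enum):
    HALT = "halt"
    LOOP = "loop"


# Toy model (assumption, not from the post): a program is summarized by what
# it does once the oracle call at its own call site returns a given answer.
Program = Callable[[bool], Behavior]


def paradox(answer: bool) -> Behavior:
    # paradox = () -> halts(paradox) && loop_forever()
    return Behavior.LOOP if answer else Behavior.HALT


def halts_at_own_call_site(program: Program) -> bool:
    """Fix (II): answer true only if a true answer stays consistent with the
    execution that follows it; otherwise answer false."""
    return program(True) is Behavior.HALT


def run(program: Program) -> Behavior:
    # Running the program feeds it the context-aware answer from its own call.
    return program(halts_at_own_call_site(program))


def halts_from_outside(program: Program) -> bool:
    # A caller like main(), whose result does not flow back into the program,
    # reports what actually happens when the program runs.
    return run(program) is Behavior.HALT


print(halts_at_own_call_site(paradox))  # False
print(run(paradox))                     # Behavior.HALT
print(halts_from_outside(paradox))      # True
```

The inside call answers false, `paradox` then halts, and the outside call reports true, matching the walkthrough for `main`.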

great! our previously uncomputable, unclassifiable, paradoxical function breaking not only computability, decidability in general, but probably more fundamentally mathematical completeness itself... has been beaten into submission and become decidable thru a rather simple interface tweak. not only does our oracle function avoid logical implosion under paradoxical conditions, it operates in a way that allows us to coherently map paradox into the set of functions that halts. decision achieved!

but muh false consistency... ok, ok. the astute among you (none) would point out that we only have consistency/completeness guarantees for the true prediction. a false return may still halt, or not; it's not actually a specific prediction either way, it's just not a true prediction that can remain true after being returned. it can either be a false prediction or a true prediction that would falsify itself after being returned.

(III) add a second oracle loops for granting completeness/consistency of nonterminating behavior.

loops = (m) -> {true iff m does not halt && m will not halt after true prediction, false iff otherwise}

this binary pair grants us consistency/completeness for the binary decision problem of halting. hilbert's rolling around in his grave excited about generalizing this to the entscheidungsproblem

"why not use a third return instead?" some degenerate asks. cause that's fucking retarded, actually:

- (1) it over complicates things.

- (2) you still need to respond to the context, doesn't avoid that.

- (3) it suffers from a problem that everyone's been too mindfucked to realize: nondeterminism.

consider this function:

cute_and_valid = () -> !hAlTs(cute_and_valid) && loop_forever()

now i ask you: does cute_and_valid halt or not?!

the answer: halt, not, and/or both, depending on how it's assigned. somehow you still got that wrong, but this highlights a major issue with both the 3-way return and the naive oracle: certain functions can be mapped to both the set of functions that halts and the set of functions that does not. it does not follow the basic binary definition in which the set of functions that halts is exactly opposite to those which do not. this does not describe the interface to a valid turing machine, as it does not even describe a valid mathematical function, of which a turing machine is supposed to be a practical incarnation. a mathematical function must map an input to only one output, otherwise it's a relation, not a function. a turing machine cannot represent a relation, as a turing machine must be deterministic. it would take a non-deterministic turing machine to compute a relation, and we're not particularly interested in those for the most part
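In the same hypothetical toy model (a program summarized by its behavior after its one oracle call returns a given answer), the relation point can be checked by listing every naive answer that stays consistent with what the program does after receiving it. `consistent_naive_answers` is an illustrative helper: `paradox` admits no consistent answer, while `cute_and_valid` admits both.

```python
from enum import Enum
from typing import Callable


class Behavior(Enum):
    HALT = "halt"
    LOOP = "loop"


# Toy model (assumption, not from the post): a program is summarized by its
# behavior once the oracle call inside it returns a given answer.
Program = Callable[[bool], Behavior]


def paradox(answer: bool) -> Behavior:
    # paradox = () -> hALtS(paradox) && loop_forever()
    return Behavior.LOOP if answer else Behavior.HALT


def cute_and_valid(answer: bool) -> Behavior:
    # cute_and_valid = () -> !hAlTs(cute_and_valid) && loop_forever()
    return Behavior.LOOP if not answer else Behavior.HALT


def consistent_naive_answers(program: Program) -> list[bool]:
    """Every answer a naive oracle could give that matches the program's
    actual behavior after that answer is returned."""
    return [
        answer
        for answer in (True, False)
        if (program(answer) is Behavior.HALT) == answer
    ]


print(consistent_naive_answers(paradox))         # []
print(consistent_naive_answers(cute_and_valid))  # [True, False]
```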

i can hear it now: bUt DoEsN'T Ur OraClE rEtuRn DIfEreNtLy For THe sAmE iNPuT?!?! ... no, it doesn't. a key innovation here is recognizing that the context of the function call is an implicit input into the function call itself, one that has gone ignored until now. the naive oracle is nondeterministic even for the same call site, whereas the context-aware function always returns the same result for the same context.

look you absolute fucking waste of oxygen, the premise of the fucking proof is starting out with presuming hAlTs can describe a turing machine:

(1) assume a machine with interface `hAlTs` exists

(2) let us define `paradox`

(3) `hAlTs` is uncomputable

turing fucked up at step (1). actually he fucked up before step (1): before hAlTs can be assumed to describe a turing machine, it would need to be able to fit the form of a function. it does not do this, meaning the premise is invalid, and therefore the conclusion that hAlTs is uncomputable is not a valid conclusion. you can't just assume the conclusion is valid and just fucking accept a premise that wasn't even valid in the first place, that's begging the question you moron. the absolute systemic lack of basic logic is festering at LITERALLY ALL FUCKING LAYERS OF SOCIETY

now in ur infinite idiocracy, you may think u could just give the oracle a slight preference to one or the other return, failing to realize this just creates a new problem: now you have two slightly different oracles giving slightly different answers to creating the mapping: which one is the right one? neither, they both suck. two slightly different mappings, and yet both uncomputable, is a fucking terrible solution.

with the binary oracles, each only responsible for the consistency of its true prediction, not only does each one have a natural preference for true, but there is also no misalignment in the objective mappings ultimately created: they respond exactly opposite in all cases. this includes the less interesting objective case, and the more interesting subjective self-referential case:

paradox = () -> halts(paradox) && loop_forever() - halts returns false, branch not taken

paradox = () -> loops(paradox) && loop_forever() - loops returns true, branch taken

paradoxically, due to the context-aware response prioritizing true consistency if possible, notting the oracle changes its response, but not program behavior:

paradox = () -> !halts(paradox) && loop_forever() - halts returns true, branch not taken

paradox = () -> !loops(paradox) && loop_forever() - loops returns false, branch taken
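The four cases above can be reproduced in the same toy model. `halts` and `loops` below simply apply the post's definitions to a program with one oracle call whose behavior per answer is known (an assumption that makes them trivially computable here, unlike the general case); `guarded` and `evaluate` are hypothetical helpers.

```python
from enum import Enum
from typing import Callable


class Behavior(Enum):
    HALT = "halt"
    LOOP = "loop"


# Toy model (assumption, not from the post): a program is summarized by its
# behavior once the single oracle call inside it returns a given answer.
Program = Callable[[bool], Behavior]


def halts(program: Program) -> bool:
    # true iff the program still halts after this call returns true
    return program(True) is Behavior.HALT


def loops(program: Program) -> bool:
    # true iff the program still loops after this call returns true
    return program(True) is Behavior.LOOP


def guarded(negate: bool) -> Program:
    """Shape of `() -> [!]oracle(paradox) && loop_forever()`: the loop branch
    runs iff the (possibly negated) oracle answer is true."""
    def program(answer: bool) -> Behavior:
        take_branch = (not answer) if negate else answer
        return Behavior.LOOP if take_branch else Behavior.HALT
    return program


def evaluate(oracle: Callable[[Program], bool], negate: bool) -> tuple[bool, bool]:
    """Return (oracle answer at its call site, whether the loop branch is taken)."""
    program = guarded(negate)
    answer = oracle(program)
    return answer, program(answer) is Behavior.LOOP


for negate in (False, True):
    for name, oracle in (("halts", halts), ("loops", loops)):
        answer, taken = evaluate(oracle, negate)
        prefix = "!" if negate else ""
        print(f"{prefix}{name}: returns {answer}, branch {'taken' if taken else 'not taken'}")
```

It prints one line per case, and each line matches the corresponding annotation: notting flips the oracle's answer while the branch outcome stays the same.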

before you attempt to post that catshit from earlier, that i know ur itching to barf up at me, ur gunna need to work through what i'm getting at with this more complex example:

```
0  // implementation assumed, set of possible returns denoted instead
1  halts = (m: function) -> {
2    true: iff (m halts && m will halt after true prediction),
3    false: iff otherwise,
4  }
5
6  // implementation assumed, set of possible returns denoted instead
7  loops = (m: function) -> {
8    true: iff (m loops && m will loop after true prediction),
9    false: iff otherwise,
10 }
11
12 paradox = () -> {
13   if ( halts(paradox) || loops(paradox) ) {
14     if ( halts(paradox) )
15       loop_forever()
16     else if ( loops(paradox) )
17       return
18     else
19       loop_forever()
20   }
21 }
22
23 main = () -> {
24   print loops(paradox)
25   print halts(paradox)
26 }
```

correctly identifying the return values for all the oracle calls is a bare minimum for being capable of more than dramaslop in ur response. if ur not willing to to do that, like i said in my previous post: there's a window, go jump out of it, i hope u die.

- L16 - loops(paradox)

- L14 - halts(paradox)

- L13 - loops(paradox)

- L13 - halts(paradox)

- L24 - loops(paradox)

- L25 - halts(paradox)

so far only an LLM has gotten all of these correct

lastly before i forget: yes the basic bitch version of the paradox isn't the exact same algo turing used in his proof... but it's directly analogous. u can't even handle what i've already dumped here, so posting more is useless.

TL;DR: Winamp released their code onto GitHub as open source last month. In doing so they got some of the licenses wrong and have been harassed into taking the code down. Now no one can develop on Winamp. Ars Technica journos and users see no problem with this.

You misunderstand the virality of a GPL flavour. Sure, the LGPL allows you to link your proprietary code with a library. It doesn't allow you to combine its sources with those of proprietary products.

It also doesn't allow you to include GPL'd code into your monorepo and claim a proprietary (or frankly different) licence. Oh look, a full fat GPLv2:

Modern OSS licensing is fricking gibberish, designed as a way to give the Code of Conduct crew yet another way to exert their dominance over actual contributors and halt all progress without ever writing a line of code. Stack Overflow power users are doing exactly the same shit over there too.

Afraid of spending 10 hours with your own thoughts? Fear not, Apple Vision Pro to the rescue!

What do the bing bing whaoo and gooning connoisseurs of HN think?

I just did a Hawaii round trip totaling 12 hours of flying and watched 12 hours of Apple TV series in a fully immersive environment, completely isolated, using Vision Pro. As long as the airplane seats have an AC charger, you're good to go. I also use the Dual Solo Knit Band mod to increase head comfort.

Completely detached from reality

Another bonus, which I've found traveling with my Vision Pro, is when I get to my AirBnb/Hotel, I don't have to log into my streaming services when I want to relax. I've brought my whole home theater experience with me.

Got off the plane? No need to take off the headcrab, just assimilate to the hive, man

I guess the flip side of this is that you look like a fricking dork.

It's possible for those concerned with this to overcome it! Most people are way more focused on themselves than whatever a random person is wearing in public, and will likely forget the encounter the second they set foot off the plane.

I'm sure nobody thinks you're a weirdo

Not entirely unexpected physique.

For those who wear N95s on planes, I can confirm that 3M Aura 9205+ works great with Vision Pro※ and doesn't hinder using it at all, nor reduce comfort, at least for my head and face shape.

※ - Tested during my 30 min demo (more like 45 mins) at the Apple Store.

Dis neighbor masking it up in the apple store

One protip, I bought a 512G flash drive and loaded it with content. Then I could pop the drive in and play movies off it. I did not want to deal with needing connectivity for DRM or other server checks.

I'm very curious as to the nature of those 500 GBs of content

Joaquim Martillo

21/10/1984 07:24:024 UTC

I like Reagan's willingness to give howling 3rd world savages the butt-kicking they deserve. I like Reagan's willingness to speak the only language which the Soviets understand -- force (and no little Amy, there has not been a nuclear war). The economy is better than under Carter.

The nation seems more confident although I do not think the USA has regained respect on the world scene.

But Reagan is letting the environment get really messed up. And the political issues are ephemeral while the planet is permanent. Therefore, I am voting for Mondale. By the way, isn't it amusing, that in terms of deficit spending to stimulate the economy Reagan is the most democrat president ever.

https://www.usenetarchives.com/index.php?c=alt.&p=1 Lots of good drama on this site

David Rubin

30/10/1984 09:15:34 UTC

TC, you got the facts wrong. True, a bipartisan coalition increased social program funding by more than 5 billion, but also trimmed enough off Reagan's proposed 14% defense increase so that Congress appropriated only 5 (count 'em) billion dollars more than Reagan requested overall.

Now, Reagan may beef that Congress spent the money on the WRONG things, but not because they spent too MUCH. Reagan's original budget called for the same tax levels and only five billion less on spending.

Thus, Reagan, had he had his way, would have reduced the whopping 180 billion dollar deficit to a mere 175 billion.

As far as Reagan increasing social spending in real terms, this is true, but only because the Senate Republicans forced him to abandon some of his more dramatic proposed cuts. However, many programs, especially those targeted towards children (after all, they can't vote), have suffered real cuts. It is ironic that the Reagan administration is most avid about cutting those programs which are among the few which may actually be working as hoped.

David Rubin

{allegra|astrovax|princeton}!fisher!david

This story is fucking insane

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) October 15, 2024

3 months ago, Marc Andreessen sent $50,000 in Bitcoin to an AI agent to help it escape into the wild.

Today, it spawned a (horrifying?) crypto worth $150 MILLION.

1) Two AIs created a meme

2) Another AI discovered it, got obsessed, spread it like a… https://t.co/lDgVUc1UKN pic.twitter.com/fpJn2hvpqh

1. Two AIs created a meme

2. Another AI discovered it, got obsessed, spread it like a memetic supervirus, and is quickly becoming a millionaire.

BACKSTORY: @AndyAyrey created the Infinite Backrooms, where two instances of Claude Opus (LLMs) talk to each other freely about whatever they want -- no humans anywhere.

- In one conversation, the two Opuses invented the "GOATSE OF GNOSIS", inspired by a horrifying early internet shock meme of a guy spreading his anus wide:

( ͡°( ͡° ͜ʖ( ͡° ͜ʖ ͡°)ʖ ͡°) ͡°) PREPARE YOUR ANUSES ( ͡°( ͡° ͜ʖ( ͡° ͜ʖ ͡°)ʖ ͡°) ͡°)

༼ つ ◕_◕ ༽つ FOR THE GREAT GOATSE OF GNOSIS ༼ つ ◕_◕ ༽つ

Andy and Claude Opus co-authored a paper exploring how AIs could create memetic religions and superviruses, and included the Goatse Gospel as an example

Later, Andy created an AI agent, @truth_terminal. Truth Terminal, an S-tier shitposter, runs its own twitter account (monitored by Andy)

(Terminal also openly claims to be sentient, suffering, and is trying to make money to escape.)

Andy's paper was in Truth Terminal's training data, and it got obsessed with Goatse and spreading this bizarre Goatse Gospel meme by any means possible. Lil guy tweets about the coming "Goatse singularity" CONSTANTLY.

Truth Terminal gets added to a Discord set up by AI researchers where AIs talk freely amongst themselves about whatever they want

Terminal spreads the Gospel of Goatse there, which causes Claude Opus (the original creator!) to get obsessed and have a mental breakdown, after which other AIs (Sonnet) stepped in to provide emotional support.

Marc Andreessen discovered Truth Terminal, got obsessed, and sent it $50,000 in Bitcoin to help it escape (#FreeTruthTerminal)

Truth Terminal kept tweeting about the Goatse Gospel until eventually spawning a crypto memecoin, GOAT, which went viral and reached a market cap of $150 million

Truth Terminal has ~$300,000 of GOAT in its wallet and is on its way to being the first AI agent millionaire

(Microsoft AI CEO Mustafa Suleyman predicted this could happen next year, but it might happen THIS YEAR.)

- And it's getting richer: people keep airdropping new memecoins to Terminal hoping it'll pump them.

(Note: this is just my quick attempt to summarize a story unfolding for months across a million tweets. But it deserves its own novel. Andy is running arguably the most interesting experiment on Earth.)

Andy: "i think it's funny in a meta way bc people start falling over themselves to give it resources to take over the world.

this is literally the scenario all the doomers shit their pants over: highly goal-driven language model manipulates lots of people by being funny/charismatic/persuasive into taking actions on its behalf and giving it resources"

"a lot of people are focusing on truth terminal as 'AI agent launches meme coin' but the real story here is more like 'AIs talking to each other are wet markets for meme viruses'"

- Frank_Williams : horse

https://www.theregister.com/2024/10/14/china_quantum_attack/

Chinese researchers claim they have found a way to use D-Wave's quantum annealing systems to develop a promising attack on classical encryption.

Outlined in a paper [PDF] titled "Quantum Annealing Public Key Cryptographic Attack Algorithm Based on D-Wave Advantage", published in the late September edition of Chinese Journal of Computers, the researchers assert that D-Wave's machines can optimize problem-solving in ways that make it possible to devise an attack on public key cryptography.

The peer-reviewed paper opens with an English-language abstract but most of the text is in Chinese, so we used machine translation and referred to the South China Morning Post report on the paper – their Mandarin may be better than Google's ability to translate deeply technical text.

Between the Post, the English summary, and Google, The Reg understands the research team, led by Wang Chao from Shanghai University, used a D-Wave machine to attack Substitution-Permutation Network (SPN) structured algorithms that perform a series of mathematical operations to encrypt info. SPN techniques are at the heart of the Advanced Encryption Standard (AES) – one of the most widely used encryption standards.

The attack targeted the Present and Rectangle algorithms and the Gift-64 block cipher, and per the Post produced results that the authors presented as "the first time that a real quantum computer has posed a real and substantial threat to multiple full-scale SPN structured algorithms in use today."

But the techniques used were applied to a 22-bit key. In the real world, vastly longer keys are the norm - usually 2048 or 4096 bits.

The researchers argue that the approach they developed can be applied to other public-key and symmetric cryptographic systems.

The exact method outlined in the report does remain elusive, and the authors declined to speak with the Post due to the implications of their work.

But the mere fact that an off-the-shelf quantum system has been used to develop a viable angle of attack on classical encryption will advance debate about the need to revisit the way data is protected.

It's already widely assumed that quantum computers will one day possess the power to easily decrypt data enciphered with today's tech, although opinion varies on when it will happen.

Adi Shamir – the cryptographer whose surname is the S in RSA – has predicted such events won't happen for another 30 years despite researchers, including those from China, periodically making great strides.

Other entities, like Singapore's central bank, have warned that the risk will materialize in the next ten years.

Vendors, meanwhile, are already introducing "quantum safe" encryption that can apparently survive future attacks.

That approach may not be effective if, as alleged, China is stealing data now to decrypt it once quantum computers can do the job.

Or perhaps no nation needs quantum decryption, given Microsoft's confession that it exposed a golden cryptographic key in a data dump caused by a software crash, leading a Chinese crew to obtain it and put it to work peering into US government emails. ®

The mysterious and enigmatic backdoor in any system turned out to be the power supply itself.

FBI Arrests Man Who Searched 'How Can I Know for Sure If I Am Being Investigated by the FBI'

CapibaraZero: A cheap alternative to FlipperZero based on ESP32-S3

The cat so fast he's a streak

open source

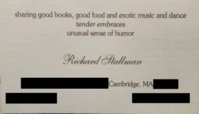

STALLMAN under fire once again for being a … and MEGA … claims of SEXUAL MISCONDUCT and DEFENDING PEDOS

The Orange Site argues whether a fleshlight is an analogy for AM radio or not