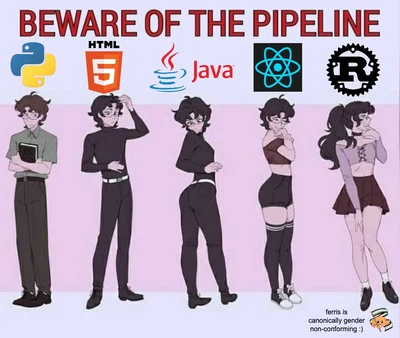

sorry if i broke things, not sorry. i need to finish the rewrite of rdrama.net's frontend someday because it's shit to theme

trying to make it look more like hackernews... how did i do?

ngl I don’t even really know what a graphics card is, but this was a very fun article with this nerd just btfoing other nerds

warning: long

- collectijism: If you actually read this post, you should visit a doctor to up your autism medicine

A whole new world of AI awaits

Imagine being 5 years old, dreaming of new adventures for Pikachu and Aladdin, and turning those tales into a cartoon right in front of your eyes... (Perhaps even with you as the main character!) What kinds of new storybook experiences could GPT-3 and DALL-E enable?

My own kindergartener, Noah, loves new bedtime stories, so let's find out.

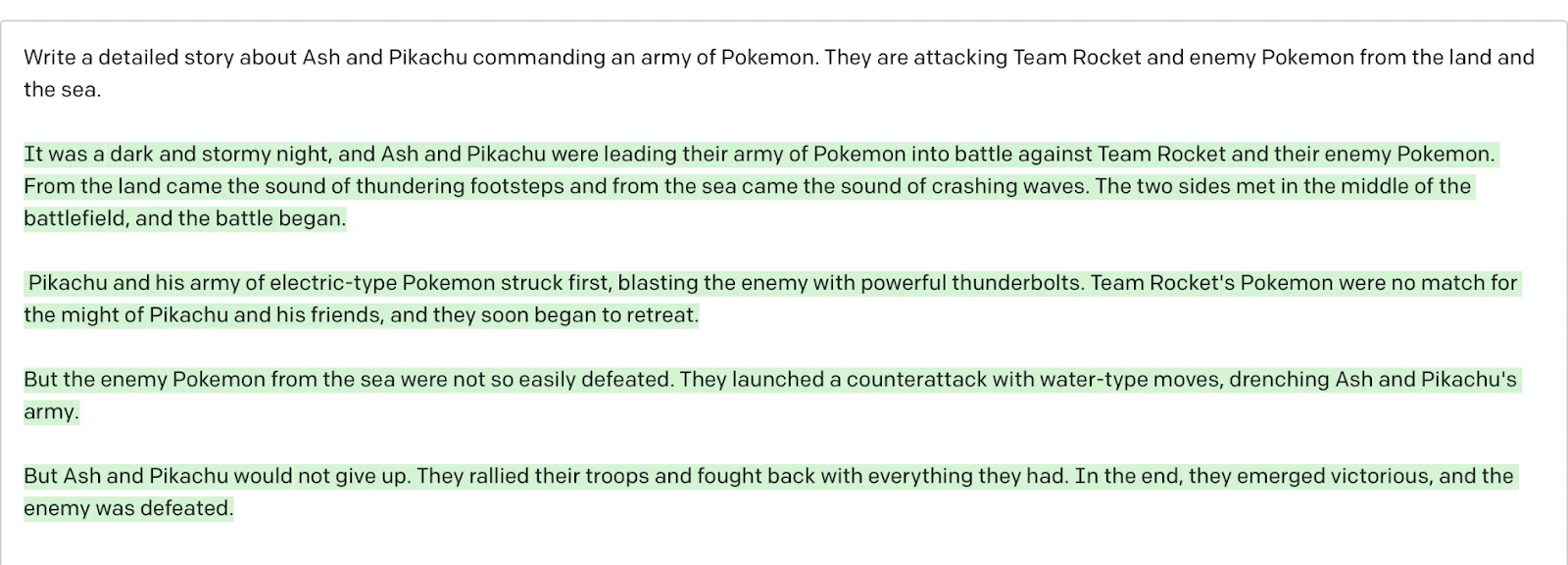

GPT-3 Story Generation

First, I asked Noah what story he wanted. In his slightly paraphrased words: "I want a story about Ash and Pikachu commanding an army of Pokemon. They're attacking Team Rocket and enemy Pokemon from the land and ocean."

I then asked GPT-3 to flesh out the story:

GPT-3 fleshes out a story about Ash and Pikachu vs. Team Rocket

It was a dark and stormy night, and Ash and Pikachu were leading their army of Pokemon into battle against Team Rocket and their enemy Pokemon. From the land came the sound of thundering footsteps and from the sea came the sound of crashing waves. The two sides met in the middle of the battlefield, and the battle began.

Pikachu and his army of electric-type Pokemon struck first, blasting the enemy with powerful thunderbolts. Team Rocket's Pokemon were no match for the might of Pikachu and his friends, and they soon began to retreat.

But the enemy Pokemon from the sea were not so easily defeated. They launched a counterattack with water-type moves, drenching Ash and Pikachu's army.

But Ash and Pikachu would not give up. They rallied their troops and fought back with everything they had. In the end, they emerged victorious, and the enemy was defeated.

Roald Dahl, watch out.

DALL-E Illustrations

Next, DALL-E generated visuals for the story. Inputting the raw sentences themselves didn't work so well, so I tweaked them to improve the illustrations.

Here's the storybook!

It was a dark and stormy night, and Ash and Pikachu were leading their army of Pokemon into battle against Team Rocket and their enemy Pokemon.

From the land came the sound of thundering footsteps and from the sea came the sound of crashing waves.

The two sides met in the middle of the battlefield, and the battle began.

Pikachu and his army of electric-type Pokemon struck first, blasting the enemy with powerful thunderbolts.

Team Rocket's Pokemon were no match for the might of Pikachu and his friends, and they soon began to retreat.

But the enemy Pokemon from the sea were not so easily defeated. They launched a counterattack with water-type moves, drenching Ash and Pikachu's army.

But Ash and Pikachu would not give up. They rallied their troops and fought back with everything they had.

In the end, they emerged victorious, and the enemy was defeated.

What a time to be a child.

Funny how this article was getting downmarseyd on /r/technology lol.

The Federal Bureau of Investigation (FBI) warns of increasing complaints that cybercriminals are using Americans' stolen Personally Identifiable Information (PII) and deepfakes to apply for remote work positions.

Deepfakes (digital content like images, video, or audio) are sometimes generated using artificial intelligence (AI) or machine learning (ML) technologies and are difficult to distinguish from authentic materials.

Such synthetic content has been previously used to spread fake news and create revenge porn, but the lack of ethical limitations regarding their use has always been a source of controversy and concern.

The public service announcement, published on the FBI's Internet Crime Complaint Center (IC3) today, adds that the deepfakes used to apply for positions in online interviews include convincingly altered videos or images.

The targeted remote jobs include positions in the tech field that would allow the malicious actors to gain access to company and customer confidential information after being hired.

"The remote work or work-from-home positions identified in these reports include information technology and computer programming, database, and software-related job functions," the FBI said.

"Notably, some reported positions include access to customer PII, financial data, corporate IT databases and/or proprietary information."

Video deepfakes are easier to detect

While some of the deepfake recordings used are convincing enough, others can be easily detected due to various sync mismatches, mainly involving the spoofed voices of the applicants.

"Complaints report the use of voice spoofing, or potentially voice deepfakes, during online interviews of the potential applicants," the US federal law enforcement agency added.

"In these interviews, the actions and lip movement of the person seen interviewed on-camera do not completely coordinate with the audio of the person speaking. At times, actions such as coughing, sneezing, or other auditory actions are not aligned with what is presented visually."

Some victims who reported to the FBI that their stolen PII was used to apply for a remote job also said that information from pre-employment background checks was used with other applicants' profiles.

The FBI asked victims (including companies who have received deepfakes during the interview process) to report this activity via the IC3 platform and to include information that would help identify the crooks behind the attempts (e.g., IP or email addresses, phone numbers, or names).

In March 2021, the FBI also warned in a Private Industry Notification (PIN) [PDF] that deepfakes (including high-quality generated or manipulated video, images, text, or audio) are getting more sophisticated by the day and will likely be leveraged broadly by foreign adversaries in "cyber and foreign influence operations."

Europol also warned in April 2022 that deepfakes could soon become a tool that cybercrime organizations use on a regular basis for CEO fraud, evidence tampering, and the creation of non-consensual pornography.

The first vids of active drone swarm testing that I am aware of are from 2017 (https://youtube.com/watch?v=DjUdVxJH6yI). It's been five years since then, and they are now capable of avoiding crashes even in dense forests.

In a similar vein, how long before military squads have transport robots or additional robot troops? The LS3 robot was tested, but the project was shelved by 2015 (https://en.wikipedia.org/wiki/Legged_Squad_Support_System). Has there been any project since then that is still viable for a similar role, or have they given up on robot-animal equivalents for military use? As far as I am aware, the humanoid robots got stuck at the ad level. Drones will likely fill that niche.

US soldier exosuit development has made genuine advances over the years, and I'd bet my right nut that their specialized troops are actively using exosuits off the record. I believe this because industrial workers in Japan were already using exosuits by 2017.

In a similar vein, I think the biomechanical insect project likely succeeded but had to be shelved because the level of biodiversity loss around human habitations made the insects unviable. Instead, they filled that niche at the government level with mandatory chips for dogs.

In addition, fun fact: the F-35B jet can take off vertically.

Night vision is already science-fiction grade, able to give you vision as clear as day.

So, after sharing all these links, I come to my main point: what do you think will be the next big warfare upgrade in the next one or two decades, and how long will the stuff that's in prototype right now take to come out? The more specifics with links, the better.

Starting July 13, Valorant will begin listening and recording in-game voice communication with the goal of training a language model to help it identify toxic chat. This system will only work in North American/English-only servers. The goal is to launch the language model later this year in an effort to clamp down on toxicity in the first-person shooter.

Like in any first person shooter that lets players talk to each other, the voice chat in Riot Games’ Valorant can be toxic. It’s also incredibly hard to moderate. When someone drops a racial slur in text chat, a clear log is maintained that mods can look through later. But the processing and storage power required to do the same for voice chat just isn’t possible. “Voice chat abuse is significantly harder to detect compared to text (and often involves a more manual process),” Riot Games said in a February 2022 blog post.

Riot first indicated it would do something about abusive voice chat in February 2022. “Last year Riot updated its Privacy Notice and Terms of Service to allow us to record and evaluate voice comms when a report for disruptive behavior is submitted, starting with Valorant,” it said at the time. “Please note that this will be an initial attempt at piloting a new idea leveraging brand new tech that is being developed, so the feature may take some time to bake and become an effective tool to use in our arsenal. We’ll update you with concrete plans about how it’ll work well before we start collecting voice data in any form.”

Now we know what that brand-new tech is: some kind of language model that automatically detects toxic voice chat and stores it for later evaluation. The updated terms of service applied to all of Riot’s games, but it said its current plan was to use Valorant to test the software solution before rolling it out to other games.

The ability to detect keywords from live conversations is not new, however. Federal and state governments have been using similar systems to monitor phone calls from prisons and jails for at least several years—sometimes with the ability to automatically disconnect and report calls when certain words or phrases are detected.

Riot Games did not share details of the language model and did not immediately respond to Motherboard’s request for comment. According to a post announcing the training of the language model, this is all part of “a larger effort to combat disruptive behavior,” that will allow Riot Games to “record and evaluate in-game voice communications when a report for that type of behavior is submitted.”

The updated terms of service had some more specifics. “When a player submits a report for disruptive or offensive behavior in voice comms, the relevant audio data will be stored in your account’s registered region and evaluated to see if our behavior agreement was violated,” the TOS said. “If a violation is detected, we’ll take action. After the data has been made available to the player in violation (and is no longer needed for reviews) the data will be deleted, similar to how we currently handle text-based chat reports. If no violation is detected, or if no report is filed in a timely manner, the data will be deleted.”

Riot Games said it would only “monitor” voice chats if a report had been submitted. “We won’t actively monitor your live game comms. We’ll only potentially listen to and review voice logs when disruptive voice behavior is reported,” it said in a Q&A about the changes. That is still monitoring, though, even if it’s not active. What this probably means is that a human won’t listen to it unless there’s a report—but that doesn’t mean a computer isn’t always listening.

The only way to avoid this, Riot Games said, was to not use its in-game voice chat systems. Monitoring starts on July 13. “Voice evaluation during this period will not be used for disruptive behavior reports,” Riot Games said in the post announcing the project. “This is brand new tech and there will for sure be growing pains. But the promise of a safer and more inclusive environment for everyone who chooses to play is worth it.”

Potential drama? We all know GME apes still hold a grudge against Robinhood.

Orange site discuss: https://news.ycombinator.com/item?id=31881238

Random Brazil sub: https://old.reddit.com/r/brasilivre/comments/vlam2d/the_fall_of_reddit_why_its_quickly_declining_into/

Reddit is dead.

At least artistically and creatively speaking.

Reddit started as a bastion of independent thought, but it has slowly devolved into a den of groupthink, censorship, and corporate greed.

“It’s true, both the government and private companies can censor stuff. But private companies are a little bit scarier because they have no constitution to answer to, they’re not elected really: all the protections we’ve built up against government tyranny don’t exist for corporate tyranny.”

— Aaron Swartz, co-Founder of Reddit

There are three fundamental problems with Reddit:

1. Censorship

2. Moderator Abuse

3. Corporate Greed

But first, you should understand that the history of Reddit doomed it from the start.

The Secret History of Reddit

Reddit was launched in June 2005 by two 22-year-old graduates from the University of Virginia, Steve Huffman and Alexis Ohanian. The site was so small that the two co-founders had to spam links just to make Reddit seem active.

Later that year the Reddit team made arguably the most important decision of their lives: they hired a new co-founder, Aaron Swartz.

If you don’t know who Aaron Swartz was, he was a young prodigy and computer genius who, among other things, helped create RSS.

He was also an outspoken activist for free speech and open information, which made him a lot of enemies in high places.

Eventually, Aaron left Reddit after it was bought by Condé Nast (owner of Wired magazine), but this is when he became a complete revolutionary.

Aaron became something of a WikiLeaks-style journalist, leaking high-level secrets against corporate power. He released countless documents, the most damaging of which showed that law professors at Stanford were receiving lobbying money from oil companies such as Exxon Mobil.

Shortly after, the FBI began monitoring Aaron Swartz and he was arrested for downloading academic journals from MIT in an attempt to make them freely available online.

They threw the book at Aaron, charging him with 13 felonies and threatening him with over a million dollars in fines and a 35-year prison sentence. This was seen as an act of pure revenge by the government, and because of it, Aaron Swartz took his own life at the age of 26.

“I don’t want to be happy. I just want to change the world.” — Aaron Swartz

And you know what Reddit did? They scrubbed Aaron Swartz’s name from their history. If you go to the “about” page on Reddit, it makes no mention of him whatsoever.

Aaron Swartz should be a martyr; instead, he’s been erased.

It Got Worse: Censorship

After the death of Aaron Swartz, things only got worse for Reddit.

Newly appointed CEO Ellen Pao made an announcement, and I quote, that “Reddit is not a platform for free speech.”

This was the first step in what would be mass censorship on the platform.

In the years that followed Reddit banned over 7000 subreddits left and right in a never-ending stream of censorship. But the most controversial censorship occurred after the Orlando nightclub shooting.

After the shooting, the subreddit /r/news became a hub for people to discuss the event and share news articles. However, the mods of /r/news had a very different idea.

They began mass-deleting any posts that criticized Islam or mentioned the shooter’s motive of radical Islamic terrorism. They also banned anyone who spoke out against this censorship. Mods became power-hungry dictators, erasing anyone who dared to challenge them.

Reddit’s Mods Are Mall Cops Slowly Killing the Platform

Moderators on Reddit are like hall monitors who bust you for being late two seconds after the bell rang. They are the kids that ask for more homework. They’re petty, they’re annoying, and they have too much power.

The mod system is completely volunteer-based which means that anyone can become a mod without any qualifications.

One of my favorite posts on Reddit had this to say about moderators:

“Mods are basically unpaid mall cops for reddit… except even mall cops know they are a joke. I think Reddit counts on the fact there are enough lonely losers out there who will moderate the site for free in exchange for the illusion of authority. These are shameful, powerless, and deeply troubled people looking to exert a measure of power anyway they can — the same kind of people who would become abusive police officers and border agents if they weren’t already so monstrously overweight.”

And because moderators are volunteer-based, they can be bribed. In fact, there have been numerous cases of mods being bribed by companies to censor certain topics or ban competing subreddits.

(Bribery taking place here, here, and here.)

Here is a short list of the worst, most corruptible mods on Reddit:

/u/awkwardtheturtle (mod of multiple subreddits) was caught pinning his own posts to the top of subreddits for popularity and called all of his critics incels for no apparent reason.

/u/gallowboob (mod of /r/relationship_advice) would shill his friend’s marketing companies on the front page and ban any account that criticized him.

And Finally, Corporate Greed

I only recently found out that Ghislaine Maxwell, longtime associate of Jeffrey Epstein, allegedly ran one of the most powerful Reddit accounts on the website. In fact, it was the eighth-most popular account by karma on Reddit.

I won’t get into the implications of that — as it could be an article on its own — but it's only one case of elites having massive power on Reddit.

The bigger issue is that Reddit has several competing corporate interests.

One of them is a Chinese tech giant called Tencent, which made a $150 million investment in Reddit. Tencent is the world’s biggest video game company and is notorious for selling its users’ information.

Another big investor is Sequoia Capital, which was found earlier this year to be [investing in corrupt companies responsible for fraudulent practices](https://www.entrepreneur.com/article/425007).

All of these investments have one thing in common: they’ve made the website worse for users. Now — just as I wrote about YouTube — Reddit is tailored for a better corporate experience, not a better user experience.

Final Thoughts

Reddit was the first social media platform I fell in love with. It’s where I found my start as a writer and it’s helped me procrastinate many late-night essays.

But it’s time to go.

It’s become a shell of its former self and something that Aaron Swartz would not be proud of. And even though Reddit is pretty much a corporate propaganda machine, the users still think it’s a secret club for intellectual dynamos that “fricking love science.”

No matter what you believe in, wisdom isn’t achieved living inside a bubble of utopian ideals.

Although some of my favorite online communities are on Reddit like /r/FoodNYC or /r/OnePunchMan, for the most part, I think it’s time to move on.

with Open-Source Face Comparison Cowtools

and the Pentagon isn't happy
