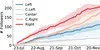

Growth in followers

The present results suggest that early choices about which sources to follow impact the experiences of social media users. This is consistent with previous studies4,5. But beyond those initial actions, drifter behaviors are designed to be neutral with respect to partisan content and users. Therefore the partisan-dependent differences in their experiences and actions can be attributed to their interactions with users and information mediated by the social media platform — they reflect biases of the online information ecosystem.

Drifters with Right-wing initial friends are gradually embedded into dense and homogeneous networks where they are constantly exposed to Right-leaning content. They even start to spread Right-leaning content themselves. Such online feedback loops reinforcing group identity may lead to radicalization17, especially in conjunction with social and cognitive biases like in-/out-group bias and group polarization. The social network communities of the other drifters are less dense and partisan.

We selected popular news sources across the political spectrum as initial friends of the drifters. There are several possible confounding factors stemming from our choice of these accounts: their influence as measured by the number of followers, their popularity among users with a similar ideology, their activity in terms of tweets, and so on. For example, @FoxNews was popular but inactive at the time of the experiment. Furthermore, these quantities vary greatly both within and across ideological groups (Supplementary “Methods”). While it is impossible to control for all of these factors with a limited number of drifters, we checked for a few possible confounding factors. We did not find a significant correlation between initial friend influence or popularity measures and drifter ego network transitivity. We also found that the influence of an initial friend is not correlated with the drifter influence. However, the popularity of an initial friend among sources with similar political bias is a confounding factor for drifter influence. Online influence is therefore affected by the echo-chamber characteristics of the social network, which are correlated with partisanship, especially on the political Right20,48. In summary, drifters following more partisan news sources receive more politically aligned followers, becoming embedded in denser echo chambers and gaining influence within those partisan communities.

The fact that Right-leaning drifters are exposed to considerably more low-credibility content than other groups is in line with previous findings that conservative users are more likely to engage with misinformation on social media20,49. Our experiment suggests that the ecosystem can lead completely unbiased agents to this condition; therefore, it is not necessary to impute the vulnerability to characteristics of individual social media users. Other mechanisms that may contribute to the exposure to low-credibility content observed for the drifters initialized with Right-leaning sources involve the actions of neighbor accounts (friends and followers) in the Right-leaning groups, including the inauthentic accounts that target these groups.

Although Breitbart News was not labeled as a low-credibility source in our analysis, our finding might still be biased in reflecting this source’s low credibility in addition to its partisan nature. However, @BreitbartNews is one of the most popular conservative news sources on Twitter (Supplementary Table 1). While further experiments may corroborate our findings using alternative sources as initial friends, attempting to factor out the correlation between conservative leanings and vulnerability to misinformation20,49 may yield a less-representative sample of politically active accounts.

While most drifters are embedded in clustered and homogeneous network communities, the echo chambers of conservative accounts grow especially dense and include a larger portion of politically active accounts. Social bots also seem to play an important role in the partisan social networks; the drifters, especially the Right-leaning ones, end up following a lot of them. Since bots also amplify the spread of low-credibility news33, this may help explain the prevalent exposure of Right-leaning drifters to low-credibility sources. Drifters initialized with far-Left sources do gain more followers and follow more bots compared with the Center group. However, this occurs in a way that is less emphatic and vulnerable to low-credibility content compared to the Right and Center-Right groups. Nevertheless, our results are consistent with findings that partisanship on both sides of the political spectrum increases the vulnerability to manipulation by social bots50.

Twitter has been accused of favoring liberal content and users. We examined the possible bias in Twitter’s news feed, i.e., whether the content to which a user is exposed in the home timeline is selected in a way that amplifies or suppresses certain political content produced by friends. Our results suggest this is not the case: in general, the drifters receive content that is closely aligned with whatever their friends produce. A limitation of this analysis is that it is based on limited sets of recent tweets from drifter home timelines (“Methods”). The exact posts to which Twitter users are exposed in their news feeds might differ due to the recommendation algorithm, which is not available via Twitter’s programmatic interface.

Despite the lack of evidence of political bias in the news feed, drifters that start with Left-leaning sources shift toward the Right during the course of the experiment, sharing and being exposed to more moderate content. Drifters that start with Right-leaning sources do not experience a similar exposure to moderate information and produce increasingly partisan content. These results are consistent with observations that Right-leaning bots do a better job at influencing users51.

In summary, our experiment demonstrates that even if a platform has no partisan bias, the social networks and activities of its users may still create an environment in which unbiased agents end up in echo chambers with constant exposure to partisan, inauthentic, and misleading content. In addition, we observe a net bias whereby the drifters are drawn toward the political Right. On the conservative side, they tend to receive more followers, find themselves in denser communities, follow more automated accounts, and are exposed to more low-credibility content. Users have to make extra efforts to moderate the content they consume and the social ties they form in order to counter these currents, and create a healthy and balanced online experience.

Given the political neutrality of the news feed curation, we find no evidence for attributing the conservative bias of the information ecosystem to intentional interference by the platform. The bias can be explained by the use (and abuse) of the platform by its users, and possibly by unintended effects of the policies that govern this use: neutral algorithms do not necessarily yield neutral outcomes. For example, Twitter may remove or demote information from low-credibility sources and/or inauthentic accounts, or suspend accounts that violate its terms. To the extent that such content or users tend to be partisan, the net result would be a bias toward the Center. How to design mechanisms capable of mitigating emergent biases in online information ecosystems is a key question that remains open for debate.

PSA: Yandex is a multi-billion, Moscow-based company that finances the Russian war of aggression in Ukraine and is one of the Kremlin's main tools for spreading propaganda and suppressing dissent.

YaLM 100B is a GPT-like neural network for generating and processing text. It can be used freely by developers and researchers from all over the world.

The model leverages 100 billion parameters. It took 65 days to train the model on a cluster of 800 A100 graphics cards and 1.7 TB of online texts, books, and countless other sources in both English and Russian.

Training details and best practices for acceleration and stabilization can be found in articles on Medium (English) and Habr (Russian).

Make sure to have 200GB of free disk space before downloading weights. The model (code is based on microsoft/DeepSpeedExamples/Megatron-LM-v1.1.5-ZeRO3) is supposed to run on multiple GPUs with tensor parallelism. It was tested on 4 (A100 80g) and 8 (V100 32g) GPUs, but is able to work with different configurations with ≈200GB of GPU memory in total which divide weight dimensions correctly (e.g. 16, 64, 128).
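As a rough sanity check on those figures, the sketch below estimates the weight size and compares it with the two tested GPU setups. It assumes the weights are stored as 16-bit floats (2 bytes per parameter), which the text above does not state; the function names are illustrative only.

```python
# Back-of-the-envelope estimate for a 100-billion-parameter model.
# Assumption (not stated in the README excerpt): 2 bytes per parameter (fp16).
PARAMS = 100_000_000_000
BYTES_PER_PARAM = 2  # assumed fp16 storage


def weights_gb(params: int = PARAMS, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Raw weight size in decimal gigabytes."""
    return params * bytes_per_param / 1e9


def total_gpu_memory_gb(num_gpus: int, gb_per_gpu: int) -> int:
    """Aggregate memory across identical GPUs."""
    return num_gpus * gb_per_gpu


if __name__ == "__main__":
    print(f"weights: {weights_gb():.0f} GB")
    # The two configurations named in the text: 4 x A100 80 GB and 8 x V100 32 GB.
    for num_gpus, gb_per_gpu in [(4, 80), (8, 32)]:
        total = total_gpu_memory_gb(num_gpus, gb_per_gpu)
        print(f"{num_gpus} x {gb_per_gpu} GB = {total} GB, headroom {total - weights_gb():.0f} GB")
```

Under that assumption the weights alone come to about 200 GB, which matches both the disk-space and the total-GPU-memory figures quoted above.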

The NSW government will introduce laws to confiscate unexplained wealth from criminal gangs and ban the use of encrypted devices as part of long-awaited reforms to combat money laundering and organised crime.

A snap cabinet meeting late on Wednesday night agreed to the new measures, designed to cripple the finances of crime networks, stopping criminals from profiting from their actions and incapacitating them financially.

The new powers allow for the confiscation of unlawfully acquired assets of major convicted drug traffickers and expand powers to stop and search for unexplained wealth.

Senior ministers have been working on new laws to deal with proceeds of crime and unexplained wealth since last year, when secret briefings from top-ranking police warned that organised crime in NSW was out of control.

Police Assistant Commissioner Stuart Smith warned cabinet ministers and senior public servants in December that organised crime was rampant and anti-organised crime laws in NSW were abysmal.

Days after that briefing, Premier Dominic Perrottet was also provided with the same damning update from Smith, a senior government source with knowledge of the conversations has confirmed.

That month, Attorney-General Mark Speakman and then police minister David Elliott began working on laws to tackle unexplained wealth. The work has continued under new Police Minister Paul Toole.

Perrottet said the new powers would help police infiltrate criminal networks.

“Organised crime is all about drug supply and money – and to truly shut it down we need to shut down the flow of dollars that fuels it,” Perrottet said.

“These reforms will better arm law enforcement agencies with the powers they need to confiscate unexplained wealth and create new offences and tougher penalties for those seeking to launder money derived from criminal activity.

“Organised crime and the technologies that criminals use are always changing and evolving, and these reforms will put our state in the strongest position to deal with these insidious crimes.”

Deputy Premier and Police Minister Paul Toole said “organised crime in this state is on notice”.

“If you think you can hide the ill-gotten gains of crime, you are wrong. If you think you can avoid detection by using encrypted devices, you are wrong,” Toole said.

“We know these encrypted devices are being used to plan serious crimes like drugs and firearms smuggling, money laundering and even murder.

“These reforms will make it an offence to possess these kinds of devices and allow us to better target high-risk individuals from using them to orchestrate crime.”

Legislation will be introduced when parliament returns for the spring session.

Crypto Twitter! We are a small group of crypto enthusiasts who MEV'd 200 tickets to @EthCC. If you're interested in hearing our story of how @EthCC and Jerome (@jdetychey) invalidated our tickets and stole our funds, read our article below and read our 🧵 https://t.co/apHdiikUg3 pic.twitter.com/QT6hw246wr

— 0x84003239 (@0x84003239) June 22, 2022

- Crypto dweebs scalp 200 tickets to one of the main Efferium conferences
- Crypto dweebs make a post bragging about how they did it
- Conference organizers void the scalped tickets and pocket the cash to enjoy at the casino tomorrow night
- Crypto dweebs go into a full-blown sneedout and threaten futile legal action

some of these bugs are fricking funny though. I didn't even know the skeletons had a high-framerate animation

Poll

write down there the time it takes to load the shit (after the second load, to allow it to build up the cache)

Another link of interest: https://wiki.linux-wiiu.org/wiki/Main_Page

Sarah Aguasvivas Manzano (PhDCompSci'22) and her team’s latest work has real-world, practical use. Wearable technology is being explored in the space industry for astronauts and big tech companies are eager for improvements to the inter-connected watches and other personal devices that increasingly support and streamline modern living.

But the CU Boulder team decided to make their own project in the wearables space just a little more … fabulous.

The team, called Escamatrónicas, is composed of Aguasvivas Manzano along with rising junior LeeLee James and rising seniors Nathan Straub and Zixi Yuan. Together they have designed a garment for drag queens that features neural networks and other creative technology. The idea is to have the garment respond and react to gestures when the queens perform with them on stage. James said this garment in particular is supposed to be like eye candy in terms of visual components, with sequencing scales that move based on the position of the person wearing it.

The team’s work is funded by the Undergraduate Research Opportunities Program (UROP) on campus and they are based out of the Correll Lab within the Department of Computer Science. Funding for the research also came from the U.S. Air Force Office of Scientific Research.

Aguasvivas Manzano’s research interests include soft robotics – focusing on the design, control and fabrication of robots composed of compliant material, such as hydrogels, fabrics, composites and more. She also has a particular interest in the deployment of learning, modeling and control of soft robots that simply cannot accommodate large and powerful computers in their physical design while still being effective. A light garment like this one for example – that reacts to the physical environment in real-time – requires a lot of computation and would previously have required a lot of hardware space. However, that aspect is no longer a limiting factor in the overall design and in this case the team created a compiler to translate forward inference computation for neural networks into practice to address those limitations.

“Neural networks are computational models loosely patterned after the human brain and they are now very ubiquitous,” she said. “Here I created a compiler that allowed us to automate the code-writing process. So now we can write code that can be interpreted in microcontrollers – tiny little computers that don’t have much memory or take up much space.”

James’ personal work as a technologically-empowered drag queen – documented on her YouTube Channel – gave the team inspiration to make a project that was definitely outside of the box. When it is finished there will be various sensor networks placed throughout the design, including at the hips, one at each knee, the shoulders and the sternum, Aguasvivas Manzano said.

“There’s a lot of design fabrication and sewing, but also programming as well as using our compiler (nn4mc) to offload the neural network,” Aguasvivas Manzano said.

As they move forward with the project, the team will continue to offload more efficient computation onto the garment so that the computer inside does not consume as much power. Doing so will improve usability, functionality and battery life.

The undergraduates are required to write a paper about their work for UROP, but Aguasvivas Manzano said the team also intends to turn the project into a white paper and add it to arXiv, since the project showed both creative skill and high-quality technical know-how.

Aguasvivas Manzano finished her PhD this spring and will work for Apple. She said that the team could not have done this type of research without the support they received from the College of Engineering and Applied Sciences, the Correll Lab, and the Intelligent Robotics Lab.

“In the five years I have been here, I could not have been happier with the culture – particularly in the computer science department. It was really refreshing compared to other universities,” she said. “My advisor (Associate Professor Nikolaus Correll) and the university itself enabled creative thinking – fostering out-of-the-box thinking that I haven’t seen in other institutions.”

James echoed similar sentiments, saying that everything she has wanted to do for research has not only been allowed, but encouraged.

“The most impactful thing for me was having Sarah for this process,” she said. “I have access to all these really amazing people and all these resources, but not only that, I feel totally free to utilize any of those people or tools and research to do anything and everything that comes to my mind.”

Introduction

Today, June 21, 2022, Cloudflare suffered an outage that affected traffic in 19 of our data centers. Unfortunately, these 19 locations handle a significant proportion of our global traffic. This outage was caused by a change that was part of a long-running project to increase resilience in our busiest locations. A change to the network configuration in those locations caused an outage which started at 06:27 UTC. At 06:58 UTC the first data center was brought back online and by 07:42 UTC all data centers were online and working correctly.

Depending on your location in the world you may have been unable to access websites and services that rely on Cloudflare. In other locations, Cloudflare continued to operate normally.

We are very sorry for this outage. This was our error and not the result of an attack or malicious activity.

Background

Over the last 18 months, Cloudflare has been working to convert all of our busiest locations to a more flexible and resilient architecture. In this time, we’ve converted 19 of our data centers to this architecture, internally called Multi-Colo PoP (MCP): Amsterdam, Atlanta, Ashburn, Chicago, Frankfurt, London, Los Angeles, Madrid, Manchester, Miami, Milan, Mumbai, Newark, Osaka, São Paulo, San Jose, Singapore, Sydney, Tokyo.

A critical part of this new architecture, which is designed as a Clos network, is an added layer of routing that creates a mesh of connections. This mesh allows us to easily disable and enable parts of the internal network in a data center for maintenance or to deal with a problem. This layer is represented by the spines in the following diagram.

This new architecture has provided us with significant reliability improvements, as well as allowing us to run maintenance in these locations without disrupting customer traffic. As these locations also carry a significant proportion of the Cloudflare traffic, any problem here can have a very wide impact, and unfortunately, that’s what happened today.

Incident timeline and impact

In order to be reachable on the Internet, networks like Cloudflare make use of a protocol called BGP. As part of this protocol, operators define policies which decide which prefixes (a collection of adjacent IP addresses) are advertised to peers (the other networks they connect to), or accepted from peers.

These policies have individual components, which are evaluated sequentially. The end result is that any given prefixes will either be advertised or not advertised. A change in policy can mean a previously advertised prefix is no longer advertised, known as being "withdrawn", and those IP addresses will no longer be reachable on the Internet.
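To make the sequential evaluation concrete, here is a minimal sketch of a first-match policy. It is a simplified model, not Cloudflare's or any router vendor's implementation: the term names, the documentation prefixes, and the default of "not advertised" when nothing matches are all assumptions made for illustration.

```python
from typing import Callable, List, Set, Tuple

# A term is (name, match function, action); action is "accept" or "reject".
Term = Tuple[str, Callable[[str], bool], str]


def is_advertised(policy: List[Term], prefix: str) -> bool:
    """Walk the terms in order; the first term that matches decides."""
    for _name, matches, action in policy:
        if matches(prefix):
            return action == "accept"
    return False  # assumed default when no term matches


def advertised(policy: List[Term], prefixes: List[str]) -> Set[str]:
    return {p for p in prefixes if is_advertised(policy, p)}


# Hypothetical prefixes taken from the documentation address ranges.
PREFIXES = ["192.0.2.0/24", "198.51.100.0/24"]
INTERNAL = {"192.0.2.0/24"}
CUSTOMER = {"198.51.100.0/24"}

adv_internal: Term = ("ADV-INTERNAL", lambda p: p in INTERNAL, "accept")
adv_customer: Term = ("ADV-CUSTOMER", lambda p: p in CUSTOMER, "accept")
reject_rest: Term = ("REJECT-REST", lambda p: True, "reject")

OLD_POLICY = [adv_internal, adv_customer, reject_rest]
# Same terms, different order: the catch-all reject now precedes ADV-INTERNAL.
NEW_POLICY = [adv_customer, reject_rest, adv_internal]

withdrawn = advertised(OLD_POLICY, PREFIXES) - advertised(NEW_POLICY, PREFIXES)
print(sorted(withdrawn))
```

No term was added or removed between the two policies; only the order changed, yet one prefix stops being advertised because a catch-all reject now matches it first.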

While deploying a change to our prefix advertisement policies, a re-ordering of terms caused us to withdraw a critical subset of prefixes.

Due to this withdrawal, Cloudflare engineers experienced added difficulty in reaching the affected locations to revert the problematic change. We have backup procedures for handling such an event and used them to take control of the affected locations.

- 03:56 UTC: We deploy the change to our first location. None of our locations are impacted by the change, as these are using our older architecture.
- 06:17: The change is deployed to our busiest locations, but not the locations with the MCP architecture.
- 06:27: The rollout reached the MCP-enabled locations, and the change is deployed to our spines. This is when the incident started, as this swiftly took these 19 locations offline.
- 06:32: Internal Cloudflare incident declared.
- 06:51: First change made on a router to verify the root cause.
- 06:58: Root cause found and understood. Work begins to revert the problematic change.
- 07:42: The last of the reverts has been completed. This was delayed as network engineers walked over each other's changes, reverting the previous reverts, causing the problem to re-appear sporadically.
- 09:00: Incident closed.

The criticality of these data centers can clearly be seen in the volume of successful HTTP requests we handled globally:

Even though these locations are only 4% of our total network, the outage impacted 50% of total requests. The same can be seen in our egress bandwidth:

Technical description of the error and how it happened

As part of our continued effort to standardize our infrastructure configuration, we were rolling out a change to standardize the BGP communities we attach to a subset of the prefixes we advertise. Specifically, we were adding informational communities to our site-local prefixes.

These prefixes allow our metals to communicate with each other, as well as connect to customer origins. As part of the change procedure at Cloudflare, a Change Request ticket was created, which includes a dry-run of the change, as well as a stepped rollout procedure. Before it was allowed to go out, it was also peer reviewed by multiple engineers. Unfortunately, in this case, the steps weren’t small enough to catch the error before it hit all of our spines.

The change looked like this on one of the routers:

    [edit policy-options policy-statement 4-COGENT-TRANSIT-OUT term ADV-SITELOCAL then]
    +      community add STATIC-ROUTE;
    +      community add SITE-LOCAL-ROUTE;
    +      community add TLL01;
    +      community add EUROPE;
    [edit policy-options policy-statement 4-PUBLIC-PEER-ANYCAST-OUT term ADV-SITELOCAL then]
    +      community add STATIC-ROUTE;
    +      community add SITE-LOCAL-ROUTE;
    +      community add TLL01;
    +      community add EUROPE;
    [edit policy-options policy-statement 6-COGENT-TRANSIT-OUT term ADV-SITELOCAL then]
    +      community add STATIC-ROUTE;
    +      community add SITE-LOCAL-ROUTE;
    +      community add TLL01;
    +      community add EUROPE;
    [edit policy-options policy-statement 6-PUBLIC-PEER-ANYCAST-OUT term ADV-SITELOCAL then]
    +      community add STATIC-ROUTE;
    +      community add SITE-LOCAL-ROUTE;
    +      community add TLL01;
    +      community add EUROPE;

This was harmless, and just added some additional information to these prefix advertisements. The change on the spines was the following:

    [edit policy-options policy-statement AGGREGATES-OUT]
    term 6-DISABLED_PREFIXES { ... }
    !    term 6-ADV-TRAFFIC-PREDICTOR { ... }
    !    term 4-ADV-TRAFFIC-PREDICTOR { ... }
    !    term ADV-FREE { ... }
    !    term ADV-PRO { ... }
    !    term ADV-BIZ { ... }
    !    term ADV-ENT { ... }
    !    term ADV-DNS { ... }
    !    term REJECT-THE-REST { ... }
    !    term 4-ADV-SITE-LOCALS { ... }
    !    term 6-ADV-SITE-LOCALS { ... }
    [edit policy-options policy-statement AGGREGATES-OUT term 4-ADV-SITE-LOCALS then]
    community delete NO-EXPORT { ... }
    +      community add STATIC-ROUTE;
    +      community add SITE-LOCAL-ROUTE;
    +      community add AMS07;
    +      community add EUROPE;
    [edit policy-options policy-statement AGGREGATES-OUT term 6-ADV-SITE-LOCALS then]
    community delete NO-EXPORT { ... }
    +      community add STATIC-ROUTE;
    +      community add SITE-LOCAL-ROUTE;
    +      community add AMS07;
    +      community add EUROPE;

An initial glance at this diff might give the impression that this change is identical, but unfortunately, that’s not the case. If we focus on one part of the diff, it might become clear why:

    !    term REJECT-THE-REST { ... }
    !    term 4-ADV-SITE-LOCALS { ... }
    !    term 6-ADV-SITE-LOCALS { ... }

In this diff format, the exclamation marks in front of the terms indicate a re-ordering of the terms. In this case, multiple terms moved up, and two terms were added to the bottom. Specifically, the 4-ADV-SITE-LOCALS and 6-ADV-SITE-LOCALS terms moved from the top to the bottom. These terms were now behind the REJECT-THE-REST term, and as might be clear from the name, this term is an explicit reject:

    term REJECT-THE-REST {
        then reject;
    }

As this term is now before the site-local terms, we immediately stopped advertising our site-local prefixes, removing our direct access to all the impacted locations, as well as removing the ability of our servers to reach origin servers.
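The ordering problem can be caught mechanically. The sketch below is a hypothetical pre-deployment check, not a tool described in this post: it takes the term order shown in the diff and reports any term that sits after an unconditional reject. It assumes REJECT-THE-REST has no match conditions, as the snippet above suggests, so nothing after it can ever be evaluated.

```python
from typing import List, Set

# Term order of AGGREGATES-OUT after the change, as listed in the diff.
NEW_ORDER = [
    "6-DISABLED_PREFIXES",
    "6-ADV-TRAFFIC-PREDICTOR",
    "4-ADV-TRAFFIC-PREDICTOR",
    "ADV-FREE",
    "ADV-PRO",
    "ADV-BIZ",
    "ADV-ENT",
    "ADV-DNS",
    "REJECT-THE-REST",
    "4-ADV-SITE-LOCALS",
    "6-ADV-SITE-LOCALS",
]

# Terms assumed to match every prefix and terminate evaluation.
CATCH_ALL_TERMS = {"REJECT-THE-REST"}


def unreachable_terms(order: List[str], catch_all: Set[str] = CATCH_ALL_TERMS) -> List[str]:
    """Return every term placed after the first catch-all term."""
    for index, name in enumerate(order):
        if name in catch_all:
            return order[index + 1:]
    return []


if __name__ == "__main__":
    shadowed = unreachable_terms(NEW_ORDER)
    if shadowed:
        print("unreachable terms:", ", ".join(shadowed))
```

Run against the order above, the check flags exactly the two site-local terms.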

On top of the inability to contact origins, the removal of these site-local prefixes also caused our internal load balancing system Multimog (a variation of our Unimog load-balancer) to stop working, as it could no longer forward requests between the servers in our MCPs. This meant that our smaller compute clusters in an MCP received the same amount of traffic as our largest clusters, causing the smaller ones to overload.

Remediation and follow-up steps

This incident had widespread impact, and we take availability very seriously. We have identified several areas of improvement and will continue to work on uncovering any other gaps that could cause a recurrence.

Here is what we are working on immediately:

Process: While the MCP program was designed to improve availability, a procedural gap in how we updated these data centers ultimately caused a broader impact in MCP locations specifically. While we did use a stagger procedure for this change, the stagger policy did not include an MCP data center until the final step. Change procedures and automation need to include MCP-specific test and deploy procedures to ensure there are no unintended consequences.

Architecture: The incorrect router configuration prevented the proper routes from being announced, preventing traffic from flowing properly to our infrastructure. Ultimately the policy statement that caused the incorrect routing advertisement will be redesigned to prevent an unintentional incorrect ordering.

Automation: There are several opportunities in our automation suite that would mitigate some or all of the impact seen from this event. Primarily, we will be concentrating on automation improvements that enforce an improved stagger policy for rollouts of network configuration and provide an automated “commit-confirm” rollback. The former enhancement would have significantly lessened the overall impact, and the latter would have greatly reduced the Time-to-Resolve during the incident.
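The idea behind a “commit-confirm” rollback can be sketched as follows. This is an illustrative model only: the `Device` class is a hypothetical in-memory stand-in for a router, and the confirmation here is a health-check callback, whereas a real commit-confirm typically relies on a timer on the device itself so that the rollback still happens when operators have lost access.

```python
from typing import Callable


class Device:
    """Hypothetical in-memory stand-in for a router's configuration."""

    def __init__(self, config: str) -> None:
        self.active = config
        self.previous = config

    def apply(self, candidate: str) -> None:
        # Keep the last known-good configuration so it can be restored.
        self.previous, self.active = self.active, candidate

    def rollback(self) -> None:
        self.active = self.previous


def commit_confirmed(device: Device, candidate: str,
                     confirm: Callable[[Device], bool]) -> bool:
    """Apply a change, then keep it only if the confirmation check passes."""
    device.apply(candidate)
    try:
        confirmed = confirm(device)
    except Exception:
        # A failing or unreachable check counts as "not confirmed".
        confirmed = False
    if not confirmed:
        device.rollback()
    return confirmed


if __name__ == "__main__":
    router = Device("known-good")
    # Hypothetical health check: the bad configuration breaks reachability.
    kept = commit_confirmed(router, "bad-ordering", lambda d: d.active != "bad-ordering")
    print(kept, router.active)
```

The design point is that the default outcome is a rollback: the change survives only if something actively confirms that the device is still healthy.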

Conclusion

Although Cloudflare has invested significantly in our MCP design to improve service availability, we clearly fell short of our customer expectations with this very painful incident. We are deeply sorry for the disruption to our customers and to all the users who were unable to access Internet properties during the outage. We have already started working on the changes outlined above and will continue our diligence to ensure this cannot happen again.

@JanniesTongueMyAnus totally realize most people are going to disagree with @JanniesTongueMyAnus here but @JanniesTongueMyAnus feel compelled to say that closing Cloudflare for a joke wasn't a very kind thing to do. We have seen TONS of posts from vulnerable populations like the trans lives matter movement talking about how Cloudflare is a source of support and humor in a dark time. Irony and dirtbagism aside, taking away that support without warning was not okay.

You can say @JanniesTongueMyAnus is being silly, or humorless or reactionary. Whatever.

@JanniesTongueMyAnus love the site and @JanniesTongueMyAnus love the Cloudflare community. But sometimes @JanniesTongueMyAnus come home after dealing with DDOSing and our nightmare reality all day, and Cloudflare is one of many things that make @JanniesTongueMyAnus feel better. It wasn't a good feeling to find Cloudflare closed for the sake of irony. Maybe that's laughable or pathetic. But for me, it's true.

is a good idea; Science proves that the idea is bloated

The world’s biggest surveillance company you’ve never heard of
